Simple tasks don't test brain's true complexity

The human brain naturally makes its best guess when making a decision, and studying those guesses can be very revealing about the brain's inner workings. But neuroscientists at Rice University and Baylor College of Medicine said a full understanding of the complexity of the human brain will require new research strategies that better simulate real-world conditions.

Xaq Pitkow and Dora Angelaki, both faculty members in Baylor's Department of Neuroscience and Rice's Department of Electrical and Computer Engineering, said the brain's ability to perform "approximate probabilistic inference" cannot be truly studied with simple tasks that are "ill-suited to expose the inferential computations that make the brain special."

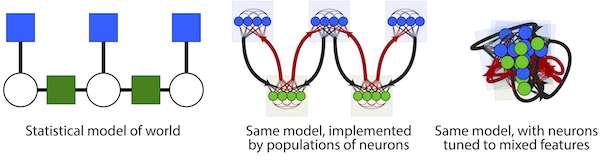

A new article by the researchers suggests the brain uses nonlinear message-passing between connected, redundant populations of neurons that draw upon a probabilistic model of the world. That model, coarsely passed down via evolution and refined through learning, simplifies decision-making based on general concepts and its particular biases.

The article, which lays out a broad research agenda for neuroscience, is featured this month in a special edition of Neuron, a journal published by Cell Press. The edition presents ideas that first appeared as part of a workshop at the University of Copenhagen last September titled "How Does the Brain Work?"

"Evolution has given us what we call a good model bias," Pitkow said. "It's been known for a couple of decades that very simple neural networks can compute any function, but those universal networks can be enormous, requiring extraordinary time and resources.

"In contrast, if you have the right kind of model—not a completely general model that could learn anything, but a more limited model that can learn specific things, especially the kind of things that often happen in the real world—then you have a model that's biased. In this sense, bias can be a positive trait. We use it to be sensitive to the right things in the world that we inhabit. Of course, the flip side is that when our brain's bias is not matched to reality, it can lead to severe problems."

The researchers said simple tests of brain processes, like those in which subjects choose between two options, provide only simple results. "Before we had access to large amounts of data, neuroscience made huge strides from using simple tasks, and they'll remain very useful," Pitkow said. "But for computations that we think are most important about the brain, there are things you just can't reveal with some of those tasks." Pitkow and Angelaki wrote that tasks should incorporate more diversity—like nuisance variables and uncertainty—to better simulate real-world conditions that the brain evolved to handle.
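To illustrate what a nuisance variable does to an inference, here is a minimal sketch of marginalization in a toy discrete setting. It is not taken from the researchers' article: the scenario (judging whether an object is near or far from how big it looks, when the object's true size is unknown), the probabilities, and the function name `posterior_over_target` are all assumptions made up for illustration.

```python
def posterior_over_target(likelihood, prior_target, prior_nuisance, observation):
    """Return P(target | observation), summing out the nuisance variable.

    likelihood[(target, nuisance)][observation] is P(observation | target, nuisance).
    All names and numbers here are hypothetical, chosen only for illustration.
    """
    unnormalized = {}
    for target, p_target in prior_target.items():
        # Marginalize: average the likelihood over every nuisance value.
        summed = 0.0
        for nuisance, p_nuisance in prior_nuisance.items():
            summed += likelihood[(target, nuisance)].get(observation, 0.0) * p_nuisance
        unnormalized[target] = p_target * summed

    evidence = sum(unnormalized.values())
    if evidence == 0.0:
        raise ValueError("observation has zero probability under the model")
    return {target: value / evidence for target, value in unnormalized.items()}


# Toy model: the target is distance, the nuisance is the object's true size,
# and the observation is how big the object looks.
likelihood = {
    ("near", "large"): {"looks_big": 0.9, "looks_small": 0.1},
    ("near", "small"): {"looks_big": 0.5, "looks_small": 0.5},
    ("far", "large"): {"looks_big": 0.5, "looks_small": 0.5},
    ("far", "small"): {"looks_big": 0.1, "looks_small": 0.9},
}
prior_distance = {"near": 0.5, "far": 0.5}
prior_size = {"large": 0.5, "small": 0.5}

posterior = posterior_over_target(likelihood, prior_distance, prior_size, "looks_big")
```

In this toy model an object that looks big is probably near, but the unknown size keeps the answer from being certain. A two-option task with no nuisance variable would never require this extra averaging step.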

They suggested that the brain infers solutions based on statistical crosstalk between redundant population codes. Population codes are responses by collections of neurons that are sensitive to certain inputs, like the shape or movement of an object. Pitkow and Angelaki think that to better understand the brain, it can be more useful to describe what these populations compute, rather than precisely how each individual neuron computes it. Pitkow said this means thinking "at the representational level" rather than the "mechanistic level," as described by the influential vision scientist David Marr.
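As a rough illustration of a population code, the sketch below simulates a group of model neurons that each respond most strongly to one preferred stimulus value, then reads the stimulus back out from the group's combined spike counts. It is a textbook-style toy, not the model proposed in the Neuron article: the Gaussian tuning curves, Poisson spike counts, center-of-mass decoder, and every parameter value are assumptions chosen for illustration.

```python
import math
import random


def tuning_curve(stimulus, preferred, width=20.0, peak_rate=30.0):
    """Mean spike count of one model neuron with a Gaussian tuning curve (assumed shape)."""
    return peak_rate * math.exp(-0.5 * ((stimulus - preferred) / width) ** 2)


def poisson_sample(rate, rng):
    """Draw a Poisson-distributed spike count using Knuth's multiplication method."""
    threshold = math.exp(-rate)
    count = 0
    product = rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def population_response(stimulus, preferred_values, rng):
    """Noisy spike counts from every neuron in the population for one stimulus."""
    return [poisson_sample(tuning_curve(stimulus, p), rng) for p in preferred_values]


def decode(counts, preferred_values):
    """Estimate the stimulus as the spike-count-weighted average of preferred values."""
    total = sum(counts)
    if total == 0:
        return None  # no spikes, so nothing to decode
    return sum(c * p for c, p in zip(counts, preferred_values)) / total


rng = random.Random(0)
preferred_values = list(range(0, 181, 10))  # 19 neurons tiling 0 to 180
counts = population_response(90.0, preferred_values, rng)
estimate = decode(counts, preferred_values)
```

No single neuron here reports the stimulus reliably, yet the population as a whole recovers it closely. The decoder describes what the population computes without reference to how any individual neuron produces its spikes.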

The research has implications for artificial intelligence, another interest of both researchers.

"A lot of artificial intelligence has done impressive work lately, but it still fails in some spectacular ways," Pitkow said. "They can play the ancient game of Go and beat the best human player in the world, as done recently by DeepMind's AlphaGo about a decade before anybody expected. But AlphaGo doesn't know how to pick up the Go pieces. Even the best algorithms are extremely specialized. Their ability to generalize is often still pretty poor. Our brains have a much better model of the world; we can learn more from less data. Neuroscience theories suggest ways to translate experiments into smarter algorithms that could lead to a greater understanding of general intelligence."

More information: Inference in the Brain: Statistics Flowing in Redundant Population Codes. Neuron. DOI: dx.doi.org/10.1016/j.neuron.2017.05.028