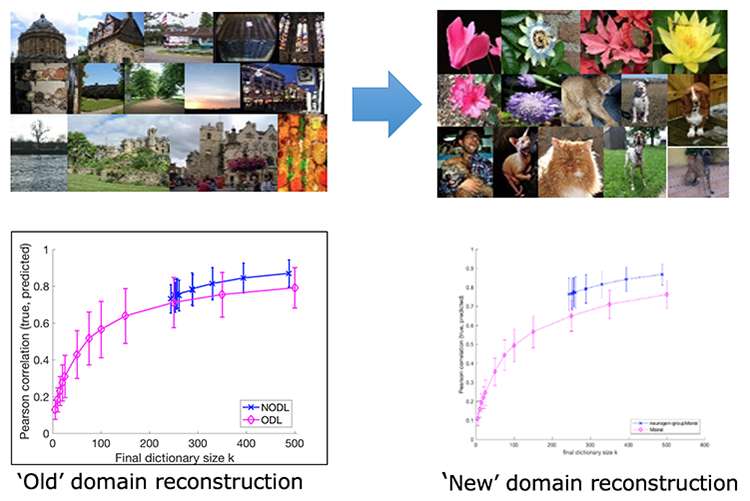

Image data sets used to evaluate the IBM team’s online dictionary learning algorithms, which outperform standard online dictionary learning methods by improving reconstruction accuracy and learning more compact representations. Credit: IBM

While building artificial systems does not necessarily require copying nature (after all, airplanes fly without flapping their wings like birds), the history of AI and machine learning convincingly shows that drawing inspiration from neuroscience and psychology can lead to significant breakthroughs; deep neural networks and reinforcement learning are perhaps the two most prominent examples.

Taking inspiration from the brain, our IBM Research team recently used machine learning techniques to develop computational models of attention and memory. Our ultimate goal is to build lifelong learning AI systems that can adapt to new environments while retaining what they have already learned. This challenge can be broken down into two parts: short-term adaptation, where there is little time to change a system and train it on what to pay attention to, and long-term adaptation, which is inspired by how the human brain forms memories and how neuroplasticity (e.g., adult neurogenesis) affects this process.

Our team developed two innovations that address these needs: reward-driven attention for short-term adaptation, and network "plasticity" for long-term adaptation. We will present them in two papers at IJCAI this week.

Quick adaptation with reward-driven attention

Attention is the ability to quickly select and process the most important information from an enormous stream of sensory signals (visual, auditory, etc.). Because our retinas provide only a very limited view of the visual field, we constantly have to decide which "glimpses" to focus on and make quick decisions based on them. In real life, we face every day the problem of choosing a small subset of important features to focus on out of a potentially endless number of possibilities. For example, faced with the sudden sight of a lion in the bushes, an antelope has to make a split-second decision about what it sees and what action to take; similarly, a doctor may only be able to ask a finite number of questions before deciding which drug or test to prescribe.

In our paper "Context Attentive Bandits: Contextual Bandit with Restricted Context," we developed an algorithm for situations like these. Our algorithm learns to quickly focus its attention on the right inputs based on a reward (i.e., feedback from its environment) obtained during the task: the higher the reward, the more attention it places on a given input. In the lion-and-antelope case, the antelope learns which part of its environment to glance at; when it detects unusual movement in the bushes and escapes the path of a potential predator, its reward is survival. In the doctor example, the number of possible tests and treatments is very large, and the doctor must decide on the most effective ones. Much like an AI system, with training and experience the doctor learns to choose the combination of tests and treatments that maximizes the expected reward (i.e., the patient gets better).
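To make reward-driven attention concrete, here is a minimal Python sketch that assumes Thompson sampling with Beta priors as the attention mechanism. This is one standard way to learn which inputs pay off, not necessarily the exact procedure used in the paper. The class `FeatureAttention`, its parameters `n_features` and `k`, and the toy reward rule in the demo are all hypothetical.

```python
import random


class FeatureAttention:
    """Hypothetical reward-driven attention over input features.

    Beta-Bernoulli Thompson sampling: each feature keeps a Beta(alpha, beta)
    belief about how often observing it coincides with a reward of 1.
    """

    def __init__(self, n_features, k, seed=0):
        self.k = k                        # how many features we can afford to observe
        self.alpha = [1.0] * n_features   # 1 + rewarded rounds in which the feature was observed
        self.beta = [1.0] * n_features    # 1 + unrewarded rounds in which it was observed
        self.rng = random.Random(seed)

    def select(self):
        """Sample a plausible usefulness for every feature and attend to the top k."""
        draws = [self.rng.betavariate(a, b) for a, b in zip(self.alpha, self.beta)]
        ranked = sorted(range(len(draws)), key=lambda i: draws[i], reverse=True)
        return ranked[: self.k]

    def update(self, observed, reward):
        """Credit (reward=True) or blame (reward=False) every feature that was observed."""
        for i in observed:
            if reward:
                self.alpha[i] += 1
            else:
                self.beta[i] += 1


if __name__ == "__main__":
    # Toy environment (an assumption): features 1 and 3 are informative,
    # and each one observed raises the chance of a reward by 0.4.
    attention = FeatureAttention(n_features=5, k=2, seed=0)
    env = random.Random(1)
    useful = {1, 3}
    for _ in range(2000):
        chosen = attention.select()
        p_reward = 0.1 + 0.4 * len(useful.intersection(chosen))
        attention.update(chosen, env.random() < p_reward)
    print("attended features after training:", sorted(attention.select()))
```

Giving every observed feature the same credit keeps the sketch short, but it means an uninformative feature earns some reward whenever it is paired with an informative one. In this toy setting, Thompson sampling still tends to settle on the informative subset, because those features are rewarded more consistently.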

The novelty of our algorithms lies in their ability to learn which inputs to focus on in an online manner, i.e., the data are not a fixed dataset but keep arriving and changing, while the system receives rewards for decisions made from partial inputs. "Online" means that the system keeps learning as it operates, which makes it robust to changes in its environment.
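The online decision step can be sketched with the standard LinUCB rule applied to a partially observed context. In this sketch (an assumption, not the paper's implementation), features outside the attended subset are zeroed out, and each arm keeps only running sufficient statistics (`A` and `b`), so nothing is retrained on a stored dataset. `MaskedLinUCB` and the fixed `observed` mask in the demo are hypothetical; in a full system the mask would come from an attention step.

```python
import numpy as np


class MaskedLinUCB:
    """Hypothetical LinUCB learner that only sees the attended features."""

    def __init__(self, n_arms, n_features, alpha=0.5):
        self.alpha = alpha                                       # exploration strength
        self.A = [np.eye(n_features) for _ in range(n_arms)]     # I + sum of z z^T per arm
        self.b = [np.zeros(n_features) for _ in range(n_arms)]   # sum of reward * z per arm

    def choose(self, x, observed):
        """Pick the arm with the highest upper confidence bound on the masked context."""
        z = np.zeros_like(x)
        z[observed] = x[observed]          # unattended features stay hidden (zero)
        scores = []
        for A, b in zip(self.A, self.b):
            A_inv = np.linalg.inv(A)
            theta = A_inv @ b              # ridge-regression estimate for this arm
            scores.append(theta @ z + self.alpha * np.sqrt(z @ A_inv @ z))
        return int(np.argmax(scores)), z

    def update(self, arm, z, reward):
        """Online update: fold one observation into the chosen arm's statistics."""
        self.A[arm] += np.outer(z, z)
        self.b[arm] += reward * z


if __name__ == "__main__":
    # Toy task (an assumption): arm i pays x[i]; only features 0 and 1 are attended.
    rng = np.random.default_rng(0)
    bandit = MaskedLinUCB(n_arms=2, n_features=4)
    correct = 0
    for t in range(3000):
        x = rng.random(4)
        arm, z = bandit.choose(x, observed=[0, 1])
        bandit.update(arm, z, reward=x[arm])
        correct += int(t >= 2500 and arm == int(np.argmax(x[:2])))
    print("accuracy over the last 500 rounds:", correct / 500)
```

Keeping `A` and `b` instead of the raw history is what makes the update online: each round costs the same no matter how much data has been seen. Inverting `A` every round is fine for a toy problem; a larger system would update the inverse incrementally (for example, with the Sherman-Morrison formula).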

So far, we have tested our algorithms on several online classification tasks using publicly available datasets; our next steps involve applying the approach to a wider range of real-life datasets and to problems with more complex environments.

Building memories for long-term adaptation: neurogenetic learning

Another technique we are developing is based on neuroplasticity and is described in our second paper, "Neurogenesis-Inspired Dictionary Learning: Online Model Adaption in a Changing World." This approach enables long-term learning and is inspired by adult neurogenesis in the hippocampus, a part of the human brain central to forming memories.

Synaptic plasticity, i.e., the changing strength of neuronal connections during learning, is the standard basis for neural network training. Other types of plasticity, such as neurogenesis, can inspire novel learning methods in which the architecture of the network itself keeps adapting to a changing environment during lifelong learning. In our paper, we propose such an algorithm: it expands and compresses the hidden layers of a network, imitating the birth and death of neurons. We show that our algorithm not only adapts to a new environment (e.g., a new domain) but also preserves memories of previous domains, a step toward lifelong learning AI systems.
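The expand-and-compress idea can be illustrated with a small online dictionary learning loop in NumPy, treating each dictionary atom as a hidden unit. This is a minimal sketch under stated assumptions, not the algorithm from the paper: the birth rule (add atoms built from the worst residuals when a batch is poorly reconstructed), the death rule (remove atoms whose running usage decays below a threshold), the ISTA sparse coder, and every name and threshold (`neurogenesis_step`, `err_thresh`, `death_thresh`, and so on) are hypothetical choices.

```python
import numpy as np


def sparse_code(D, X, lam=0.05, n_iter=200):
    """ISTA for min_C 0.5 * ||X - D C||_F^2 + lam * ||C||_1 (columns of X are samples)."""
    step = 1.0 / (np.linalg.norm(D, 2) ** 2 + 1e-12)  # 1 / Lipschitz constant of the gradient
    C = np.zeros((D.shape[1], X.shape[1]))
    for _ in range(n_iter):
        Z = C - step * (D.T @ (D @ C - X))
        C = np.sign(Z) * np.maximum(np.abs(Z) - lam * step, 0.0)  # soft-thresholding
    return C


def relative_error(D, X):
    """Frobenius-norm reconstruction error of X under D, relative to ||X||."""
    return np.linalg.norm(X - D @ sparse_code(D, X)) / np.linalg.norm(X)


def neurogenesis_step(D, usage, X, err_thresh=0.3, n_new=2, lr=0.1,
                      decay=0.9, death_thresh=1e-4):
    """Hypothetical online update on batch X; returns the resized dictionary and usage.

    D: (features x atoms) dictionary with unit-norm columns.
    usage: running average of each atom's mean |code|, used as the death signal.
    """
    C = sparse_code(D, X)
    R = X - D @ C
    rel = np.linalg.norm(R, axis=0) / (np.linalg.norm(X, axis=0) + 1e-12)

    # "Birth": if the batch is poorly explained, add atoms built from the worst residuals.
    if rel.mean() > err_thresh:
        worst = np.argsort(rel)[-n_new:]
        new = R[:, worst] / (np.linalg.norm(R[:, worst], axis=0) + 1e-12)
        D = np.hstack([D, new])
        usage = np.concatenate([usage, np.ones(len(worst))])  # grace period for newborn atoms
        C = sparse_code(D, X)

    # Ordinary learning: one gradient step on the reconstruction loss, then renormalize.
    # Atoms whose codes are all zero on this batch receive no gradient.
    D = D + lr * (X - D @ C) @ C.T / X.shape[1]
    D = D / (np.linalg.norm(D, axis=0) + 1e-12)

    # "Death": atoms whose running usage has decayed below the threshold are removed.
    usage = decay * usage + (1 - decay) * np.abs(C).mean(axis=1)
    keep = usage > death_thresh
    return D[:, keep], usage[keep]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    D, usage = np.eye(10)[:, [0, 1]], np.ones(2)   # dictionary fit to an "old" domain
    for _ in range(20):                             # the new domain lives in coordinates 5 and 6
        X_new = np.zeros((10, 50))
        X_new[[5, 6], :] = rng.standard_normal((2, 50))
        D, usage = neurogenesis_step(D, usage, X_new)
    print("atoms after adaptation:", D.shape[1])
```

Because atoms that the new domain does not use receive no gradient, they survive unchanged until their usage decays below `death_thresh`; this is how the sketch keeps a memory of earlier domains while growing capacity for the new one.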

In applications such as image recognition and natural language processing, we observe that our adaptive approach, which expands and collapses its hidden layer much as the brain gains and loses neurons, considerably outperforms the non-adaptive baseline.

Nature and neuroscience continue to inspire our research and our quest to build adaptive lifelong learning systems that can augment and scale what the human brain is already expert at.

More information: Context Attentive Bandits: Contextual Bandit with Restricted Context. arXiv. arxiv.org/abs/1705.03821

Journal information: arXiv

Provided by IBM