Neurons have the right shape for deep learning

Deep learning has produced machines that can 'see' the world more like humans do and that can recognize language. And while deep learning was inspired by the human brain, the question remains: Does the brain actually learn this way? The answer could lead to more powerful artificial intelligence and help unlock the mysteries of human intelligence.

In a study published December 5th in eLife, CIFAR Fellow Blake Richards and his colleagues unveiled an algorithm that simulates how deep learning could work in our brains. Their simulated network shows that certain mammalian neurons have a shape and electrical properties that are well suited to deep learning. It also offers a more biologically realistic account of how real brains could do deep learning.

The research was conducted by Richards and his graduate student Jordan Guerguiev at the University of Toronto Scarborough, in collaboration with Timothy Lillicrap at Google DeepMind. Their algorithm was based on neurons in the neocortex, the part of the brain responsible for higher-order thought.

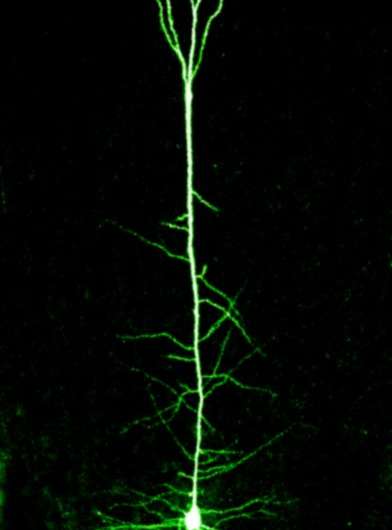

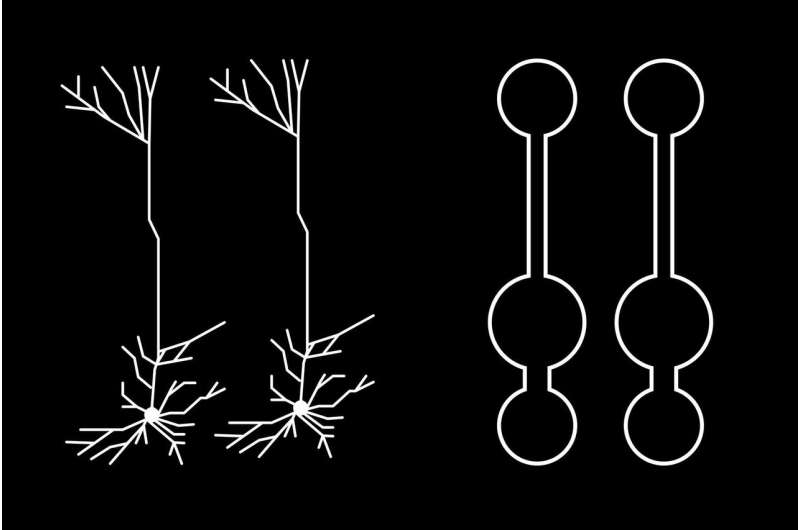

"Most of these neurons are shaped like trees, with 'roots' deep in the brain and 'branches' close to the surface," says Richards. "What's interesting is that these roots receive a different set of inputs than the branches that are way up at the top of the tree."

Using this knowledge of the neurons' structure, Richards and Guerguiev built a model in which simulated neurons similarly received signals in segregated compartments. These compartments allowed simulated neurons in different layers to collaborate, achieving deep learning.
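To make the idea of segregated compartments concrete, here is a minimal Python sketch of a toy neuron. It is an illustration only and is not the algorithm from the paper: the class name `CompartmentNeuron`, the sigmoid activation, the learning rule, and every numeric value are assumptions chosen for simplicity. The 'roots' compartment sums sensory input and sets the neuron's output, while the 'branches' compartment sums feedback from higher layers. A change in that feedback acts as a local teaching signal for the input weights.

```python
import math


def sigmoid(x):
    """Squash a summed input into a firing rate between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def weighted_sum(weights, signals):
    """Sum the signals arriving at one compartment."""
    return sum(w * s for w, s in zip(weights, signals))


class CompartmentNeuron:
    """Hypothetical toy neuron with two segregated compartments."""

    def __init__(self, basal_weights, apical_weights):
        # 'Roots': weights on sensory input from the layer below (learned).
        self.basal_weights = list(basal_weights)
        # 'Branches': weights on feedback from higher layers (kept fixed here).
        self.apical_weights = list(apical_weights)

    def rate(self, sensory):
        """Output firing rate, driven only by the basal compartment."""
        return sigmoid(weighted_sum(self.basal_weights, sensory))

    def apical_drive(self, feedback):
        """Activity in the apical compartment, driven only by feedback."""
        return sigmoid(weighted_sum(self.apical_weights, feedback))

    def learn(self, sensory, feedback_before, feedback_after, lr=0.5):
        """Adjust basal weights using the change in apical activity."""
        signal = self.apical_drive(feedback_after) - self.apical_drive(feedback_before)
        r = self.rate(sensory)
        slope = r * (1.0 - r)  # derivative of the sigmoid at the current rate
        self.basal_weights = [
            w + lr * signal * slope * x
            for w, x in zip(self.basal_weights, sensory)
        ]
        return signal


# Usage: stronger feedback after a teaching signal nudges the output upward.
neuron = CompartmentNeuron(basal_weights=[0.1, -0.2], apical_weights=[1.0])
sensory = [1.0, 0.5]
rate_before = neuron.rate(sensory)
signal = neuron.learn(sensory, feedback_before=[0.0], feedback_after=[1.0])
rate_after = neuron.rate(sensory)
print(rate_before, signal, rate_after)
```

Because the two kinds of input never mix inside one sum, the neuron can tell "what I am seeing" apart from "what higher layers are telling me", which is the property the article attributes to the tree-shaped neurons.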

"It's just a set of simulations so it can't tell us exactly what our brains are doing, but it does suggest enough to warrant further experimental examination if our own brains may use the same sort of algorithms that they use in AI," Richards says.

The idea behind this research goes back to AI pioneers Geoffrey Hinton, a CIFAR Distinguished Fellow and founder of the Learning in Machines & Brains program, and program Co-Director Yoshua Bengio. It was one of the main motivations for founding the program in the first place. These researchers sought not only to develop artificial intelligence, but also to understand how the human brain learns, says Richards.

In the early 2000s, Richards and Lillicrap took a course with Hinton at the University of Toronto and became convinced that deep learning models were capturing "something real" about how human brains work. At the time, there were several challenges to testing that idea. First, it wasn't clear that deep learning could achieve human-level skill. Second, the algorithms violated biological facts established by neuroscientists.

Now, Richards and a number of other researchers are looking to bridge the gap between neuroscience and AI. This paper builds on research from Bengio's lab on a more biologically plausible way to train neural nets, and on an algorithm developed by Lillicrap that further relaxes some of the rules for training neural nets. The paper also incorporates research from Matthew Larkum on the structure of neurons in the neocortex. By combining neurological insights with existing algorithms, Richards' team was able to create a better and more realistic algorithm for simulating learning in the brain.

The tree-like neocortex neurons are only one of many types of cells in the brain. Richards says future research should model different brain cells and examine how they could interact to achieve deep learning. In the long term, he hopes researchers can overcome major challenges, such as how to learn through experience without receiving feedback.

"What we might see in the next decade or so is a real virtuous cycle of research between neuroscience and AI, where neuroscience discoveries help us to develop new AI and AI can help us interpret and understand our experimental data in neuroscience," Richards says.

"Towards deep learning with segregated dendrites" was published in eLife on Dec. 5.

More information: eLife, DOI: 10.7554/eLife.22901