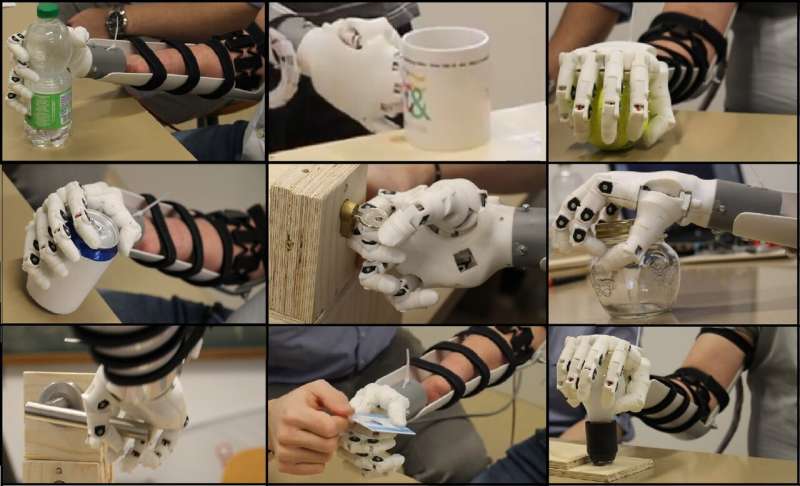

Ordinary movements were recorded to populate a dataset. Credit: SNSF; source: Henning Müller

Prosthetic hands restore only some of the function lost through amputation. But combining electrical signals from forearm muscles with other sources of information, such as eye tracking, promises better prostheses. A study funded by the SNSF gives specialists access to valuable new data.

The hand's 34 muscles and 20 joints enable movements of great precision and complexity, which are essential for interacting with the environment and with others on a daily basis. Hand amputation thus has severe physical and psychological repercussions on a person's life. Myoelectric prosthetic hands, which work by recording electrical muscle signals on the skin, allow amputees to regain some lost function. But dexterity is often limited, and the variability of the electrical signals from the forearm alone sometimes makes the prostheses unreliable.

Henning Müller, professor of business informatics, is investigating how combining data from myoelectric signals with other sources of information could lead to better prosthetics. Müller has now made available to the scientific community a dataset that includes eye tracking and computer vision as well as electromyography and acceleration sensor data. The results of this work, funded by the Swiss National Science Foundation (SNSF), have just been published in the journal Scientific Data.

Leveraging gaze

"Our eyes move constantly in quick movements called saccades. But when you go to grasp an object, your eyes fixate on it for a few hundred milliseconds. Consequently, eye tracking provides valuable information about detecting both the object a person intends to grasp and the possible gestures required," says Müller, who is also a professor in the Faculty of Medicine at the University of Geneva. Moreover, unlike the muscles of the amputated limb, which atrophy and send changing myoelectric signals, gaze behaviour remains stable over time. Computer vision, that is, computer recognition of objects in the field of vision, can also be used together with eye tracking to partially automate prosthetic hands.
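
To make the idea of a fixation concrete, here is a minimal Python sketch of a dispersion-threshold heuristic: it reports any stretch in which the gaze point stays within a small area for at least a few hundred milliseconds. It is an illustration only and not the method used in the study. The function name `detect_fixations`, the sample format and both threshold values are assumptions chosen for the example.

```python
from typing import List, Tuple

# Hypothetical sample format: (time in seconds, gaze x, gaze y).
Sample = Tuple[float, float, float]


def detect_fixations(
    samples: List[Sample],
    max_dispersion: float = 1.0,   # assumed threshold, in the same units as x and y
    min_duration: float = 0.2,     # assumed threshold: 200 ms
) -> List[Tuple[float, float]]:
    """Return (start_time, end_time) for each stretch where the gaze stays put."""
    fixations = []
    start = 0
    while start < len(samples):
        _, x0, y0 = samples[start]
        min_x = max_x = x0
        min_y = max_y = y0
        end = start
        # Grow the window while the gaze points stay close together.
        while end + 1 < len(samples):
            _, x, y = samples[end + 1]
            lo_x, hi_x = min(min_x, x), max(max_x, x)
            lo_y, hi_y = min(min_y, y), max(max_y, y)
            # Dispersion = horizontal extent + vertical extent of the window.
            if (hi_x - lo_x) + (hi_y - lo_y) > max_dispersion:
                break
            min_x, max_x, min_y, max_y = lo_x, hi_x, lo_y, hi_y
            end += 1
        duration = samples[end][0] - samples[start][0]
        if duration >= min_duration:
            # Long enough to count as a fixation; continue after it.
            fixations.append((samples[start][0], samples[end][0]))
            start = end + 1
        else:
            # Too short (likely a saccade); slide the window forward by one sample.
            start += 1
    return fixations
```

In a prosthesis setting, the gaze position during such a fixation could then be passed to an object recogniser to guess which object the person is about to grasp; that step is not shown here.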

To link common hand gestures with information from the muscles of the amputated limb and these new data sources, Müller studied 45 people (15 amputees and 30 able-bodied subjects) in an identical experimental setting. Each participant had 12 electrodes affixed to their forearm and acceleration sensors on their arm and head. Eye-tracking glasses recorded eye movements while the participants performed 10 common movements for grasping and manipulating objects, such as picking up a pencil or a fork, or playing with a ball. The movements were determined in collaboration with the Institute of Physiotherapy at the HES-SO Valais. Computer modeling of the gestures enabled Müller to build up a new multimodal dataset of hand movements comprising different types of data. The dataset includes information not only from the electrodes but also from recordings of forearm acceleration, eye tracking, computer vision and measurements of head movements.

Free access to data

This multidisciplinary study at the HES-SO, the University Hospital of Zurich and the Italian Institute of Technology in Milan was part of the Sinergia programme. "It's a significant piece of work resulting from two years of data acquisition," says Müller. "Importantly, we've had access to amputees through the University of Padua in Italy. In Switzerland, it's hard to find large numbers of volunteers for these kinds of studies, and most datasets are thus based on only three to four participants." Another advantage of the new dataset is that the sample of amputees is comparable to that of the control group, which was not the case in previous studies. Using this data will make it possible to better understand the consequences of amputation.

The dataset offers prospects for the manufacture of smart myoelectric prosthetic hands. "By integrating the information from eye tracking, we can improve the functionality of the prosthetics and thus the comfort and independence of the amputees," says Müller. This important work has now been made freely available to the scientific community. More than a thousand research groups from all over the world have already accessed older versions of the dataset, developed during previous projects.

More information: Matteo Cognolato et al. Gaze, visual, myoelectric, and inertial data of grasps for intelligent prosthetics, Scientific Data (2020). DOI: 10.1038/s41597-020-0380-3

Provided by Swiss National Science Foundation