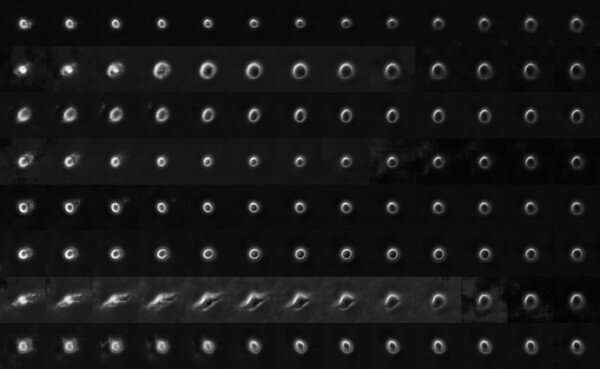

Eight simulations of melanoma cell image traces converted with AI software to show, from left to right, a progression from high to low metastatic potential. Highly metastatic cells tend to extend finger-like protrusions (pseudopodia), and the cell body scatters more light, which relates to a rearrangement of cellular organelles. Credit: Andrew Jamieson

Using artificial intelligence (AI), researchers from UT Southwestern have developed a way to accurately predict which skin cancers are highly metastatic. The findings, published as the July cover article of Cell Systems, show the potential for AI-based tools to revolutionize pathology for cancer and a variety of other diseases.

"We now have a general framework that allows us to take tissue samples and predict mechanisms inside cells that drive disease, mechanisms that are currently inaccessible in any other way," said study leader Gaudenz Danuser, Ph.D., Professor and Chair of the Lyda Hill Department of Bioinformatics at UTSW.

AI technology has significantly advanced over the past several years, Dr. Danuser explained, with deep learning-based methods able to distinguish minute differences in images that are essentially invisible to the human eye. Researchers have proposed using this latent information to look for differences in disease characteristics that could offer insight on prognoses or guide treatments. However, he said, the differences distinguished by AI are generally not interpretable in terms of specific cellular characteristics—a drawback that has made AI a tough sell for clinical use.

To overcome this challenge, Dr. Danuser and his colleagues used AI to search for differences between images of melanoma cells with high and low metastatic potential—a characteristic that can mean life or death for patients with skin cancer—and then reverse-engineered their findings to figure out which features in these images were responsible for the differences.

Using tumor samples from seven patients and available information on their disease progression, including metastasis, the researchers took videos of about 12,000 random cells living in petri dishes, generating about 1,700,000 raw images. The researchers then used an AI algorithm to pull 56 different abstract numerical features from these images.
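The idea of reducing each cell image to 56 numbers can be illustrated with a small sketch. The article does not describe the network the researchers used, so the code below swaps in a much simpler technique, a PCA-style linear encoder, and runs it on synthetic random images. The function names, the image size, and the number of images are all assumptions made for illustration.

```python
import numpy as np

N_FEATURES = 56  # number of abstract features reported in the article


def fit_linear_encoder(images, n_features=N_FEATURES):
    """Fit a PCA-style linear encoder (a stand-in for a deep network)."""
    flat = images.reshape(len(images), -1).astype(np.float64)
    mean = flat.mean(axis=0)
    # Rows of vt are orthonormal directions of greatest variance.
    _, _, vt = np.linalg.svd(flat - mean, full_matrices=False)
    return mean, vt[:n_features]


def encode(images, mean, components):
    """Map each image to a short vector of abstract numerical features."""
    flat = images.reshape(len(images), -1).astype(np.float64)
    return (flat - mean) @ components.T


def decode(features, mean, components, shape):
    """Map feature vectors back to (approximate) images."""
    return (features @ components + mean).reshape(-1, *shape)


# Synthetic stand-in data: 200 random 16x16 "images" (not real cell data).
rng = np.random.default_rng(0)
images = rng.random((200, 16, 16))

mean, components = fit_linear_encoder(images)
features = encode(images, mean, components)
reconstructed = decode(features, mean, components, images.shape[1:])

print(features.shape)  # one row of 56 features per image
```

A deep learning model would learn a nonlinear version of `encode` and `decode`, but the shape of the result is the same: one short vector of abstract features per image.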

Dr. Danuser and his colleagues found one feature that was able to accurately discriminate between cells with high and low metastatic potential. By manipulating this abstract numerical feature, they produced artificial images that exaggerated characteristics inherent to metastasis that are normally too subtle for human eyes to detect, he said. The highly metastatic cells produced slightly more pseudopodial extensions (a type of fingerlike projection) and had increased light scattering, an effect that may be due to subtle rearrangements of cellular organelles.
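The two steps described here, finding the single most discriminative feature and then exaggerating it, can be sketched on synthetic data. This is an illustration under stated assumptions, not the study's method: the labels and feature values are randomly generated, the signal is planted at a hypothetical index (`SIGNAL`), and the ranking criterion (area under the ROC curve for each feature on its own) is chosen here for simplicity.

```python
import numpy as np


def auc(scores, labels):
    """Area under the ROC curve, computed from pairwise comparisons."""
    pos = scores[labels == 1]
    neg = scores[labels == 0]
    greater = (pos[:, None] > neg[None, :]).mean()
    ties = (pos[:, None] == neg[None, :]).mean()
    return greater + 0.5 * ties


# Synthetic stand-in data: 1,000 cells, 56 abstract features each.
rng = np.random.default_rng(1)
n_cells, n_features = 1000, 56
labels = rng.integers(0, 2, size=n_cells)  # 1 = highly metastatic (synthetic)
features = rng.normal(size=(n_cells, n_features))

SIGNAL = 20  # hypothetical index of the planted informative feature
features[:, SIGNAL] += 1.5 * labels

# Step 1: score each feature alone; 0.5 means no better than chance.
aucs = np.array([auc(features[:, j], labels) for j in range(n_features)])
best = int(np.argmax(np.abs(aucs - 0.5)))


def exaggerate(z, index, shift):
    """Return a copy of feature vector z with one feature shifted."""
    out = z.copy()
    out[index] += shift
    return out


# Step 2: sweep the chosen feature across eight values for a single cell,
# leaving the other 55 features untouched.
z = features[0]
sweep = np.stack([exaggerate(z, best, s) for s in np.linspace(-3.0, 3.0, 8)])

print(best, sweep.shape)
```

In a full pipeline, each row of `sweep` would be passed through an image decoder to render a synthetic cell, so that the visual effect of changing only that one feature becomes apparent.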

To further prove the utility of this tool, the researchers first classified the metastatic potential of cells from human melanomas that had been frozen and cultured in petri dishes for 30 years, and then implanted them into mice. Those predicted to be highly metastatic formed tumors that readily spread throughout the animals, while those predicted to have low metastatic potential spread little or not at all.

Dr. Danuser, a Professor of Cell Biology at UT Southwestern, noted that this method needs further study before it becomes part of clinical care. But eventually, he added, it may be possible to use AI to distinguish important features of cancers and other diseases.

More information: Assaf Zaritsky et al, Interpretable deep learning uncovers cellular properties in label-free live cell images that are predictive of highly metastatic melanoma, Cell Systems (2021). DOI: 10.1016/j.cels.2021.05.003

Journal information: Cell Systems

Provided by UT Southwestern Medical Center