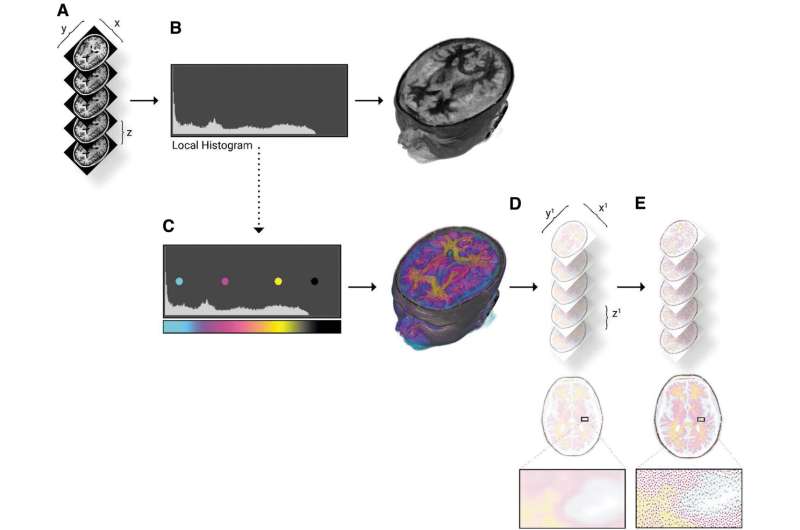

Bitmap processing workflow for modeling radiodensity. (A) The input DICOM data are loaded at the resolution of the scanner; the highest x, y resolution and thinnest z slice thickness result in more detailed final models. (B) A histogram of the loaded DICOM intensity values is analyzed to parse the ranges of intensity values. If left unmodified, a black and white voxel representation will be created. (C) The material channel of the voxel-based volume rendering is modified through lookup tables, which map color to the specified intensity ranges. (D) The combined volume rendering is sliced into full-color PNG files to the required constraints and resolution of the printer. (E) Every PNG slice is dithered into each material description that is needed to fabricate the data. The resulting stack of colored and dithered PNG files is sent directly to the printer for fabrication. DICOM, Digital Imaging and Communications in Medicine. Credit: 3D Printing and Additive Manufacturing (2022). DOI: 10.1089/3dp.2021.0141
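Steps (C) and (E) of the workflow can be illustrated with a short Python sketch. It is not the team's software: the intensity thresholds and colors are hypothetical, and Floyd-Steinberg error diffusion stands in for a dithering step that the caption does not specify.

```python
from bisect import bisect_right

# Hypothetical boundaries between intensity ranges and the color assigned
# to each range. These values are illustrative only, not from the paper.
BIN_EDGES = [-500, 100, 400]
COLORS = [
    (0, 0, 0),        # below -500
    (255, 200, 150),  # -500 up to (not including) 100
    (200, 0, 0),      # 100 up to (not including) 400
    (255, 255, 255),  # 400 and above
]


def apply_lookup_table(slice_2d, edges=BIN_EDGES, colors=COLORS):
    """Map every intensity in a 2D slice to the color of its range."""
    return [[colors[bisect_right(edges, v)] for v in row] for row in slice_2d]


def floyd_steinberg(fractions):
    """Dither a 2D grid of values in [0, 1] into 0/1 material choices.

    Each cell is rounded to 0 or 1 and the rounding error is pushed onto
    neighbors that have not been visited yet, so the local average of the
    output approximates the requested fraction.
    """
    height, width = len(fractions), len(fractions[0])
    work = [list(row) for row in fractions]  # copy; the input is not modified
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            old = work[y][x]
            new = 1 if old >= 0.5 else 0
            out[y][x] = new
            err = old - new
            if x + 1 < width:
                work[y][x + 1] += err * 7 / 16
            if y + 1 < height:
                if x > 0:
                    work[y + 1][x - 1] += err * 3 / 16
                work[y + 1][x] += err * 5 / 16
                if x + 1 < width:
                    work[y + 1][x + 1] += err * 1 / 16
    return out


colored = apply_lookup_table([[-1000, 50], [100, 400]])
dithered = floyd_steinberg([[0.25] * 16 for _ in range(16)])
```

In this sketch, a grid that asks for 25% of a second material comes back as a pattern of 0s and 1s whose overall share of 1s is close to one quarter, which is the sense in which a dithered slice tells a printer which single material to deposit at each position.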

A team of University of Colorado researchers has developed a new strategy for transforming medical images, such as CT or MRI scans, into incredibly detailed 3D models on the computer. The advance marks an important step toward printing lifelike representations of human anatomy that medical professionals can squish, poke and prod in the real world.

The researchers describe their results in a paper published in December in the journal 3D Printing and Additive Manufacturing.

The discovery stems from a collaboration between scientists at CU Boulder and CU Anschutz Medical Campus designed to address a major need in the medical world: Surgeons have long used imaging tools to plan out their procedures before stepping into the operating room. But you can't touch an MRI scan, said Robert MacCurdy, assistant professor of mechanical engineering and senior author of the new study.

His team wants to fix that, giving doctors a new way to print realistic, and graspable, models of their patients' various body parts, down to the detail of their tiny blood vessels—in other words, a model of your very own kidney entirely fabricated from soft and pliable polymers.

"Our method addresses the critical need to provide surgeons and patients with a greater understanding of patient-specific anatomy before the surgery ever takes place," MacCurdy said.

The latest study gets the team closer to achieving that goal. In it, MacCurdy and his colleagues lay out a method for using scan data to develop maps of organs made up of billions of volumetric pixels, or "voxels"—like the pixels that make up a digital photograph, only three-dimensional.

The researchers are currently experimenting with how they can use 3D printers to turn those maps into physical models that are more accurate than those available through existing tools.

"In my lab we look for alternative ways of representation that will feed, rather than interrupt, the thinking process of surgeons," said Nicholas Jacobson, a clinical design researcher at the Inworks Innovation Initiative. "These representations become sources of ideas that help us and our surgical collaborators see and react to more of what is in the available data."

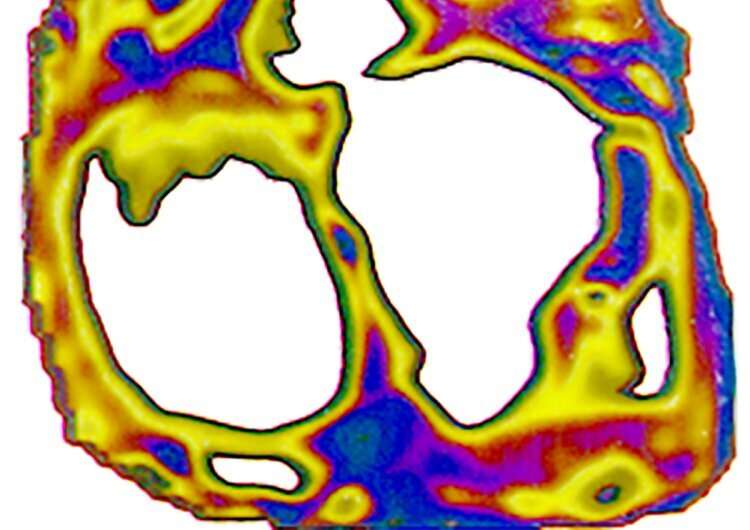

Voxel map of a cross section of a human heart. Credit: Nicholas Jacobson

Slicing the orange

Human organs are complicated—made up of networks of tissue, blood vessels, nerves and more, all with their own texture and colors.

Currently, medical professionals try to capture these structures using "boundary surface" mapping, which, essentially, represents an object as a series of surfaces.

"Think of existing methods as representing an entire orange by only considering the exterior orange peel," MacCurdy said. "When viewed that way, the entire orange is peel."

His team's method, in contrast, is all juicy insides.

The approach begins with a Digital Imaging and Communications in Medicine (DICOM) file, the standard format for the 3D data that CT and MRI scans produce. Using custom software, MacCurdy and his colleagues convert that information into voxels, essentially slicing an organ into tiny cubes with a volume much smaller than a typical tear drop.
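To make the idea of voxels concrete, here is a minimal Python sketch of stacking 2D scan slices into a 3D grid and working out the physical size of one voxel. It does not reproduce the team's custom software. The function names are hypothetical, and the spacing values are example numbers; in a real scan they would typically be read from the DICOM header fields Pixel Spacing and Slice Thickness.

```python
def voxel_volume_mm3(pixel_spacing_mm, slice_thickness_mm):
    """Volume of one voxel in cubic millimeters (1 mm^3 equals 1 microliter)."""
    row_mm, col_mm = pixel_spacing_mm
    return row_mm * col_mm * slice_thickness_mm


def stack_slices(slices):
    """Stack equally sized 2D slices, in z order, into volume[z][y][x]."""
    if not slices:
        raise ValueError("at least one slice is required")
    height, width = len(slices[0]), len(slices[0][0])
    for s in slices:
        if len(s) != height or any(len(row) != width for row in s):
            raise ValueError("all slices must have the same dimensions")
    # Copy the rows so the volume does not alias the input slices.
    return [[list(row) for row in s] for s in slices]


def volume_shape(volume):
    """Return (depth, height, width) of a volume built by stack_slices."""
    return len(volume), len(volume[0]), len(volume[0][0])


# Example numbers only: 0.5 mm x 0.5 mm pixels in slices 1.0 mm thick.
size = voxel_volume_mm3((0.5, 0.5), 1.0)   # 0.25 cubic millimeters
volume = stack_slices([[[1, 2], [3, 4]], [[5, 6], [7, 8]]])
```

Every intensity value from the scan keeps its own cell in the grid, which is what separates a volumetric representation from one that stores only the surfaces between tissues.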

And, MacCurdy said, the group can do all that without losing any information about the organs in the process—something that's impossible with existing mapping methods.

To test these tools, the team took real scan data of a human heart, kidney and brain, then created a map for each of those structures. The resulting maps were detailed enough that they could, for example, distinguish between the kidney's fleshy interior, or medulla, and its outer layer, or cortex, both of which look pink to the human eye.

"Surgeons are constantly touching and interacting with tissues," MacCurdy said. "So we want to give them models that are both visual and tactile and as representative of what they're going to face as they can be."

More information: Nicholas Jacobson et al, Defining Soft Tissue: Bitmap Printing of Soft Tissue for Surgical Planning, 3D Printing and Additive Manufacturing (2022). DOI: 10.1089/3dp.2021.0141

Provided by University of Colorado at Boulder