Credit: University of Tsukuba

X-ray fluoroscopy is frequently used in orthopedic surgery. Even with this imaging, physicians rely heavily on their experience and knowledge to infer the 3D shape of the target area from the 2D X-ray image. If X-ray images captured during surgery could be superimposed onto a pre-surgical 3D model (CT model) obtained from a CT scan, it would reduce the cognitive load of visualizing the 3D shape from the 2D image, letting surgeons focus more on the surgical procedure.

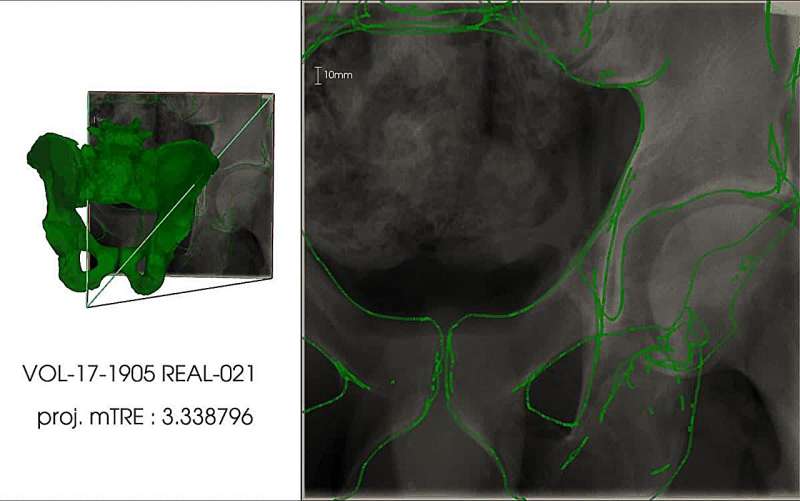

To achieve this goal, the superimposition of the X-ray image and CT model data should "work seamlessly, even when focusing on a specific body part (local image)," and the process should be "fully automated."

In this study, researchers met these criteria by employing a convolutional neural network capable of regressing the spatial coordinates of X-ray images. By combining a correspondence-based formulation with deep learning techniques, they achieved highly accurate superimposition for local X-ray images. The study was published in Medical Image Computing and Computer Assisted Intervention—MICCAI 2023.
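To illustrate why regressed spatial coordinates are useful for superimposition, here is a minimal sketch, not the researchers' implementation. It assumes that each X-ray pixel has been paired with a 3D coordinate in the CT model's frame, and it recovers a projection matrix from those 2D-3D correspondences with a plain direct linear transform (DLT). The article does not say which solver the study uses. The function names, camera parameters, and synthetic points below are all hypothetical.

```python
import numpy as np

def estimate_projection_dlt(points_3d, points_2d):
    """Estimate a 3x4 projection matrix from at least 6 2D-3D correspondences."""
    points_3d = np.asarray(points_3d, dtype=float)
    points_2d = np.asarray(points_2d, dtype=float)
    n = points_3d.shape[0]
    homog = np.hstack([points_3d, np.ones((n, 1))])  # homogeneous 3D points, (n, 4)
    rows = []
    for X, (u, v) in zip(homog, points_2d):
        # Each correspondence gives two linear constraints on the 12 entries of P.
        rows.append(np.concatenate([X, np.zeros(4), -u * X]))
        rows.append(np.concatenate([np.zeros(4), X, -v * X]))
    A = np.stack(rows)
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    return vt[-1].reshape(3, 4)

def project(P, points_3d):
    """Project 3D points to pixel coordinates with projection matrix P."""
    points_3d = np.asarray(points_3d, dtype=float)
    homog = np.hstack([points_3d, np.ones((points_3d.shape[0], 1))])
    proj = homog @ P.T
    return proj[:, :2] / proj[:, 2:3]

# Synthetic setup (hypothetical values): a pinhole camera viewing random 3D points.
rng = np.random.default_rng(0)
K = np.array([[1000.0, 0.0, 256.0],
              [0.0, 1000.0, 256.0],
              [0.0, 0.0, 1.0]])
angle = 0.2  # rotation about the y-axis, in radians
R = np.array([[np.cos(angle), 0.0, np.sin(angle)],
              [0.0, 1.0, 0.0],
              [-np.sin(angle), 0.0, np.cos(angle)]])
t = np.array([[10.0], [-5.0], [800.0]])
P_true = K @ np.hstack([R, t])

model_points = rng.uniform(-50.0, 50.0, size=(20, 3))  # stand-ins for regressed coordinates
pixels = project(P_true, model_points)                 # their 2D locations in the image

P_est = estimate_projection_dlt(model_points, pixels)
reproj_error = np.abs(project(P_est, model_points) - pixels).max()
print(f"max reprojection error (pixels): {reproj_error:.2e}")
```

With noise-free synthetic correspondences the recovered matrix reprojects the points almost exactly. Real network predictions are noisy, so a practical pipeline would typically add outlier rejection and a constraint to a rigid pose.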

The efficacy of this technique was verified using a dataset that included CT data and X-ray images of the pelvis. The superimposition of X-ray images and CT data yielded an error of 3.79 mm (with a standard deviation of 1.67 mm) for simulated X-ray images and 9.65 mm (with a standard deviation of 4.07 mm) for actual X-ray images.
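As a rough illustration of how a figure such as "3.79 mm (with a standard deviation of 1.67 mm)" can be computed, the sketch below takes the mean and standard deviation of point-to-point distances between estimated and reference positions. The article does not define the exact error metric, so the Euclidean landmark distance used here is an assumption. The coordinates are toy values, not study data.

```python
import numpy as np

def registration_error_stats(estimated_mm, reference_mm):
    """Return (mean, standard deviation) of Euclidean distances in millimetres."""
    estimated_mm = np.asarray(estimated_mm, dtype=float)
    reference_mm = np.asarray(reference_mm, dtype=float)
    distances = np.linalg.norm(estimated_mm - reference_mm, axis=1)
    # np.std defaults to the population standard deviation (ddof=0).
    return distances.mean(), distances.std()

# Toy landmark positions in millimetres (hypothetical).
reference = np.array([[0.0, 0.0, 0.0],
                      [10.0, 0.0, 0.0],
                      [0.0, 10.0, 0.0]])
offsets = np.array([[3.0, 4.0, 0.0],   # 5 mm away
                    [0.0, 0.0, 5.0],   # 5 mm away
                    [0.0, 0.0, 0.0]])  # exact match
estimated = reference + offsets

mean_err, std_err = registration_error_stats(estimated, reference)
print(f"error: {mean_err:.2f} mm (standard deviation {std_err:.2f} mm)")
```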

More information: Pragyan Shrestha et al, X-Ray to CT Rigid Registration Using Scene Coordinate Regression, Medical Image Computing and Computer Assisted Intervention—MICCAI 2023 (2023). DOI: 10.1007/978-3-031-43999-5_74

Provided by University of Tsukuba