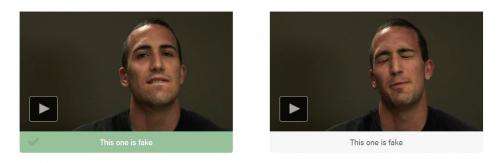

Computer science Ph.D. alumnus Jacob Whitehill demonstrates real and fake expressions of pain. While at the Jacobs School, Whitehill led an effort to use automated facial expression recognition to make robots better teachers and turned his face into a remote control in the process.

A joint study by researchers at the University of California San Diego and the University of Toronto has found that a computer system distinguishes real from faked expressions of pain more accurately than people can.

The work, titled "Automatic Decoding of Facial Movements Reveals Deceptive Pain Expressions," is published in the March 20 issue of Current Biology.

"The computer system managed to detect distinctive dynamic features of facial expressions that people missed," said Marian Bartlett, research professor at UC San Diego's Institute for Neural Computation and lead author of the study. "Human observers just aren't very good at telling real from faked expressions of pain."

Senior author Kang Lee, professor at the Dr. Eric Jackman Institute of Child Study at the University of Toronto, said, "Humans can simulate facial expressions and fake emotions well enough to deceive most observers. The computer's pattern-recognition abilities prove better at telling whether pain is real or faked."

The research team found that humans could not discriminate real from faked expressions of pain better than random chance and, even after training, improved their accuracy only to a modest 55 percent. The computer system attained 85 percent accuracy.

"In highly social species such as humans," said Lee, "faces have evolved to convey rich information, including expressions of emotion and pain. And, because of the way our brains are built, people can simulate emotions they're not actually experiencing – so successfully that they fool other people. The computer is much better at spotting the subtle differences between involuntary and voluntary facial movements."

"By revealing the dynamics of facial action through machine vision systems," said Bartlett, "our approach has the potential to elucidate 'behavioral fingerprints' of the neural-control systems involved in emotional signaling."

The single most predictive feature of falsified expressions, the study shows, is the mouth, and how and when it opens. Fakers' mouths open with less variation and too regularly.
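To make the idea of "too regular" concrete, here is a minimal sketch of one way regularity could be quantified: the coefficient of variation of the intervals between successive mouth openings. This is only an illustration, not the study's method. The function names, the choice of statistic, and the onset times are all hypothetical.

```python
from statistics import mean, pstdev


def opening_intervals(onset_times):
    """Return the gaps (in seconds) between successive mouth-opening onsets."""
    return [later - earlier for earlier, later in zip(onset_times, onset_times[1:])]


def coefficient_of_variation(values):
    """Return population std / mean; values near 0 indicate very regular timing."""
    average = mean(values)
    if average == 0:
        raise ValueError("mean of values is zero; coefficient of variation undefined")
    return pstdev(values) / average


# Hypothetical onset times in seconds (invented for illustration, not study data).
regular_onsets = [0.0, 1.0, 2.0, 3.0, 4.0]    # evenly spaced openings
irregular_onsets = [0.0, 0.4, 2.1, 2.6, 4.0]  # unevenly spaced openings

regular_cv = coefficient_of_variation(opening_intervals(regular_onsets))
irregular_cv = coefficient_of_variation(opening_intervals(irregular_onsets))

print(f"regular CV:   {regular_cv:.3f}")
print(f"irregular CV: {irregular_cv:.3f}")
```

Under this assumed measure, a lower value means more evenly timed mouth openings, which is the pattern the study associates with faked expressions.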

"Further investigations," said the researchers, "will explore whether over-regularity is a general feature of fake expressions."

In addition to detecting pain malingering, the computer-vision system might be used to detect other real-world deceptive actions in the realms of homeland security, psychopathology, job screening, medicine, and law, said Bartlett.

"As with causes of pain, these scenarios also generate strong emotions, along with attempts to minimize, mask, and fake such emotions, which may involve 'dual control' of the face," she said. "In addition, our computer-vision system can be applied to detect states in which the human face may provide important clues as to health, physiology, emotion, or thought, such as drivers' expressions of sleepiness, students' expressions of attention and comprehension of lectures, or responses to treatment of affective disorders."

More information: Marian Stewart Bartlett, Gwen C. Littlewort, Mark G. Frank, Kang Lee. "Automatic Decoding of Facial Movements Reveals Deceptive Pain Expressions." Current Biology. Received: December 7, 2011; Received in revised form: December 6, 2013; Accepted: February 5, 2014; Published Online: March 20, 2014. DOI: dx.doi.org/10.1016/j.cub.2014.02.009

Journal information: Current Biology, Pain

Provided by University of California - San Diego