Understanding how the brain processes the deluge of information about the outside world has been a daunting challenge. In a recent study in the journal Cell Reports, Michael Higley and Jessica Cardin of Yale's Department of Neuroscience provide some clues to how cells in the visual cortex direct sensory information to different targets throughout the brain.

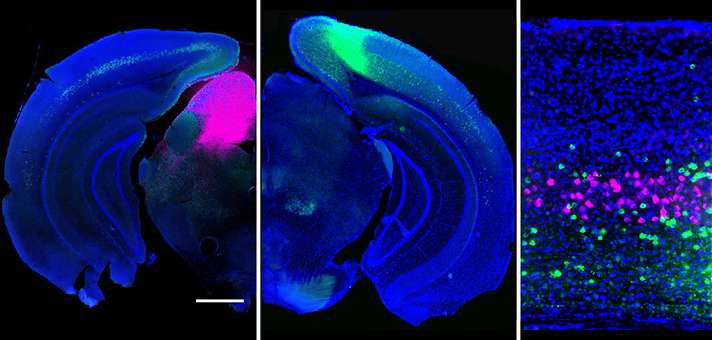

By imaging activity in the mouse brain, the researchers illustrated how neuron types (fluorescently tagged magenta and green) that project to different areas of the brain extract distinct features from a visual scene.

"These results demonstrate how the brain processes multiple sensory inputs in parallel," Higley said. He noted that the findings help reveal the normal flow of information in the brain, opening new avenues for understanding how perturbations of these systems might contribute to abnormal behavior.

More information: Gyorgy Lur et al. Projection-Specific Visual Feature Encoding by Layer 5 Cortical Subnetworks, Cell Reports (2016). DOI: 10.1016/j.celrep.2016.02.050

Journal information: Cell Reports

Provided by Yale University