May 1, 2014 report

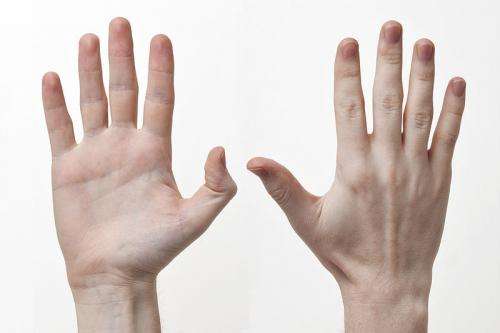

Study shows humans process visual information near our hands differently

(Medical Xpress)—A trio of researchers, one from The Australian National University and two from the University of Toronto in Canada, has published a paper in the journal Psychonomic Bulletin & Review. The paper presents evidence that humans process visual information near their hands differently from visual information elsewhere. In it, Stephanie Goodhew, Nicole Fogel and Jay Pratt describe a lab study with volunteer participants that strengthens the notion that the space near our hands is special.

Anecdotal evidence has suggested that we humans see things near our hands differently than we see everything else; our difficulty threading a needle is but one example. Explaining why this happens has been difficult, but the researchers in this new effort might just have done it.

Scientists have proposed various theories to explain these differences in visual perception. One suggests that our brains are hard-wired to process things differently when we pay close attention to them. Another suggests that we have different kinds of brain cells that respond differently in different situations. One kind, called P cells, is thought to be better at processing fine spatial detail. Another kind, known as M cells, is not as good at fine detail but reacts much faster to changes in what is seen, and it is also relatively insensitive to color. It's this second theory that the researchers in this latest effort set out to test.

To test whether vision near the hands relies more heavily on M cells, the researchers asked volunteers to sit at a computer monitor and watch as images were displayed. On the screen were two objects, each set against a single-color background. As the volunteers watched, the objects moved behind a rectangle. When they reemerged, some had changed: some had their background color changed, others their shape, and others both. The researchers asked the volunteers whether the objects had changed, and found that the volunteers responded more slowly if the background color had been changed. The kicker was that this slowdown occurred only when the volunteers' hands were away from the computer screen. When the volunteers moved their hands within view of the screen, the slowdown went away.

The researchers say this simple experiment provides evidence that the brain relies on the theorized M cells when processing information near the hands. The effect is not simply a result of looking at something up close or concentrating hard. Instead, it appears only when the brain registers that the hands are near what is being viewed.

More information: The nature of altered vision near the hands: Evidence for the magnocellular enhancement account from object correspondence through occlusion, Psychonomic Bulletin & Review, March 2014. link.springer.com/article/10.3758%2Fs13423-014-0622-5

Abstract

A growing body of evidence indicates that the perception of visual stimuli is altered when they occur near the observer's hands, relative to other locations in space (see Brockmole, Davoli, Abrams, & Witt, 2013, for a review). Several accounts have been offered to explain the pattern of performance across different tasks. These have typically focused on attentional explanations (attentional prioritization and detailed attentional evaluation of stimuli in near-hand space), but more recently, it has been suggested that near-hand space enjoys enhanced magnocellular (M) input. Here we differentiate between the attentional and M-cell accounts, via a task that probes the roles of position consistency and color consistency in determining dynamic object correspondence through occlusion. We found that placing the hands near the visual display made observers use only position consistency, and not color, in determining object correspondence through occlusion, which is consistent with the fact that M cells are relatively insensitive to color. In contrast, placing observers' hands far from the stimuli allowed both color and position to contribute. This provides evidence in favor of the M-cell enhancement account of altered vision near the hands.

© 2014 Phys.org