What the pupils tell us about language

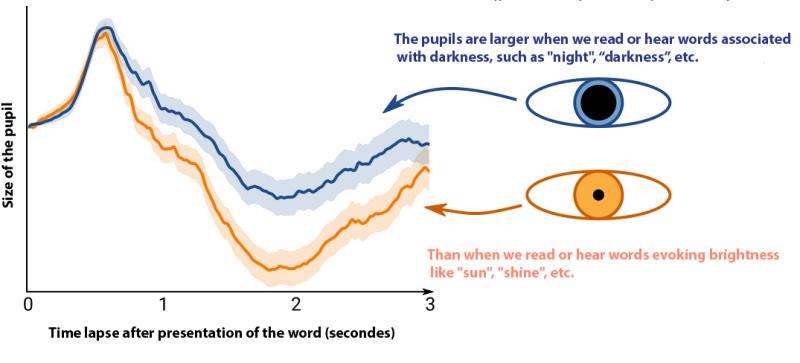

The meaning of a word is enough to trigger a reaction in our pupils: when we read or hear a word whose meaning is associated with luminosity ("sun," "shine," etc.), our pupils contract as they would if they were actually exposed to brighter light. The opposite occurs with words associated with darkness ("night," "gloom," etc.): the pupils dilate. These results, published on 14 June 2017 in Psychological Science by researchers from the Laboratoire de psychologie cognitive (CNRS/AMU), the Laboratoire parole et langage (CNRS/AMU) and the University of Groningen (Netherlands), open up a new avenue for better understanding how our brain processes language.

The researchers demonstrate here that pupil size depends not only on the luminosity of the objects observed, but also on the brightness conveyed by words that are read or heard. They suggest that our brain automatically creates mental images of these words, such as a bright ball in the sky for the word "sun." This mental image is thought to be the reason why the pupils become smaller, as if we really did have the sun in our eyes.

This new study raises important questions. Are these mental images necessary to understand the meaning of words? Or, on the contrary, are they merely an indirect consequence of language processing in our brain, as though our nervous system were preparing, as a reflex, for the situation evoked by the heard or read word? To answer these questions, the researchers plan to extend their experiments by varying the language parameters, for example by testing their hypothesis in other languages.

More information: Embodiment as preparation: Pupillary responses to words that convey a sense of brightness or darkness. PeerJ Preprints 4:e1795v1 DOI: 10.7287/peerj.preprints.1795v1