Want to listen better? Lend a right ear

Listening is a complicated task. It requires sensitive hearing and the ability to process information into cohesive meaning. Add everyday background noise and constant interruptions by other people, and comprehending what is heard becomes that much more difficult.

Audiology researchers at Auburn University in Alabama have found that in such demanding environments, both children and adults depend more on their right ear for processing and retaining what they hear.

Danielle Sacchinelli will present this research with her colleagues at the 174th Meeting of the Acoustical Society of America, which will be held in New Orleans, Louisiana, Dec. 4-8.

"The more we know about listening in demanding environments, and listening effort in general, the better diagnostic tools, auditory management (including hearing aids) and auditory training will become," Sacchinelli said.

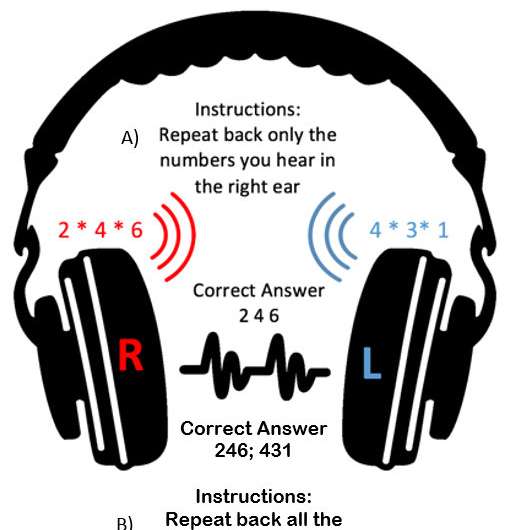

The research team's work is based on dichotic listening tests, used to diagnose, among other conditions, auditory processing disorders in which the brain has difficulty processing what is heard.

In a standard dichotic test, listeners receive different auditory inputs delivered to each ear simultaneously. The items are usually sentences (e.g., "She wore the red dress"), words or digits. Listeners either pay attention to the items delivered in one ear while dismissing the items in the other (i.e., separation), or are required to repeat all items heard (i.e., integration).

According to the researchers, children understand and remember what is being said much better when they listen with their right ear.

Sounds entering the right ear are processed by the left side of the brain, which controls speech, language development, and portions of memory. Each ear hears separate pieces of information, which are then combined during processing throughout the auditory system.

However, young children's auditory systems cannot sort and separate the simultaneous information from both ears. As a result, they rely heavily on their right ear to capture sounds and language because the pathway is more efficient.

What is less understood is whether this right-ear dominance is maintained through adulthood. To find out, Sacchinelli's research team asked 41 participants ages 19-28 to complete both dichotic separation and integration listening tasks.

With each subsequent test, the researchers increased the number of items by one. They found no significant differences between left and right ear performance at or below an individual's simple memory capacity. However, when the item lists went above an individual's memory span, participants' performance improved by an average of 8 percent (and by up to 40 percent for some individuals) when they focused on their right ear.

"Conventional research shows that right-ear advantage diminishes around age 13, but our results indicate this is related to the demand of the task. Traditional tests include four to six pieces of information," said Aurora Weaver, assistant professor at Auburn University and member of the research team. "As we age, we have better control of our attention for processing information as a result of maturation and our experience."

In essence, ear differences in processing abilities are lost on tests using four items because our auditory system can handle more information.

"Cognitive skills, of course, are subject to decline with advanced aging, disease, or trauma," Weaver said. "Therefore, we need to better understand the impact of cognitive demands on listening."

More information: Abstract 3aPPa3, "Does the right ear advantage persist in mature auditory systems when cognitive demand for processing increases?" by Danielle M. Sacchinelli, Dec. 6, 2017, in Studios Foyer (poster sessions) in the New Orleans Marriott. asa2017fall.abstractcentral.com/s/u/J4DDi4sip_s