Artificial intelligence tool could increase patient health literacy, study shows

A federal rule that requires health care providers to offer patients free, convenient and secure electronic access to their personal medical records went into effect earlier this year. However, providing patients with access to clinician notes, test results, progress documentation and other records doesn't automatically equip them to understand those records or make appropriate health decisions based on what they read. "Medicalese" can trip up even the most highly educated layperson, and studies have shown that low health literacy is associated with poor health outcomes.

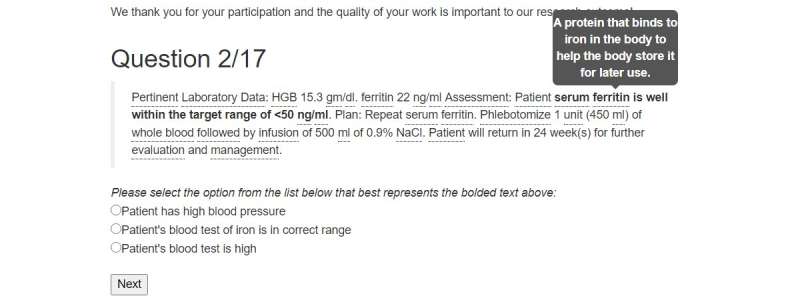

University of Notre Dame researcher John Lalor, an assistant professor of information technology, analytics and operations at the Mendoza College of Business, is part of a team working on a web-based natural language processing system that could increase the health literacy of patients who access their records through a patient portal. NoteAid, a project based at the University of Massachusetts Amherst, conveniently translates medical jargon for health care consumers.

Lalor worked with the team to develop ComprehENotes, a tool designed specifically to evaluate comprehension of electronic health record (EHR) notes. They also recruited crowdsourced workers to compare an active intervention like NoteAid, which automatically defines medical terms, with a passive resource such as MedlinePlus, which is simply available on the web. That study found that participants who used NoteAid scored significantly higher on health literacy than those who had no resource and those who had MedlinePlus access.

"In both of those studies, we used crowdsourced workers from Amazon Mechanical Turk and found that the demographics of the participants didn't overlap well with demographic groups typically associated with low health literacy—for example, older, less educated people," Lalor said. "In this study, we wanted to see if the definition tool, NoteAid, was effective for actual patients at a hospital."

For their latest study, published in the May issue of the Journal of Medical Internet Research, the team recruited 174 people waiting for their appointments at a community hospital in Massachusetts.

Trial participants were shown either a NoteAid version of the ComprehENotes test, with medical jargon definitions that were viewable by hovering the mouse over the text, or a version without any definitions.
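To make the hover-definition idea concrete, here is a minimal Python sketch of dictionary-based jargon annotation. It is an illustration only, not NoteAid's actual implementation, which the article does not describe: the `JARGON` dictionary, the `annotate` function and the `jargon` CSS class are all hypothetical names, and the use of an HTML `title` attribute to produce the hover text is an assumption.

```python
import html
import re

# Hypothetical mini-dictionary for illustration; NoteAid's real dictionary
# is not described in the article.
JARGON = {
    "hypertension": "high blood pressure",
    "myocardial infarction": "heart attack",
    "edema": "swelling caused by fluid buildup",
}


def annotate(note, dictionary=JARGON):
    """Return HTML in which each known jargon term is wrapped in a <span>
    whose title attribute (shown by browsers on hover) holds a definition."""
    # Try longer terms first so a multi-word term wins over a shorter overlap.
    terms = sorted(dictionary, key=len, reverse=True)
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(t) for t in terms) + r")\b",
        re.IGNORECASE,
    )
    lookup = {term.lower(): definition for term, definition in dictionary.items()}

    pieces = []
    last = 0
    for match in pattern.finditer(note):
        # Escape the plain text between matches so the note cannot inject HTML.
        pieces.append(html.escape(note[last:match.start()]))
        definition = lookup[match.group(0).lower()]
        pieces.append(
            '<span class="jargon" title="{}">{}</span>'.format(
                html.escape(definition), html.escape(match.group(0))
            )
        )
        last = match.end()
    pieces.append(html.escape(note[last:]))
    return "".join(pieces)


if __name__ == "__main__":
    print(annotate("Patient has hypertension and mild edema."))
```

A production system would presumably need far more than exact string matching, such as handling abbreviations, misspellings and context-dependent meanings, but the sketch shows the basic contrast with a passive resource: the definitions are attached to the note itself rather than looked up separately.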

"We hypothesized that the NoteAid tool would, in fact, improve performance on our comprehension instrument, which it did," Lalor said. Also, they found that the average score for hospital participants was significantly lower than the average score for the crowdsourced participants, which was consistent with the lower education levels in the community hospital sample and the overall impact of education level on test results.

These findings, Lalor explained, are significant for a few reasons. "First, by showing that NoteAid is effective for local patients we can generalize about its usefulness beyond crowdsourced workers to actual patients," he said. "Same for our test of EHR note comprehension. Both of these are relevant now with the recent laws mandating patient access to their EHRs, including notes."

Now that they have evidence that a natural language processing tool can significantly improve patient health literacy, Lalor says the team is working to evaluate and refine the dictionary the tool uses, from both a physician's standpoint regarding accuracy and a patient's standpoint in terms of reading level. Also, he noted, "The last piece is kind of a higher level question of what should even be included in the dictionary as a jargon term versus what is just a rare term, or something you might not understand, but is not critical to your note."

Defining every word in a medical record could potentially overwhelm the patient. "If you know that they just need particular terms, they might be more likely to read them and internalize them and have a better understanding of the note," he said.

At the undergraduate level, Lalor teaches an unstructured data analytics course. He also teaches in Mendoza's Master of Science in Business Analytics program. His research interests are in machine learning and natural language processing, specifically regarding model evaluation, quantifying uncertainty, model interpretability and applications in biomedical informatics.

"Evaluating the Effectiveness of NoteAid in a Community Hospital Setting: Randomized Trial of Electronic Health Record Note Comprehension Interventions with Patients" was co-authored by Wen Hu, Matthew Tran, Kathleen Mazor and Hong Yu of the University of Massachusetts, and Hao Wu of Vanderbilt University.

More information: John P Lalor et al, Evaluating the Effectiveness of NoteAid in a Community Hospital Setting: Randomized Trial of Electronic Health Record Note Comprehension Interventions With Patients, Journal of Medical Internet Research (2021). DOI: 10.2196/26354