Brain games reveal clues about how the mind works

Scientists are using supercomputers and data from the game Ebb and Flow to train deep learning models that mimic the human behavior of "task-switching," shifting attention from one task to another.

This basic research is important for helping scientists understand cognitive control, which encompasses the basic mental processes that allow one to focus on the task at hand, but also flexibly disengage from the task if the need arises. These abilities are taxed by the game Ebb and Flow that the researchers studied.

The research may also inform the understanding of diseases in which patients exhibit deficits in cognitive control, such as bipolar disorder and schizophrenia.

During the game, the player uses the arrow keys on the keyboard to indicate the direction in which green leaves are pointing and the direction in which red leaves are moving, as green leaves alternate with red ones. Mastery of this task-switching game is supposed to train mental flexibility, as the player must repeatedly shift focus from one task to the other.

"We developed a new way of modeling these data that imposes fewer assumptions on how the brain goes about doing a particular task," said Paul Jaffe, a postdoctoral fellow working with Professor Russell Poldrack, Department of Psychology, Stanford University.

Jaffe and Poldrack are co-authors of a study that developed new and more realistic models of task-switching, published in Nature Human Behaviour.

Existing models of cognitive processing assemble simple components in a 'top down,' rigid fashion.

"They make a lot of assumptions about how the mind does the task. Or they have other limitations, such as they can't actually be fitted to data from participants," Jaffe said.

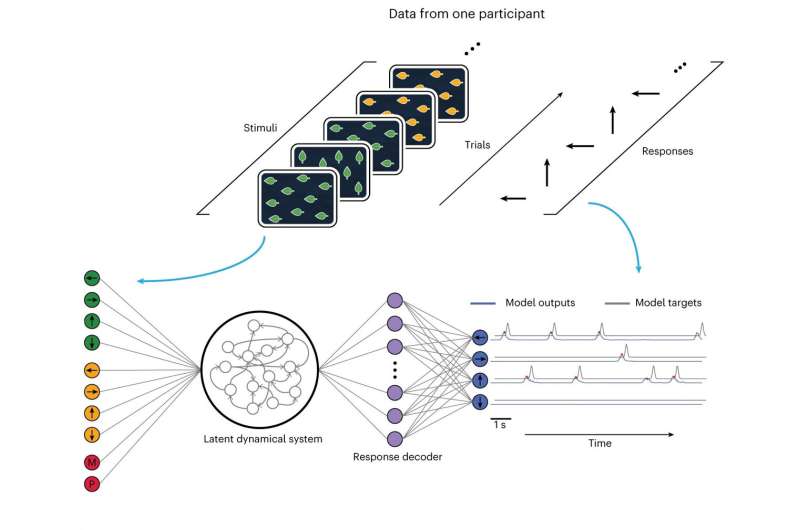

Jaffe and colleagues developed a framework for modeling human behavior on cognitive tasks called task-DyVA. It uses dynamical neural networks that take in the task stimuli as inputs and generate task responses as outputs, much as people do when engaging with a task.
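As a rough illustration of what "stimuli in, responses out" means for a dynamical network, the sketch below steps a small latent state forward one stimulus at a time and reads out a response at each step. It is not the task-DyVA implementation: the update rule, the random weights, and the names `simulate`, `latent_dim`, `stim_dim`, and `resp_dim` are all assumptions chosen for illustration.

```python
import math
import random

def simulate(stimuli, A, B, C):
    """Run a discrete-time latent dynamical system over a stimulus sequence.

    Update (assumed form): x <- tanh(A x + B u), then read out y = C x.
    """
    n = len(A)
    x = [0.0] * n  # latent state starts at rest
    outputs = []
    for u in stimuli:
        # Next state depends on the previous state and the current stimulus.
        x = [
            math.tanh(
                sum(A[i][j] * x[j] for j in range(n))
                + sum(B[i][k] * u[k] for k in range(len(u)))
            )
            for i in range(n)
        ]
        # Linear readout: one value per possible response (e.g. arrow key).
        outputs.append([sum(row[i] * x[i] for i in range(n)) for row in C])
    return outputs

def random_matrix(rows, cols, rng, scale=0.5):
    """Untrained placeholder weights; a fitted model would learn these."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
latent_dim, stim_dim, resp_dim = 8, 3, 4  # hypothetical sizes
A = random_matrix(latent_dim, latent_dim, rng)
B = random_matrix(latent_dim, stim_dim, rng)
C = random_matrix(resp_dim, latent_dim, rng)

# Made-up stimulus vectors: five steps of one cue, then five of another.
stimuli = [[1.0, 0.0, 1.0]] * 5 + [[0.0, 1.0, 1.0]] * 5
responses = simulate(stimuli, A, B, C)
```

Fitting such a model means adjusting the weights until the responses resemble a participant's recorded responses to the same stimuli.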

"The task-DyVA framework allowed us to not only fit the vast amounts of Ebb and Flow data available to us, but also to model individual differences of participants," Jaffe said. "We could fit one model for each person's data, and then look at how the models differed. We can then look inside 'the brain' of the model—a neural network—and understand how it's doing the tasks."

The team adapted machine learning algorithms called variational autoencoders, a method developed to handle inference and learning with difficult probabilistic models.
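For readers who want the underlying idea, a standard variational autoencoder is trained by maximizing a lower bound on the log-likelihood of the data. The expression below is the generic textbook form, with $x$ the observed data, $z$ the latent variables, $q_\phi$ the encoder, and $p_\theta$ the decoder. The exact objective used for task-DyVA may differ, so treat this as background rather than the authors' formulation.

```latex
\log p_\theta(x) \;\ge\;
\mathbb{E}_{q_\phi(z \mid x)}\!\left[\log p_\theta(x \mid z)\right]
\;-\; D_{\mathrm{KL}}\!\left(q_\phi(z \mid x)\,\|\,p(z)\right)
```

The first term rewards latent variables that reconstruct the data well, and the second keeps the encoder's distribution close to the prior $p(z)$.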

The research team was awarded allocations on the Texas Advanced Computing Center's (TACC) Maverick2 supercomputer, a system dedicated to machine learning workloads. Its graphics processing unit (GPU)-powered frameworks can take advantage of 24 nodes with four NVIDIA GTX 1080 Ti GPUs each, as well as three nodes with two NVIDIA P100 GPUs each.

"TACC was essential for accomplishing this work because of the GPUs available, hardware that is essentially optimized for calculating many matrix multiplications very quickly, which is an operation used frequently in deep learning models like those we used in this study," Jaffe said.

Russell Poldrack added that "GPUs can greatly accelerate the fitting and testing of machine learning models. The allocation on Maverick2 allowed us to push this work forward much more quickly than we could have without this resource."

Using the Maverick2 supercomputing resources and existing de-identified data sets from 140 Ebb and Flow participants, ages 20 to 89, the researchers developed their modeling framework and then analyzed the fitted models to ask how the brain does the task.

"We looked inside these models to try and understand how they're doing the tasks. One thing we found is that the two tasks in this broader task-switching task are represented in different regions of the model's latent space, an abstract representation of the variables involved in this particular task. We found two different regions of the model's 'brain' doing each task," Jaffe said.

This finding could explain why there is a "switch cost"—slowing in responses when people switch tasks—since it takes time for activity to go from one brain region to the other. What's more, the model could explain why it's advantageous to the brain to split up these tasks, versus just having a centralized control. This supports an idea in a 2022 study by scientists Musslick and Cohen.
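A switch cost is typically measured as the difference between the average response time on trials where the task changed and on trials where it repeated. The sketch below shows that arithmetic on a handful of made-up trials. The task labels, the response times, and the function name `switch_cost` are illustrative assumptions, not data or code from the study.

```python
from statistics import mean

def switch_cost(trials):
    """Mean RT on task-switch trials minus mean RT on task-repeat trials.

    trials: list of (task_label, response_time_ms) in presentation order.
    The first trial has no predecessor, so it is not counted.
    """
    switch_rts, repeat_rts = [], []
    for (prev_task, _), (task, rt) in zip(trials, trials[1:]):
        if task != prev_task:
            switch_rts.append(rt)
        else:
            repeat_rts.append(rt)
    return mean(switch_rts) - mean(repeat_rts)

# Made-up example trials (not from the Ebb and Flow data set).
trials = [
    ("pointing", 520),
    ("pointing", 500),
    ("moving", 610),
    ("moving", 540),
    ("pointing", 630),
    ("pointing", 510),
]
cost = switch_cost(trials)  # positive when switching slows responses
```

A positive value means responses were slower right after a switch, which is the pattern described above.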

"We found that by separating the tasks into these two different brain regions, that actually makes the model more robust in that it's harder for noise to disrupt the task in each of these brain regions. By keeping things separated, it allows the brain to do each task very well, without getting confused by the signals from the other task," Jaffe added.

Going forward, the science team is looking to adapt the model to other tasks and even train it to do multiple tasks. The aim is to develop new models that can explain how people generalize from limited experience and perform the vast array of complicated tasks encountered in daily life.

For instance, the model could be fit jointly to behavioral data and to neural data from fMRI brain scans, a technique employed by the Poldrack Lab. "Then we can start to understand how the brain is generating these complex behaviors. That's one of the longer-term goals we have for the task-DyVA framework," Jaffe said.

The Poldrack Lab is currently processing a large number of openly shared fMRI datasets using a Pathways Allocation on TACC's Frontera supercomputer.

Said Jaffe, "To fit the complex models that will be needed to explain the brain and explain behavior, one needs really powerful computing systems, and in particular, GPUs. Supercomputing resources like those at TACC are essential for doing this important work."

More information: Paul I. Jaffe et al., Modelling human behaviour in cognitive tasks with latent dynamical systems, Nature Human Behaviour (2023). DOI: 10.1038/s41562-022-01510-8