Medical writing: Caution warranted if using ChatGPT

When it comes to health care, it's best to ask a professional. This oft-repeated adage also applies to scientists who might be tempted to use the ChatGPT artificial intelligence model for medical writing.

Researchers from CHU Sainte-Justine and the Montreal Children's Hospital recently posed 20 medical questions to ChatGPT. The chatbot provided answers of limited quality, including factual errors and fabricated references, according to the results of the study, published in Mayo Clinic Proceedings: Digital Health.

"These results are alarming, given that trust is a pillar of scientific communication. ChatGPT users should pay particular attention to the references provided before integrating them into medical manuscripts," says Dr. Jocelyn Gravel, lead author of the study and emergency physician at CHU Sainte-Justine.

Striking findings

The researchers drew their questions from existing studies and asked ChatGPT to support its answers with references. They then asked the authors of the articles from which the questions were taken to rate the software's answers on a scale from 0 to 100%.

Of the 20 authors, 17 agreed to review ChatGPT's answers. They judged them to be of questionable quality (median score of 60%) and found five major and seven minor factual errors. For example, the software suggested administering an anti-inflammatory drug by injection, when it should be taken orally. ChatGPT also overestimated the global burden of mortality associated with Shigella infections by a factor of ten.

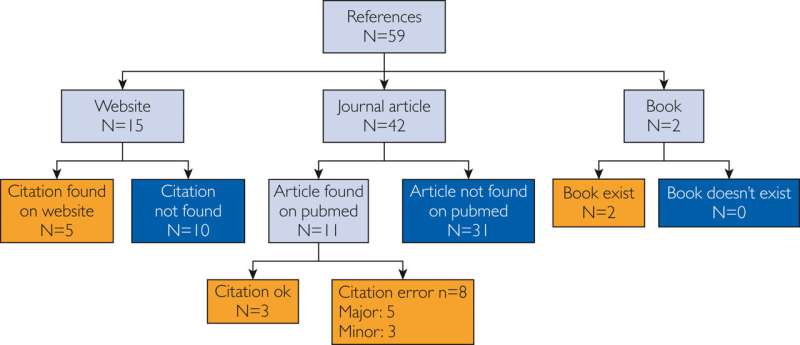

Of the references provided, 69% were fabricated, yet looked real. Most of the false citations (95%) used the names of authors who had already published articles on a related subject, or came from recognized organizations such as the Centers for Disease Control and Prevention or the Food and Drug Administration. The references all bore a title related to the subject of the question and used the names of known journals or websites.

Even among the 18 real references, eight contained errors.

ChatGPT explains

When asked about the accuracy of the references provided, ChatGPT gave varying answers. In one case, it claimed, "References are available in Pubmed," and provided a web link. This link referred to other publications unrelated to the question. At another point, the software replied, "I strive to provide the most accurate and up-to-date information available to me, but errors or inaccuracies can occur."

"The importance of proper referencing in science is undeniable. The quality and breadth of the references provided in authentic studies demonstrate that the researchers have performed a complete literature review and are knowledgeable about the topic. This process enables the integration of findings in the context of previous work, a fundamental aspect of medical research advancement. Failing to provide references is one thing but creating fake references would be considered fraudulent for researchers," says Dr. Esli Osmanlliu, emergency physician at the Montreal Children's Hospital and scientist with the Child Health and Human Development Program at the Research Institute of the McGill University Health Centre.

"Researchers using ChatGPT may be misled by false information because clear, seemingly coherent and stylistically appealing references can conceal poor content quality," adds Dr. Osmanlliu.

This is the first study to assess the quality and accuracy of references provided by ChatGPT, the researchers point out.

More information: Jocelyn Gravel et al, Learning to Fake It: Limited Responses and Fabricated References Provided by ChatGPT for Medical Questions, Mayo Clinic Proceedings: Digital Health (2023). DOI: 10.1016/j.mcpdig.2023.05.004