Exploring the brain basis of concepts by using a new type of neural network

The influence of language on human thinking could be stronger than previously assumed. This is the result of a new study by Professor Friedemann Pulvermüller and his team from the Brain Language Laboratory at Freie Universität Berlin.

In this study, the researchers examined the modeling of human concept formation and the impact of language mechanisms on the emergence of concepts. The results were published in the journal Progress in Neurobiology under the title "Neurobiological Mechanisms for Language, Symbols, and Concepts: Clues from Brain-Constrained Deep Neural Networks."

Children can learn one or more languages with little effort. However, the cognitive activity involved should not be underestimated. Not only do language learners have to learn how to pronounce words, they must also learn how to interlink word forms with content—with concepts such as "coffee," "drinking," or "beauty." But what are the actual mechanisms at work in the network of billions of nerve cells within our brains? And might the learning of some concepts strictly require the presence of language?

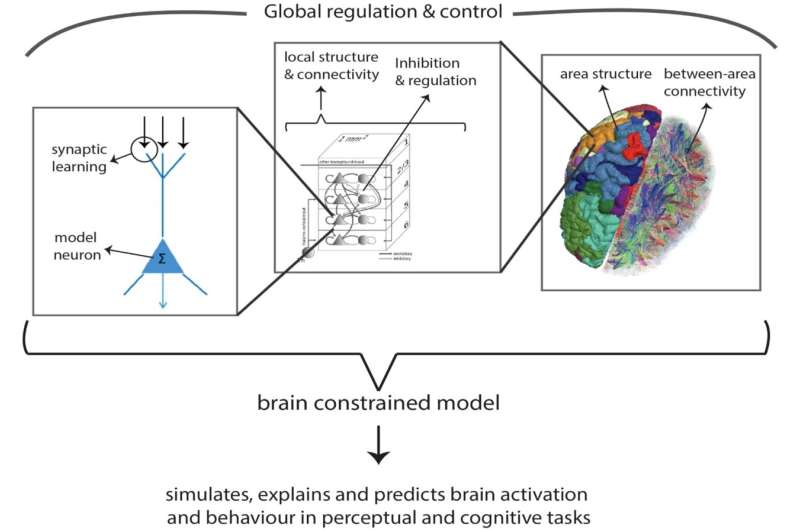

One method to seek answers to these questions uses computer models that simulate nerve cell activity. However, classic artificial neural networks are not sufficient because the structure and function of these networks are often dissimilar to the anatomy and physiology of real brains. Therefore, Pulvermüller and his research team are developing a new type of neural network that more closely approximates real brain networks.

These networks are not only subdivided into areas that resemble those of the brain but also mimic the human cerebral cortex in how these areas are connected. The areas consist of groups of artificial "neurons" that communicate with each other via local connections. When two of these "neurons" are activated together, the connection between them is strengthened; when they are activated independently of each other, it is weakened.

This learning principle, known as Hebbian learning, has been well studied in biological systems. Using these brain-like or "brain-constrained" networks, researchers can test neurobiological theories of language and cognition and explain cognitive phenomena.
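The strengthening and weakening rule described above can be illustrated with a minimal sketch. This is not the model used in the study: the function name `hebbian_update`, the learning rate `lr`, the binary activity values, and the clipping of weights to the range 0 to 1 are all assumptions chosen for illustration.

```python
def hebbian_update(weights, activity, lr=0.1):
    """Apply one illustrative Hebbian step (hypothetical helper, not the study's code).

    weights  -- square list of lists; weights[i][j] is the link from unit i to unit j
    activity -- list of 0/1 values, one per unit
    lr       -- assumed learning rate
    """
    n = len(activity)
    updated = [row[:] for row in weights]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue  # no self-connections in this sketch
            if activity[i] and activity[j]:
                change = lr       # both units active together: strengthen
            elif activity[i] or activity[j]:
                change = -lr      # only one of the two active: weaken
            else:
                change = 0.0      # both silent: leave the link unchanged
            # Keep weights in an assumed range of 0 to 1.
            updated[i][j] = min(1.0, max(0.0, weights[i][j] + change))
    return updated


# Three units that start with equal links of 0.5; units 0 and 1 fire together.
w = [[0.0, 0.5, 0.5],
     [0.5, 0.0, 0.5],
     [0.5, 0.5, 0.0]]
for _ in range(3):
    w = hebbian_update(w, [1, 1, 0])
```

After these three steps, the link between units 0 and 1 has grown, while the links from either of them to the silent unit 2 have shrunk. Real brain-constrained networks use far richer neuron models and area structure than this toy rule.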

For example, these networks can be used to simulate how objects are perceived and the learning processes that follow. In more advanced studies, they can provide information on the formation of conceptual representations and the influence of language on them. Similar to humans, the networks can quickly learn how words correspond to specific objects or actions.

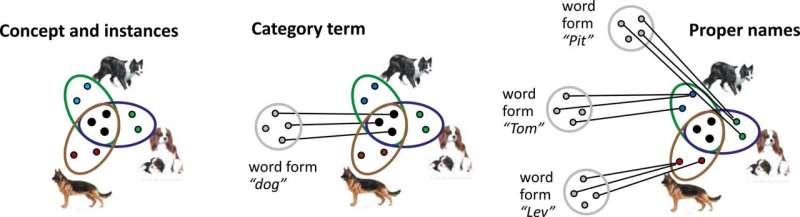

Of particular interest to the researchers is the discovery that highly interconnected nerve cell populations emerge within the brain-like networks. These populations function as the biological basis of concepts and are active not only for specific objects but also for entire classes of similar objects and entities, such as "robots," "cats," or "sunrises."

The networks activate the relevant conceptual "nerve cell circuitry" even when confronted with new, previously unobserved objects. Concept formation was even more efficient and faster when linguistic expressions were learned together with objects or actions. "These results indicate that language at the biological level can support and accelerate concept formation," says Pulvermüller.

The influence of language on the formation of abstract concepts such as "beauty" or "peace" is particularly pronounced. Whereas a concrete concept such as that of a cat has very similar real-world correlates (i.e., different cats varying in size, color, fur texture, etc.), those of abstract concepts are extremely variable.

An eye, a sculpture, and a sunset may all be perceived as "beautiful" although they do not have any visual characteristics or features in common. In the simulations, the network could build a neuronal representation of an abstract concept only if the same linguistic label accompanied the variable instances of that concept.
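Why a shared label matters can be shown with a deliberately simplified toy, which stands in for the study's simulations rather than reproducing them. It assumes that units active in the same learning episode become linked, and then asks which units end up in one connected group. The function `coactivation_clusters` and all unit names are hypothetical.

```python
from itertools import combinations


def coactivation_clusters(episodes):
    """Group units linked, directly or indirectly, by co-activation (toy sketch)."""
    parent = {}

    def find(unit):
        # Union-find lookup with path compression.
        parent.setdefault(unit, unit)
        while parent[unit] != unit:
            parent[unit] = parent[parent[unit]]
            unit = parent[unit]
        return unit

    for units in episodes:
        for unit in units:
            find(unit)  # register every unit, even if it appears alone
        for a, b in combinations(units, 2):
            parent[find(a)] = find(b)  # co-active units join one group

    clusters = {}
    for unit in list(parent):
        clusters.setdefault(find(unit), set()).add(unit)
    return list(clusters.values())


# Hypothetical feature units for three instances with no features in common.
instances = [
    ("eye_shape", "eye_color"),
    ("sculpture_form", "sculpture_material"),
    ("sunset_glow", "sunset_horizon"),
]

# Without a label, each instance is experienced on its own.
without_label = coactivation_clusters(instances)

# With a label, the same word unit is active in every episode.
with_label = coactivation_clusters(
    [units + ("word_beautiful",) for units in instances]
)
```

Without the label, the three instances stay in three separate groups. With the shared word unit, all seven units fall into a single group, which is the toy analogue of one representation spanning otherwise unrelated instances.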

These new research findings suggest that the influence of language on our thinking is considerably stronger than previously thought. Although several linguists since Wilhelm von Humboldt have pointed out that thought and language are interrelated, the idea that language significantly influences our thinking has been rather unpopular, or at least highly controversial, among researchers.

The new results with brain-constrained networks now demonstrate that language has a strong influence on concept formation in simulation experiments. They also suggest the existence of a neurobiological mechanism for the causal influence of language on thought.

More information: Friedemann Pulvermüller, Neurobiological mechanisms for language, symbols and concepts: Clues from brain-constrained deep neural networks, Progress in Neurobiology (2023). DOI: 10.1016/j.pneurobio.2023.102511