This article has been reviewed according to Science X's editorial process and policies. Editors have highlighted the following attributes while ensuring the content's credibility: fact-checked, peer-reviewed publication, trusted source, proofread.

'Draw me a cell': Generative AI takes on clinical predictions in cancer

A study published in Nature Machine Intelligence introduces an artificial intelligence (AI) model that can create virtual stainings of cancer tissue. The study, co-led by scientists at the Universities of Lausanne and Bern, is a major step toward improving the pathological analysis and diagnosis of cancer.

By combining several computational techniques, a team of computer scientists, biologists, and clinicians led by Marianna Rapsomaniki at the University of Lausanne and Marianna Kruithof-de Julio at the University of Bern has developed a new approach to analyzing cancer tissue.

Motivated by the problem of missing experimental data, a challenge researchers often face when working with limited patient tissue, the scientists created the "VirtualMultiplexer": an AI model that generates virtual images of diagnostic tissue stainings.

Virtual staining: A new frontier in cancer research

Using generative AI, the tool creates accurate, detailed images of a cancer tissue that show what the tissue would look like if it were stained for a given cellular marker. Such marker-specific stains can provide important information about the status of a patient's cancer and play a major role in diagnosis.

"The idea is that you only need one actual tissue coloration that is done in the lab as part of routine pathology, to then simulate which cells in that tissue would dye positive for several other, more specific markers," explains Rapsomaniki, a computer scientist and AI expert at the Biomedical Data Science Center of the University of Lausanne and the Lausanne University Hospital, and co-corresponding author of the study.

The technology could reduce the need for resource-intensive laboratory analyses and is intended to complement, rather than replace, information obtained from experiments. "Our model can be very helpful when the available tissue material is limited, or when experimental stainings cannot be done for other reasons," adds Pushpak Pati, the study's first author.

Understanding the method: Contrastive unpaired translation

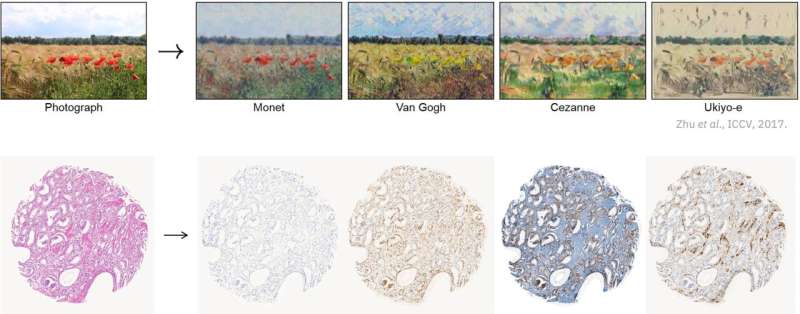

To understand the underlying method, known as "contrastive unpaired translation," imagine a mobile phone app that predicts what a young person will look like at an older age.

Based on a current photo, the app produces a virtual image simulating the person's future appearance. It does this by learning from thousands of photos of other, unrelated older people; it never needs a young and an old photo of the same person, which is what makes the translation "unpaired." Once the algorithm has learned "what an old person looks like," it can apply this transformation to any given photo.

Similarly, the VirtualMultiplexer transforms an image of one routine stain (hematoxylin and eosin, or H&E), which broadly distinguishes different regions within a cancer tissue, into images showing which cells in that tissue stain positive for a given marker molecule. This is possible because the AI model is trained on many images of other tissues on which these stains were performed experimentally.

Once it has learned what a real stained image looks like, the VirtualMultiplexer can apply the same style to a new tissue image and generate a virtual version of the desired stain.
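The "contrastive" part of contrastive unpaired translation can be illustrated with the patch-wise InfoNCE ("PatchNCE") loss from the original contrastive unpaired translation method by Park and colleagues (2020). The minimal sketch below, in plain Python, assumes each image patch has already been encoded into a feature vector; the function names, the toy vectors and the temperature of 0.07 are illustrative choices, not details taken from the VirtualMultiplexer. For each patch of the generated (virtually stained) image, the loss rewards high similarity with the patch at the same location in the input image and low similarity with patches from other locations, which pushes the generator to change the staining style while preserving the tissue's content.

```python
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    """Scale a feature vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def patch_nce_loss(output_feats: List[Vector], input_feats: List[Vector],
                   temperature: float = 0.07) -> float:
    """Average InfoNCE loss over patches (illustrative sketch, not the paper's code).

    output_feats[i] and input_feats[i] describe the patch at the same location in
    the generated and the input image (the positive pair); all other input patches
    act as negatives for query i.
    """
    if not output_feats or len(output_feats) != len(input_feats):
        raise ValueError("need the same, non-zero number of output and input patches")
    queries = [normalize(q) for q in output_feats]
    keys = [normalize(k) for k in input_feats]
    total = 0.0
    for i, q in enumerate(queries):
        # Similarity logits between query patch i and every input patch.
        logits = [sum(a * b for a, b in zip(q, k)) / temperature for k in keys]
        # Cross-entropy with the same-location patch (index i) as the correct class,
        # using the log-sum-exp trick for numerical stability.
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_sum_exp - logits[i]
    return total / len(queries)


# Toy example with three patches and hypothetical 2-D features.
inp = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
aligned = [[0.9, 0.1], [0.1, 0.9], [-0.9, 0.1]]   # content preserved
shuffled = [[0.0, 1.0], [-1.0, 0.0], [1.0, 0.0]]  # content scrambled
print(patch_nce_loss(aligned, inp) < patch_nce_loss(shuffled, inp))  # True
```

Because the negatives come from the input image itself, no stained "ground truth" of the same tissue is required, which is what allows training on unpaired images.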

Preventing hallucinations: Ensuring performance and clinical relevance

The scientists applied a rigorous validation process to ensure that the virtual images are clinically meaningful, and not just AI-generated outputs that look plausible but are in fact inventions, known as "hallucinations." They tested how well the virtual images predict clinical outcomes, such as patient survival or disease progression, compared with data from real stained tissues.

The comparison confirmed that the virtual stainings are not only accurate but also clinically useful, supporting the reliability of the model.
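One simple way to frame such a comparison is to score patients with a prognostic model once using features from real stainings and once using features from virtual stainings, then compare a discrimination metric such as the area under the ROC curve (AUC). The sketch below computes AUC from its rank-based (Mann-Whitney) definition in plain Python; the scores and labels are made-up placeholders, and the article does not specify which models or metrics the authors used.

```python
from typing import Sequence


def roc_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUC = probability that a random positive case outscores a random negative one.

    Ties count as half a win (Mann-Whitney U formulation).
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical outcome labels (1 = disease progressed) and risk scores from
# models using real versus virtual stainings of the same patients.
labels = [1, 1, 1, 0, 0, 0]
real_scores = [0.9, 0.8, 0.4, 0.5, 0.2, 0.1]
virtual_scores = [0.85, 0.7, 0.45, 0.5, 0.3, 0.1]
print(roc_auc(real_scores, labels), roc_auc(virtual_scores, labels))
```

Similar values for the two inputs would suggest the virtual stains carry comparable prognostic information. For time-to-event outcomes such as survival, the concordance index plays an analogous role.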

Going further, the researchers subjected the VirtualMultiplexer to a so-called Turing test. Named after the computing and AI pioneer Alan Turing, such a test asks whether a machine can produce output that is indistinguishable from that created by humans; here, the question was whether virtual stainings could pass for real ones.

When expert pathologists were asked to tell conventionally stained images apart from AI-generated ones, they perceived the virtual stainings as nearly identical to real images, supporting the model's effectiveness.
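If pathologists cannot tell real from virtual images, their accuracy should be close to the 50% expected from guessing. A common way to check this is an exact binomial test; the sketch below computes a two-sided p-value in plain Python. The counts are hypothetical, and the article does not say which statistical analysis, if any, the authors applied.

```python
from math import comb


def binomial_two_sided_p(correct: int, trials: int, p: float = 0.5) -> float:
    """Exact two-sided binomial test: sum the probabilities of all outcomes
    that are no more likely than the observed one."""
    probs = [comb(trials, k) * p**k * (1 - p) ** (trials - k) for k in range(trials + 1)]
    observed = probs[correct]
    # Small relative tolerance so floating-point noise does not drop tied outcomes.
    return min(1.0, sum(q for q in probs if q <= observed * (1 + 1e-9)))


# Hypothetical reader study: 100 "real or virtual?" judgements, 54 correct.
p_value = binomial_two_sided_p(54, 100)
print(f"accuracy = {54 / 100:.2f}, p = {p_value:.3f}")
```

A large p-value does not by itself prove the images are indistinguishable; it only means the data give no evidence that readers did better than chance.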

Multiscale approach: A major advancement

What sets the VirtualMultiplexer apart is its multiscale approach. Traditional models often examine tissue at either a microscopic (cell-level) or a macroscopic (whole-tissue) scale.

The model proposed by the team from Lausanne and Bern considers three different scales of the structure of a cancer tissue: its global appearance and architecture, the relationships between neighboring cells, and detailed characteristics of individual cells. This holistic approach allows for a more accurate representation of the tissue image.
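The article does not give the model's training objective, but one common way to encode a multiscale design is to add a separate term for each scale and combine them as a weighted sum. The sketch below is hypothetical: the three per-scale terms (crude intensity comparisons over the whole image, over small patches, and inside cell masks), their names and the weights are illustrative placeholders, not the VirtualMultiplexer's actual losses. It shows why several scales matter: two images can agree at the whole-tissue level while differing locally.

```python
from typing import List, Sequence, Tuple

Image = List[List[float]]     # grayscale intensities, indexed [row][col]
Cell = List[Tuple[int, int]]  # pixel coordinates belonging to one cell


def mean(values: Sequence[float]) -> float:
    return sum(values) / len(values)


def tissue_term(a: Image, b: Image) -> float:
    """Tissue scale: difference in mean intensity over the whole image."""
    return abs(mean([v for row in a for v in row]) - mean([v for row in b for v in row]))


def neighborhood_term(a: Image, b: Image, size: int) -> float:
    """Neighbourhood scale: mean difference of average intensity in size x size patches."""
    rows, cols = len(a), len(a[0])
    diffs = []
    for r in range(0, rows, size):
        for c in range(0, cols, size):
            block = [(i, j) for i in range(r, min(r + size, rows))
                     for j in range(c, min(c + size, cols))]
            diffs.append(abs(mean([a[i][j] for i, j in block])
                             - mean([b[i][j] for i, j in block])))
    return mean(diffs)


def cell_term(a: Image, b: Image, cells: List[Cell]) -> float:
    """Cell scale: mean difference of average intensity inside each cell mask."""
    return mean([abs(mean([a[i][j] for i, j in cell]) - mean([b[i][j] for i, j in cell]))
                 for cell in cells])


def multiscale_loss(a: Image, b: Image, cells: List[Cell], size: int = 2,
                    weights: Tuple[float, float, float] = (1.0, 1.0, 1.0)) -> float:
    """Weighted sum of the three per-scale terms (weights are hypothetical)."""
    w_tissue, w_neigh, w_cell = weights
    return (w_tissue * tissue_term(a, b)
            + w_neigh * neighborhood_term(a, b, size)
            + w_cell * cell_term(a, b, cells))


# Two toy 4x4 images with the same overall mean but different local layout.
left_bright = [[1.0, 1.0, 0.0, 0.0] for _ in range(4)]
right_bright = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
cells = [[(0, 0), (0, 1)], [(3, 2), (3, 3)]]  # two hypothetical cell masks
print(tissue_term(left_bright, right_bright))           # 0.0: identical at the global scale
print(neighborhood_term(left_bright, right_bright, 2))  # 1.0: every 2x2 patch differs
print(cell_term(left_bright, right_bright, cells))      # 1.0: both cells differ
```

A model judged only by the tissue-scale term would consider these two images equivalent; the neighbourhood and cell terms expose the difference.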

Implications for cancer research and beyond

The study marks a significant advance in oncology research. By generating high-quality simulated stainings that complement existing experimental data, the VirtualMultiplexer can help experts formulate hypotheses, prioritize experiments, and advance their understanding of cancer biology.

Marianna Kruithof-de Julio, head of the Urology Research Laboratory at the University of Bern, and co-corresponding author of the study, sees important potential for future applications. "We developed our tool using tissues from people affected by prostate cancer. In the paper we also showed that it works similarly well for pancreatic tumors—making us confident that it can be useful for many other disease types."

The approach could also support so-called foundation AI models in biological research. Such models derive their power from learning in a self-supervised manner from vast amounts of data, which allows them to capture the logic behind complex structures and to perform many different types of tasks.

"The available data for rare tissues is scarce. The VirtualMultiplexer can fill these gaps by generating realistic images rapidly and at no cost, and thereby help future foundation models to analyze and describe tissue characteristics in multiple different ways. This will pave the way for new discoveries in research and diagnosis," concludes Rapsomaniki.

More information: Pushpak Pati et al., 'Accelerating histopathology workflows with generative AI-based virtually multiplexed tumour profiling', Nature Machine Intelligence (2024). DOI: 10.1038/s42256-024-00889-5