Mimicking the brain, in silicon: New computer chip models how neurons communicate

For decades, scientists have dreamed of building computer systems that could replicate the human brain’s talent for learning new tasks.

MIT researchers have now taken a major step toward that goal by designing a computer chip that mimics how the brain’s neurons adapt in response to new information. This phenomenon, known as plasticity, is believed to underlie many brain functions, including learning and memory.

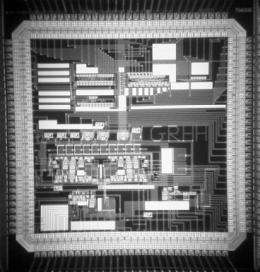

With about 400 transistors, the silicon chip can simulate the activity of a single brain synapse — a connection between two neurons that allows information to flow from one to the other. The researchers anticipate this chip will help neuroscientists learn much more about how the brain works, and could also be used in neural prosthetic devices such as artificial retinas, says Chi-Sang Poon, a principal research scientist in the Harvard-MIT Division of Health Sciences and Technology.

Poon is the senior author of a paper describing the chip in the Proceedings of the National Academy of Sciences the week of Nov. 14. Guy Rachmuth, a former postdoc in Poon’s lab, is lead author of the paper. Other authors are Mark Bear, the Picower Professor of Neuroscience at MIT, and Harel Shouval of the University of Texas Medical School.

Modeling synapses

There are about 100 billion neurons in the brain, each of which forms synapses with many other neurons. A synapse is the gap between two neurons (known as the presynaptic and postsynaptic neurons). The presynaptic neuron releases neurotransmitters, such as glutamate and GABA, which bind to receptors on the postsynaptic cell membrane, activating ion channels. Opening and closing those channels changes the cell’s electrical potential. If the potential changes dramatically enough, the cell fires an electrical impulse called an action potential.
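The threshold behavior described above can be sketched with a leaky integrate-and-fire model, a common textbook simplification. This is not the circuit on the MIT chip, and every constant and name below (`simulate_lif`, the resting and threshold voltages, the time constant) is an illustrative assumption rather than a value from the paper.

```python
def simulate_lif(input_current_na, dt_ms=0.1, duration_ms=100.0):
    """Leaky integrate-and-fire neuron; returns a list of spike times in ms.

    All constants are illustrative assumptions, not measured values.
    """
    v_rest_mv = -65.0        # resting potential
    v_threshold_mv = -50.0   # potential at which the cell "fires"
    v_reset_mv = -65.0       # potential right after a spike
    tau_ms = 10.0            # membrane time constant
    resistance_mohm = 10.0   # nA * MOhm gives mV

    v = v_rest_mv
    spike_times = []
    steps = int(round(duration_ms / dt_ms))
    for step in range(steps):
        # Euler step: leak back toward rest plus drive from the input current.
        dv = (-(v - v_rest_mv) + resistance_mohm * input_current_na) * dt_ms / tau_ms
        v += dv
        if v >= v_threshold_mv:
            # Potential changed "dramatically enough": record a spike and reset.
            spike_times.append((step + 1) * dt_ms)
            v = v_reset_mv
    return spike_times


weak = simulate_lif(1.0)    # settles near -55 mV, below threshold
strong = simulate_lif(2.0)  # would settle near -45 mV, so it crosses threshold
print(len(weak), len(strong))
```

With these assumed values the weaker input never reaches threshold, while the stronger one fires repeatedly.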

All of this synaptic activity depends on the ion channels, which control the flow of charged atoms such as sodium, potassium and calcium. Those channels are also key to two processes known as long-term potentiation (LTP) and long-term depression (LTD), which strengthen and weaken synapses, respectively.

The MIT researchers designed their computer chip so that the transistors could mimic the activity of different ion channels. While most chips operate in a binary, on/off mode, current flows through the transistors on the new brain chip in analog, not digital, fashion. A gradient of electrical potential drives current to flow through the transistors just as ions flow through ion channels in a cell.
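As a rough illustration of how a potential gradient drives ions through a channel, the sketch below uses two standard textbook relations: the Nernst equation for an ion's equilibrium potential, and an ohmic current proportional to the difference between the membrane potential and that equilibrium. The article does not give the chip's circuit equations, so the function names, the 10 nS conductance and the potassium concentrations are assumptions chosen only for illustration.

```python
import math

GAS_CONSTANT = 8.314462618   # J/(mol*K)
FARADAY = 96485.33212        # C/mol


def nernst_potential_mv(conc_out_mm, conc_in_mm, valence, temp_c=37.0):
    """Equilibrium potential (mV) for one ion species, from the Nernst equation."""
    temp_k = temp_c + 273.15
    volts = GAS_CONSTANT * temp_k / (valence * FARADAY) * math.log(conc_out_mm / conc_in_mm)
    return 1000.0 * volts


def channel_current_na(conductance_ns, membrane_mv, reversal_mv):
    """Ohmic channel current in nA: conductance times driving force.

    nS * mV gives pA, so divide by 1000 to report nA.
    Positive values mean net outward flow of a positive ion.
    """
    return conductance_ns * (membrane_mv - reversal_mv) / 1000.0


# Assumed, illustrative potassium concentrations (mM): 5 outside, 140 inside.
e_k = nernst_potential_mv(5.0, 140.0, valence=1)
i_k = channel_current_na(10.0, membrane_mv=-65.0, reversal_mv=e_k)
print(round(e_k, 1), round(i_k, 3))
```

When the membrane potential equals the equilibrium potential the driving force is zero and no net current flows, which is the sense in which the gradient, not the channel alone, sets the current.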

“We can tweak the parameters of the circuit to match specific ion channels,” Poon says. “We now have a way to capture each and every ionic process that’s going on in a neuron.”

Previously, researchers had built circuits that could simulate the firing of an action potential, but not all of the circumstances that produce the potentials. “If you really want to mimic brain function realistically, you have to do more than just spiking. You have to capture the intracellular processes that are ion channel-based,” Poon says.

The new chip represents a “significant advance in the efforts to incorporate what we know about the biology of neurons and synaptic plasticity onto CMOS [complementary metal-oxide-semiconductor] chips,” says Dean Buonomano, a professor of neurobiology at the University of California at Los Angeles, adding that “the level of biological realism is impressive.”

The MIT researchers plan to use their chip to build systems to model specific neural functions, such as the visual processing system. Such systems could be much faster than digital computers. Even on high-capacity computer systems, it takes hours or days to simulate a simple brain circuit. With the analog chip system, the simulation is even faster than the biological system itself.

Another potential application is building chips that can interface with biological systems. This could be useful in enabling communication between neural prosthetic devices such as artificial retinas and the brain. Further down the road, these chips could also become building blocks for artificial intelligence devices, Poon says.

Debate resolved

The MIT researchers have already used their chip to propose a resolution to a longstanding debate over how LTD occurs.

One theory holds that LTD and LTP depend on the frequency of action potentials stimulated in the postsynaptic cell, while a more recent theory suggests that they depend on the timing of the action potentials’ arrival at the synapse.
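The contrast between the two theories can be made concrete with two toy update rules. Neither is the model implemented on the chip. The first is a simple threshold rule on postsynaptic firing rate, and the second is a generic pair-based timing rule of the kind often used in the modeling literature. The function names, amplitudes, time constant and 10 Hz threshold are all assumptions, as is the sign convention that a presynaptic spike arriving shortly before a postsynaptic one strengthens the synapse.

```python
import math


def rate_based_change(post_rate_hz, threshold_hz=10.0, gain=0.01):
    """Frequency view: weight change depends on postsynaptic firing rate.

    Rates above the (assumed) threshold give potentiation (positive),
    rates below it give depression (negative).
    """
    return gain * (post_rate_hz - threshold_hz)


def timing_based_change(dt_ms, a_plus=0.01, a_minus=0.012, tau_ms=20.0):
    """Timing view: weight change depends on dt_ms = t_post - t_pre.

    Assumed convention: pre-before-post (dt > 0) potentiates,
    post-before-pre (dt < 0) depresses, and the effect decays
    exponentially as the spikes move further apart in time.
    """
    if dt_ms > 0:
        return a_plus * math.exp(-dt_ms / tau_ms)
    if dt_ms < 0:
        return -a_minus * math.exp(dt_ms / tau_ms)
    return 0.0


for rate in (1.0, 50.0):
    print("rate", rate, rate_based_change(rate))
for dt in (-10.0, 10.0, 100.0):
    print("dt", dt, timing_based_change(dt))
```

The two rules take different inputs (a rate versus a spike-time difference), which is why reconciling them requires a shared underlying mechanism rather than a change of parameters.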

Both theories require the involvement of ion channels known as NMDA receptors, which detect postsynaptic activation. Recently, it has been theorized that both models could be unified if there were a second type of receptor involved in detecting that activity. One candidate for that second receptor is the endo-cannabinoid receptor.

Endo-cannabinoids, similar in structure to the active compounds in marijuana, are produced in the brain and are involved in many functions, including appetite, pain sensation and memory. Some neuroscientists had theorized that endo-cannabinoids produced in the postsynaptic cell are released into the synapse, where they activate presynaptic endo-cannabinoid receptors. If NMDA receptors are active at the same time, LTD occurs.

When the researchers included on their chip transistors that model endo-cannabinoid receptors, they were able to accurately simulate both LTD and LTP. Although previous experiments supported this theory, until now, “nobody had put all this together and demonstrated computationally that indeed this works, and this is how it works,” Poon says.

This story is republished courtesy of MIT News (web.mit.edu/newsoffice/), a popular site that covers news about MIT research, innovation and teaching.