March 30, 2013 feature

The visual system as economist: Neural resource allocation in visual adaptation

(Medical Xpress)—It has long been held that in a new environment, visual adaptation should improve visual performance. However, evidence has contradicted this expectation: Adaptation sometimes not only decreases sensitivity for the adapting stimuli, but can also change sensitivity for stimuli very different from the adapting ones. Recently, scientists at the Salk Institute for Biological Studies and the Schepens Eye Research Institute formulated and tested the hypothesis that these results can be explained by a process that optimizes sensitivity for many stimuli, rather than changing sensitivity only for those stimuli whose statistics have changed. By manipulating stimulus statistics – that is, measuring visual sensitivity across a wide range of spatiotemporal luminance modulations while varying the distribution of stimulus speeds – the researchers demonstrated a large-scale reorganization of visual sensitivity. This reorganization formed an orderly pattern of sensitivity gains and losses predicted by a theory describing how visual systems can optimize the distribution of receptive field characteristics across stimuli.

Researchers Sergei Gepshtein, Luis A. Lesmes and Thomas D. Albright faced a variety of challenges in conducting their study. "It's well known that exposure to new visual stimuli changes our perception of these stimuli. However, understanding the nature of this adaptive process – that is, why it happens and what its goals are – has been elusive," Gepshtein tells Medical Xpress. "Previous visual adaptation studies produced puzzling results that would not agree with a simple explanation." More specifically, Gepshtein explains, visual adaptation would sometimes improve sensitivity to new stimuli, but sometimes sensitivity would decrease, or would change for stimuli that differed from the new stimuli.

"From our current perspective, previous results appeared to be inconsistent because adaptation was viewed as a local phenomenon. Rather," Gepshtein points out, "visual perception is mediated by multiple neuronal cells organized in a system in which each cell is responsive only to a small range of stimuli, but the system as a whole is responsive to the entire ensemble of stimuli."

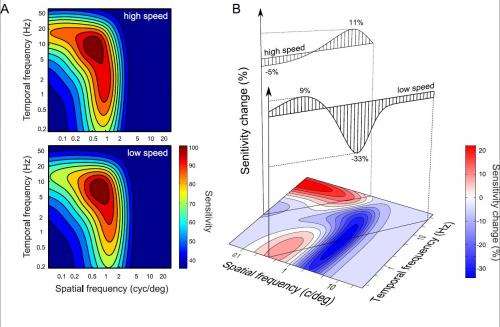

From the system perspective, the question the scientists faced was how the system organizes sensitivity of its multiple cells across the full range of stimuli. "In the previous view, which we call stimulus account of adaptation, the question was how changing stimulus frequency or persistency would change sensitivity to that stimulus," Gepshtein continues. "In our present system account of adaptation, however, we instead ask how adaptation affects sensitivity to the entire ensemble of potential stimuli." In other words, instead of a local approach (sensitivity changes to individual stimuli), the researchers adopted a global approach (how the distribution of sensitivity across all stimuli is affected by changes in the distribution of stimulation).

Interestingly, since the number of neuronal cells in the system is large but limited, the scientists view the visual system's organization of sensitivity as an economic process – that is, as the allocation of limited resources. "When stimulation changes," Gepshtein explains, "the visual system reorganizes its sensitivity by reallocating neural resources. Because the resources are limited, increasing sensitivity to some stimulus must be accompanied by decreasing sensitivity to some other stimulus. It is therefore expected that sensory adaptation creates a pattern of gains and losses in sensitivity."
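The trade-off described above can be illustrated with a toy calculation. The sketch below assumes a fixed budget of resources split in proportion to how often each stimulus occurs; this proportional rule, the budget value, and the weights are hypothetical choices made for illustration and are not the allocation rule derived in the study.

```python
def allocate(budget, weights):
    """Split a fixed budget across stimuli in proportion to their weights.

    The proportional rule is an illustrative assumption, not the study's theory.
    """
    total = sum(weights)
    return [budget * w / total for w in weights]

BUDGET = 1000.0  # hypothetical, fixed amount of neural resources

# Hypothetical stimulus frequencies before and after the environment changes.
before = allocate(BUDGET, [1, 2, 4, 2, 1])
after = allocate(BUDGET, [1, 1, 2, 4, 2])

# Per-stimulus change in allocated resources.
change = [a - b for a, b in zip(after, before)]

for i, c in enumerate(change):
    print(f"stimulus {i}: {c:+.1f}")
print("net change:", sum(change))
```

Because the budget is the same before and after, the per-stimulus changes cancel out: every gain for one stimulus is paid for by a loss elsewhere.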

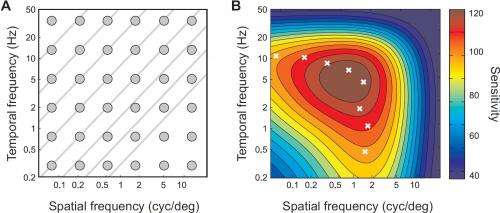

Gepshtein cites a theoretical study of neural resource allocation in the visual system that suggested how sensitivity would change if adaptation were to cause a (re)allocation of resources across the entire range of stimuli¹. In particular, he summarizes, the study suggested that the shape of the distribution of sensitivity in human vision is consistent with predictions of efficient allocation of the limited neural resources, and that changes in stimulation would cause a shift of the sensitivity function. This shift would entail a characteristic pattern of local gains and losses in sensitivity.

Essentially, Gepshtein continues, their theory is based on the fact that measurements by different cells are characterized by different spatial and temporal intervals of measurement called receptive fields. This fact entails different measurement uncertainty – that is, different expected precision – for different cells, meaning that neural cells with receptive fields of different sizes are expected to be differentially useful for measuring different stimuli. "The theoretical study specifies how such cells should be allocated to stimuli by showing that the most efficient allocation of cells to stimuli results in a sensitivity function similar to the well-known spatiotemporal contrast sensitivity function, and also how changes in speed distribution should cause a shift of the function."

In greater detail, Gepshtein adds, the theory is concerned with how measurements by individual cells can be organized in the visual system to attain efficient performance of the system – that is, for the full range of visual stimuli. "Again, each cell can only measure a limited range of stimuli – but the capacity of every cell is limited by another fundamental constraint." The kind of information that can be obtained from a single cell is limited because of the uncertainty principle of measurement².

In the field of sensory perception, the principle is associated with Dennis Gabor, a brilliant engineer and inventor, who in 1946 formulated the principle and studied its consequences for auditory perception. In physics the same principle is associated with the name of Werner Heisenberg – one of the founders of quantum mechanics. "In either formulation," Gepshtein notes, "the principle captures a limit to the precision with which certain pairs of physical properties can be measured at the same time – in our case, stimulus location and frequency content." Stimulus location concerns where or when the stimulus occurs in space or time, respectively; stimulus frequency content concerns the ability to identify the stimulus.
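For readers who want the limit in symbols, Gabor's relation for signals in time is commonly written as below. The notation is an assumption added here rather than taken from the article: Δt and Δf denote the spreads (standard deviations) of the signal in time and in temporal frequency, Δx and Δf_x are the spatial counterparts, and the exact constant depends on how the spreads are defined.

```latex
\Delta t \,\Delta f \;\ge\; \frac{1}{4\pi},
\qquad
\Delta x \,\Delta f_x \;\ge\; \frac{1}{4\pi}
```

With this definition of spread, the bound is reached by Gaussian-windowed sinusoids (Gabor functions): narrowing the interval over which a stimulus is measured necessarily broadens the uncertainty about its frequency content, and vice versa.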

"To test predictions of this theory," Gepshtein says, "we varied the distribution of stimuli instead of inducing adaptation by a single stimulus, and measured changes of sensitivity across a broad range of stimuli. We implemented changes of stimulus speed by using the same range of speed in all experiments, while sampling different speeds from this range more or less often." The researchers found that manipulating stimulus speeds caused a large-scale pattern of sensitivity changes and that the adaptive changes added up to an orderly pattern – a pattern similar to that predicted by the theory of efficient allocation.
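The manipulation described above, keeping the range of speeds fixed while changing how often each speed is sampled, can be sketched roughly as follows. The speed values, the weights, and the function name `sample_speeds` are hypothetical and chosen only for illustration; they are not the parameters used in the experiments.

```python
import random

# Hypothetical speed range (deg/s) shared by both conditions; not from the study.
SPEEDS = [0.5, 1, 2, 4, 8, 16]

def sample_speeds(weights, n, seed=0):
    """Draw n stimulus speeds from SPEEDS with the given relative frequencies."""
    rng = random.Random(seed)
    return rng.choices(SPEEDS, weights=weights, k=n)

# Same range in both conditions; only the sampling frequencies differ.
LOW_SPEED_WEIGHTS = [6, 5, 4, 3, 2, 1]   # slow speeds shown more often
HIGH_SPEED_WEIGHTS = [1, 2, 3, 4, 5, 6]  # fast speeds shown more often

low = sample_speeds(LOW_SPEED_WEIGHTS, 10_000)
high = sample_speeds(HIGH_SPEED_WEIGHTS, 10_000)

mean_low = sum(low) / len(low)
mean_high = sum(high) / len(high)
print(f"mean speed, low-speed condition:  {mean_low:.2f}")
print(f"mean speed, high-speed condition: {mean_high:.2f}")
```

Both conditions contain every speed in the range, so any difference in measured sensitivity between them would reflect the change in the distribution rather than exposure to speeds that were never shown.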

Despite the extent and complexities of the challenges the researchers faced, Gepshtein says that their study was made possible by two key factors: the theoretical insight discussed above, and the new rapid methods of measurement of visual sensitivity developed by Dr. Lesmes and his colleagues. "Previously," Gepshtein explains, "sensitivity had to be measured separately for many stimuli, which took a prohibitively long time. Dr. Lesmes' new methods allowed us to measure parameters of the sensitivity function directly, rather than fitting the sensitivity function to results of multiple separate sensitivity measurements, thereby optimizing the measurements for estimating sensitivity functions."

Moving forward, the scientists are planning the next steps in their research. "This work is one of the first demonstrations of how previously puzzling results can make sense from the perspective of efficient allocation of limited neural resources," Gepshtein points out. "We're at the beginning of a large series of studies inspired by this approach." For example, so far the team has studied motion perception using only one spatial dimension – but to study motion direction, at least two spatial dimensions must be included. "We'll generalize the theoretical framework and the measurement procedures to study how motion sensitivity is controlled across both speed and direction," Gepshtein adds. "This will allow us to use our approach to investigate perception of natural stimuli, such as movies that capture motion in natural visual scenes."

In addition, the scientists have been concerned with stimuli at a single spatial location at a time – but Gepshtein points out that the economic view suggests that neural resources can be (re)allocated across spatial locations, just as they are (re)allocated across stimulus speeds. "It's an obvious extension of our present approach, and it's one of the steps we'll have to take in order to develop a complete understanding of motion adaptation."

It's also important, he adds, to better understand connections between their present results and those of previous adaptation studies, which, as discussed, found that adaptation can cause gains or losses of sensitivity. "Now we've found that gains and losses can be special cases of a large-scale pattern of sensitivity changes," Gepshtein stresses. "For the sake of completeness, it's important to show how previous results are consistent with our new results."

That said, Gepshtein cautions that the connection is not as simple as showing that the previous (local) results add up to the newly found large-scale pattern, because the local and global studies use different distributions (narrow and broad, respectively) of adapting stimuli. "From our present perspective, there's an interesting paradox here, in that the process of measurement changes the object of measurement – that is, the visual system. By nature of adaptation, different stimulus distributions cause different patterns of adaptation. This is one of the questions we're pursuing at the moment – and we're doing so by tracing adaptation effects as we change the distribution of adapting stimuli from narrow to broad."

Looking further ahead, Gepshtein says that the team is very interested in how large-scale sensitivity transformation is implemented in neural circuits. "We approach this question two ways," he explains. "Firstly, we perform simulations of neuronal plasticity in the circuits that control receptive field size, and trace the effects of this plasticity to changes in the sensitivity function." (This work is done in collaboration with a group of researchers led by computer scientist Peter Jurica³ at the RIKEN Brain Science Institute.)

"Secondly," he continues, "we're beginning to investigate how neural circuits and neural cells change their preferences in response to the manipulation of speed distribution employed in the present study." This physiological project is led by Prof. Albright, who directs the Salk Institute Vision Center Laboratory.

Gepshtein says that the applications of their research are very broad. "They concern the technologies where motion sensing and compression of dynamic visual signals are involved. To illustrate, detection of motion using modern sensors is fast and inexpensive. Finding the meaning of motion signals – for example, discovering the identity of moving objects – is slow and requires considerable computational resources because information from multiple local sensors has to be integrated and analyzed. Our studies reveal how this integration is implemented in biological vision."

The emerging picture, Gepshtein notes, is that biological visual systems improve their efficiency, including processing speed, by rapidly reallocating their computational resources to important stimuli. "This reallocation is graded," he concludes. "It doesn't leave the system unprepared for perception of stimuli that are less important at the moment. Rather, all stimuli are monitored, albeit with different quality, so the resources can be rapidly moved to the newly important aspects of stimulation."

More information: Sensory adaptation as optimal resource allocation, PNAS March 12, 2013 vol. 110 no. 11 4368-4373, doi:10.1073/pnas.1204109110 [Updated version with Corrections marked]

Related:

¹ The economics of motion perception and invariants of visual sensitivity, Journal of Vision June 21, 2007 vol. 7 no. 8 article 8, doi:10.1167/7.8.8

² Two psychologies of perception and the prospect of their synthesis (Section 4, pp. 247-263)

³ Unsupervised adaptive optimization of motion-sensitive systems guided by measurement uncertainty, Proceedings of the Third International Conference on Intelligent Sensors, Sensor Networks and Information Processing, ISSNIP 2007, pp. 179-184

Copyright 2013 Medical Xpress
