What sign language teaches us about the brain

The world's leading humanoid robot, ASIMO, has recently learnt sign language. The news of this breakthrough came just as I completed Level 1 of British Sign Language (I dare say it took me longer to master signing than it did the robot!). As a neuroscientist, the experience of learning to sign made me think about how the brain perceives this means of communicating.

For instance, during my training I found that mnemonics greatly simplified the learning process. To sign the colour blue, you rub the back of your left hand with the fingers of your right hand; my mnemonic for this sign is that the veins on the back of the hand appear blue. I was therefore forming an association between the English word blue, the BSL sign for blue, and the visual aid that links the two. Yet the two languages differ markedly: one relies on sounds, the other on visual signs.

Do our brains process these languages differently? It seems that for the most part, they don't. And it turns out that brain studies of sign language users have helped bust a few myths.

As neuroscience took off, it became fashionable to identify specific brain regions thought to be responsible for particular skills. We now know that this oversimplification paints only half the picture, and nowhere is this clearer than in how the human brain perceives language, whether spoken or signed.

The evidence for this comes from two kinds of studies: lesion analyses, which examine the functional consequences of damage to brain regions involved in language, and neuroimaging, which explores how these regions are engaged in processing language.

Lesions teach new lessons

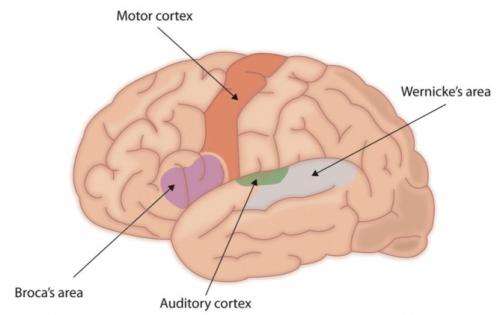

Early theories of language processing pointed to two regions in the left hemisphere of the brain that were thought to be chiefly responsible for producing and understanding spoken language: Broca's area and Wernicke's area.

Damage to Broca's area, which lies near the part of the motor cortex that controls the mouth and lips, usually causes difficulties in producing speech. But it does not rob a person of the ability to communicate or to understand conversation. So a hearing person with a lesion in Broca's area (which can occur after a stroke, for example) may be unable to form fluent sentences, but could still use single words and short phrases, and perhaps nod or shake their head to respond.

The comprehension of speech, on the other hand, is largely believed to be processed in Wernicke's area, which lies near the auditory cortex, the part of the brain that receives signals from the ears. Hearing people with damage to Wernicke's area usually speak fluently, but may make up words (for example, "cataloop" for "caterpillar") and produce long sentences that have no meaning.

If Broca's area were involved solely in producing speech, and Wernicke's area solely in understanding speech sounds, we might expect visual languages such as sign language to remain unaffected when these areas are damaged. Surprisingly, they are not.

One of the seminal studies in this field was by the award-winning husband-and-wife team Edward Klima and Ursula Bellugi at the Salk Institute. They found that deaf signers with lesions in left-hemisphere "speech centres" such as Broca's and Wernicke's areas made significantly more sign errors on naming, repetition and sentence-comprehension tasks than signers with damage to the right hemisphere.

The right hemisphere of the brain is more involved in visual and spatial functions than the left, and it does play some part in producing and comprehending sign language. However, these findings show that, despite the difference in modality, signed and spoken languages are similarly affected by damage to the left hemisphere of the brain.

Images speak out too

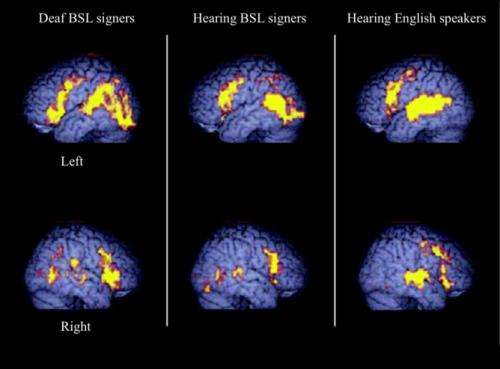

Functional neuroimaging, which can show which regions of the brain are active, has corroborated the lesion studies. Despite the fundamental differences in the input and output modes of signed and spoken languages, deaf and hearing people show common patterns of brain activation when processing language.

For instance, Broca's area is also activated when producing signs and Wernicke's area is activated during the perception of sign language.

Most importantly, these lesion and neuroimaging studies helped establish two facts. First, language is not limited to hearing and speech: sign languages are complex linguistic systems, processed much like spoken languages. Second, the studies reinforced growing doubts about oversimplified theories of language perception. Because Broca's and Wernicke's areas are involved in processing sign language, we can no longer think of them exclusively as centres for producing speech and hearing sound, but rather as higher-order language areas of the brain.

Contrary to a common misconception, there is no universal sign language. According to a recent estimate, there are 138 varieties of sign language in the world today, with structured syntax and grammar, and even regional accents. It is unfortunate, then, that a significant proportion of the global deaf community is still fighting for legal recognition of these languages.

Sign language is sometimes mistakenly regarded as a "disability" language, simply a visual means of conveying spoken language, when in fact its linguistic construction is almost entirely independent of spoken language. For instance, American and British Sign Language are mutually unintelligible, even though hearing people in Britain and America predominantly share the same spoken language.

Knowledge of how sign languages are processed in the brain has not only furthered our understanding of the brain itself, but has also played a part in quashing the once widely believed notion that these signs were simply a loose collection of gestures strung together to communicate spoken language.

This story is published courtesy of The Conversation (under Creative Commons-Attribution/No derivatives).