Scientists reaching consensus on how the brain processes speech

Neuroscientists feel they are much closer to an accepted unified theory of how the brain processes speech and language, according to a scientist at Georgetown University Medical Center who first laid out the concepts a decade ago and who has now published a review article confirming the theory.

In the June issue of Nature Neuroscience, the investigator, Josef Rauschecker, PhD, and his co-author, Sophie Scott, PhD, a neuroscientist at University College London, say that both human and non-human primate studies have confirmed that speech, one important facet of language, is processed in the brain along two parallel pathways, each of which runs from lower- to higher-functioning neural regions.

These pathways are dubbed the "what" and "where" streams and are roughly analogous to the pathways the brain uses to process sight, but are located in different regions, says Rauschecker, a professor in the department of physiology and biophysics and a member of the Georgetown Institute for Cognitive and Computational Sciences.

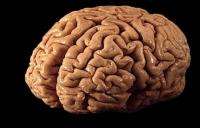

Both pathways begin with the processing of signals in the auditory cortex, which lies in the so-called "temporal lobe," inside a deep fissure on the side of the brain beneath the temples. Information processed by the "what" pathway then flows forward along the outside of the temporal lobe; the job of that pathway is to recognize complex auditory signals, including communication sounds and their meaning (semantics). The "where" pathway lies mostly in the parietal lobe, above the temporal lobe. It processes the spatial aspects of a sound, such as its location and its motion in space, but it is also involved in providing feedback during the act of speaking.

Auditory perception - the processing and interpretation of sound information - is tied to anatomical structures; signals move from lower to higher brain regions, Rauschecker says. "Sound as a whole enters the ear canal and is first broken down into single tone frequencies, then higher-up neurons respond only to more complex sounds, including those used in the recognition of speech, as the neural representation of the sound moves through the various brain regions," he says.

Both human and nonhuman primate studies were examined in this review.

In humans, researchers use functional magnetic resonance imaging (fMRI) to "watch" activity move between brain regions in experiments testing speech "(re)cognition," Rauschecker says. In non-human primates, investigators use a technique known as single-cell recording, which can measure changes in the activity of a single neuron. For these recordings, anesthetized animals are fitted with microelectrodes that pick up activity in pinpointed brain areas. The technique can be used only rarely in human patients, but it provides much finer resolution than fMRI.

"In both species, we are using species-specific communication sounds for stimulation, such as speech in humans and rhesus-specific calls in rhesus monkeys," Rauschecker says. "We find that the structure of these communication sounds is similar across species."

What Rauschecker finds so interesting is that although speech and language are considered uniquely human abilities, the emerging picture of how the brain processes language suggests that "in evolution, language must have emerged from neural mechanisms at least partially available in animals," he says.

"Speech, or the early process of language, is well modeled by animal communication systems, and these studies now demonstrate that primate auditory cortex, across species, displays the same patterns of hierarchical structure, topographic mapping, and streams of functional processing," Rauschecker says. "There appears to be a conservation of certain processing pathways through evolution in humans and nonhuman primates."

While this research is basic science trying to solve fundamental questions about the brain, it may ultimately yield some valuable insights into disorders that involve problems in comprehending auditory signals, such as autism and schizophrenia, he says.

"Understanding speech is one of the major problems seen in autism, and a person with schizophrenia hears sounds that are just hallucinations," Rauschecker says. "Eventually, this area of research will lead us to better treatment for these issues.

"But mostly, we are fascinated by the fact that humans can make such exquisite sense of the slight variation in sound waves that reach our ears, and only lately have we been able to model how the brain knows how to attach meaning to these sounds in terms of communication," he says.

Source: Georgetown University Medical Center