December 31, 2012 feature

Face the facts: Neural integration transforms unconscious face detection into conscious face perception

(Medical Xpress) – The apparent ease and immediacy of human perception is deceptive, requiring highly complex neural operations to determine the category of objects in a visual scene. Nevertheless, the human brain is able to complete operations such as face category tuning (the ability to differentiate faces from other similar objects) completely outside of conscious awareness. Apparently, such complex processes are not sufficient for us to consciously perceive faces. Now, scientists from the University of Amsterdam have used functional magnetic resonance imaging (fMRI) and electroencephalography (EEG) to show that while visible and invisible faces produce similar category-selective responses in the brain's ventral visual cortex, only visible faces cause widespread response enhancements and changes in neural oscillatory synchronization. The team concluded that sustained neural information integration is a key factor in conscious face perception.

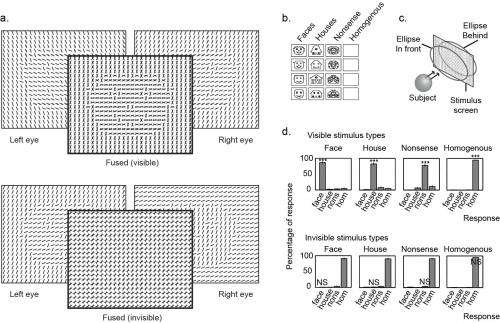

Dr. Johannes J. Fahrenfort, Prof. Victor A. F. Lamme and their fellow researchers faced a series of challenges in conducting their study. "First off there were practical challenges," Fahrenfort tells Medical Xpress, referring to their finding that visible and invisible faces produce similar category-selective neural responses. "To create dichoptic fusion" – the perceptual integration of different sensory events in the left and the right eye – "we used a stereo presentation setup with two beamers that were projecting on the same screen but contained differently polarized filters, resulting in a different image in the left and the right eye. It proved to be very difficult to get – and keep! – the beamers exactly aligned." The scientists addressed this by having a special apparatus fabricated that allowed them to adjust the position of each beamer millimeter-by-millimeter in all directions. In addition, they had to use a stimulus sequence that made objects easily visible in the visible condition but completely invisible in the invisible condition. "It took quite a bit of pilot experimenting to get this right."

Another issue they faced was in the analysis, Fahrenfort adds, because finding visibility-invariant category tuning required them to look for voxels (three-dimensional volumetric pixels) that responded to both visible and invisible faces. "These were easiest to find using the experimental dataset itself," he explains. "To prevent double dipping, we initially used the last run to localize voxels, and the other runs to do statistical testing." (Double dipping is an invalid statistical procedure in which the same data set is used for data selection and statistical testing.) "However, one of the reviewers felt that we should optimize power, so we ended up reanalyzing the dataset using a split-half method – that is, one in which we used one half of the data to determine regions of interest, exporting the other half for statistical testing, and vice versa."
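The logic of the split-half method can be illustrated with a minimal sketch. It is not the study's actual pipeline: the function names, the synthetic noise data, and the choice of selecting the 50 most responsive voxels are all assumptions made for illustration. It shows why selecting and testing on the same runs inflates an effect even when none exists, while selecting in one half and testing in the other does not.

```python
import numpy as np

def top_voxels(data, n_select):
    """Indices of the n_select voxels with the largest mean response."""
    return np.argsort(data.mean(axis=0))[-n_select:]

def double_dipped_effect(data, n_select=50):
    """Invalid procedure: select voxels and test them on the same runs."""
    roi = top_voxels(data, n_select)
    return data[:, roi].mean()

def split_half_effect(half_a, half_b, n_select=50):
    """Select voxels in one half, test in the other, then swap and average."""
    a_to_b = half_b[:, top_voxels(half_a, n_select)].mean()
    b_to_a = half_a[:, top_voxels(half_b, n_select)].mean()
    return 0.5 * (a_to_b + b_to_a)

# Synthetic example (assumption): 8 runs x 2000 voxels of pure noise,
# so the true effect is zero everywhere.
rng = np.random.default_rng(0)
noise = rng.normal(size=(8, 2000))

biased = double_dipped_effect(noise)
unbiased = split_half_effect(noise[:4], noise[4:])
print(f"double-dipped: {biased:.2f}, split-half: {unbiased:.2f}")
```

Because each half serves once for selection and once for testing, all of the data contribute to the final estimate, which is the gain in power the reviewer was asking for.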

Regarding their confirmation that neural activity patterns evoked by visible faces could be used to decode the presence of invisible faces and vice versa, Fahrenfort notes that two main things were important: separately normalizing across visible and across invisible conditions, and maximizing power by using the entire visible and invisible stimulus datasets for each subject, resulting in a single classification value (1 or 0) for each subject. Both of these critical steps were derived from the seminal 2001 Haxby paper in Science1.
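A rough sketch of this kind of correlation-based cross-decoding follows. It is an illustration under stated assumptions, not the authors' code: the category labels `face` and `nonface`, the function name `cross_decode`, and the synthetic voxel patterns are hypothetical. Each visibility condition is normalized on its own by subtracting its across-category mean pattern, and the subject receives a 1 if the visible face pattern correlates more strongly with the invisible face pattern than with any other invisible category.

```python
import numpy as np

def cross_decode(visible, invisible, target="face"):
    """Return 1 if the target category is decoded across visibility, else 0.

    visible, invisible: dicts mapping category name -> mean voxel pattern
    (1-D arrays), each averaged over that subject's entire dataset.
    """
    def normalize(patterns):
        # Subtract the across-category mean within this condition only.
        mean = np.mean(list(patterns.values()), axis=0)
        return {name: p - mean for name, p in patterns.items()}

    vis, inv = normalize(visible), normalize(invisible)

    def corr(a, b):
        return np.corrcoef(a, b)[0, 1]

    within = corr(vis[target], inv[target])
    between = max(corr(vis[target], inv[name]) for name in inv if name != target)
    return int(within > between)

# Synthetic subject (assumption): a shared category signature that is
# weaker, and sits on a different baseline, in the invisible condition.
rng = np.random.default_rng(1)
n_vox = 200
sig = {"face": rng.normal(size=n_vox), "nonface": rng.normal(size=n_vox)}
visible = {k: 5.0 + s + 0.1 * rng.normal(size=n_vox) for k, s in sig.items()}
invisible = {k: 0.3 * s + 0.1 * rng.normal(size=n_vox) for k, s in sig.items()}

decoded = cross_decode(visible, invisible)
print("classification value:", decoded)
```

Normalizing the two conditions separately keeps an overall difference in response level between visible and invisible stimuli from masking the category-specific pattern.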

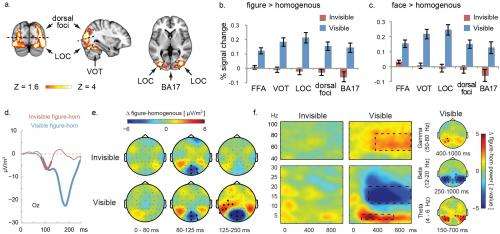

However, the extensive response enhancements and changes in neural oscillatory synchronization, Fahrenfort continues, were extremely clear-cut. "There's always a challenge involved in making a case for a null-result: actually proving a null-result is of course impossible. It was very helpful that the difference between visible and invisible was so telling. With respect to the response enhancements, the amount of modulation for visible faces went through the roof, really. For invisible faces there was no modulation whatsoever – in fact, if anything there was a slight negative modulation in early visual areas. The only area that showed positive modulation for invisible faces was the fusiform face area." The fusiform face area, or FFA, is a region of the human cortex that responds selectively to human faces. "This was to be expected, given its selectivity for both visible and invisible faces. Given that monocular stimulation was the same for both conditions, these results were very convincing."

Similarly, Fahrenfort points out, there was not much of a challenge in determining that functional connectivity between higher and lower visual areas increased, beyond running the basic analysis. "That being said, I was a bit worried because it is apparently very difficult to get significant psychophysiological interactions from event-related data – but the massive dataset was enough to bring this out." (Psychophysiological interactions analysis, or PPI, is a method used to determine whether the correlation in activity between two distant brain areas is different in different contexts – in other words, whether there is an interaction between the psychological state and the functional coupling between two brain areas.) "Again the results were very clear-cut and in line with what one would expect given the rest of the data and from how the visual system works."
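The core idea of a PPI analysis can be sketched as a regression with an interaction term. The example below is deliberately simplified and is not the analysis run in the study: it omits the hemodynamic modeling that real fMRI packages apply, and the function name, signal names, and synthetic time courses are assumptions. The target region's signal is regressed on the seed region's signal, the psychological context, and their product; the weight on the product estimates how much the coupling between the two regions changes with context.

```python
import numpy as np

def ppi_interaction_beta(seed, target, context):
    """Least-squares weight of the seed-by-context interaction term."""
    seed_c = seed - seed.mean()        # physiological regressor (centered)
    ctx_c = context - context.mean()   # psychological regressor (centered)
    design = np.column_stack([
        np.ones_like(seed_c),          # intercept
        seed_c,                        # main effect of seed activity
        ctx_c,                         # main effect of context
        seed_c * ctx_c,                # psychophysiological interaction
    ])
    betas, *_ = np.linalg.lstsq(design, target, rcond=None)
    return betas[3]

# Synthetic time courses (assumption): coupling between seed and target
# is stronger by 0.8 when the context is 1 (e.g. "visible") than when 0.
rng = np.random.default_rng(2)
context = np.tile(np.repeat([0.0, 1.0], 20), 10)   # 400 time points
seed = rng.normal(size=context.size)
target = 0.2 * seed + 0.8 * seed * context + 0.5 * rng.normal(size=context.size)

beta_ppi = ppi_interaction_beta(seed, target, context)
print(f"interaction weight: {beta_ppi:.2f}")
```

An interaction weight near zero would mean the two regions are coupled equally in both contexts, while a clearly positive value indicates stronger coupling in the context coded as 1.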

The key insight gleaned from addressing these challenges? "I'd never again use a stereo setup that has beamers not specifically made for stereo presentation," Fahrenfort quips. "Instead, I'd probably use a much simpler mirror system. Another insight is to be goal-directed when analyzing our data." The scientists initially analyzed the brain imaging dataset using BrainVoyager, but later switched to FSL because they decided to do the analysis on a group level. "The statistical mixed effects models in FSL are better because they take subject variance into account, and the spatial normalization across subjects was better and more straightforward – at least at the time. This indeed improved the analysis…but there are also many other things you can do to try and improve your analysis line. My conclusion: it's better to first make major decisions regarding analysis that seem reasonable, and then target contrasts and analyses that drive your points home, rather than trying too many things at once."

Expanding on the paper's conclusion that conscious face perception is more tightly linked to neural processes of sustained information integration and binding than to processes accommodating face category tuning, Fahrenfort says that "the dataset strongly suggests that you can get face-category tuning from feedforward computations alone. This is in line with the classical view of visual processing as a hierarchical network in which elements that are tuned to small and simple stimulus characteristics are combined to generate more complex stimulus representations. Critically, however, we show that these complex responses are not sufficient to become conscious of a face. Conscious face perception requires that face-selective cells 'reach back' to interact with earlier areas in the network that initially respond only to simple bottom-up features. This interaction in which information is integrated, or bound, is really what causes a stimulus to become conscious."

Moving forward, the team is developing new ways of enhancing the current experimental design. "In terms of analysis," Fahrenfort says, "we only looked at 'markers' of information integration, such as concurrent response enhancements, or functional connectivity, and oscillatory synchronization in the EEG – but the evidence is still circumstantial. An innovation that I would like to apply is to look at measures that more accurately capture processes of actual information integration, such as those put forward by Giulio Tononi2, showing that activations in one cortical region are caused by those in another region and vice versa."

Next, Fahrenfort continues, he'd like to more closely investigate the extent to which information integration measures explain phenomenology (subjective perception) rather than explaining physical stimulus presentation. "The experiments I've done so far were a good first step, but I intend to use better measures of information integration, and use stimulus sets that are even better at isolating changes in phenomenology while keeping physical stimulus characteristics the same."

Fahrenfort also sees the potential for other areas of research to benefit from their findings. "One area might be medicine: If we better understand what generates conscious experience, this could lead to better assessments of the effectiveness of anesthetics, and could also help assess the level of consciousness in people in comatose or vegetative states.

"Another area is computer vision, which of course is extremely challenging," Fahrenfort notes. "For robots to ultimately become as good as humans in perceiving the environment requires them to become more and more integrative – that is, using recurrent rather than feedforward architectures. I believe this will ultimately endow them with phenomenology, because I intuitively feel that information integration is deeply intertwined with phenomenology, and in fact suspect they have an identity relationship. Consequently, I'm convinced that phenomenology is not epiphenomenal." (Epiphenomenalism is the view that mental events are caused by physical events in the brain but are not functionally relevant.) "In that sense, this type of research may ultimately also be of use in philosophy."

More information: Neuronal integration in visual cortex elevates face category tuning to conscious face perception, PNAS December 26, 2012 vol. 109 no. 52 21504-21509, doi:10.1073/pnas.1207414110

Related:

1Distributed and Overlapping Representations of Faces and Objects in Ventral Temporal Cortex, Science 28 September 2001: Vol. 293 no. 5539 pp. 2425-2430, doi:10.1126/science.1063736

2An information integration theory of consciousness, BMC Neuroscience 2 November 2004, 5:42, doi:10.1186/1471-2202-5-42

Copyright 2012 Medical Xpress
