Rationality: Research shows we're not as stupid as we have been led to believe

Suppose you toss a coin and get four heads in a row – what do you think will come up on the fifth toss? Many of us have a gut feeling that a tails is due. This feeling, called the Gambler's Fallacy, can be seen in action at the roulette wheel. A long run of blacks leads to a flurry of bets on red. In fact, no matter what has gone before, red and black are always equally likely.

The example is one of many thought to demonstrate the fallibility of the human mind. Decades of psychological research have emphasised the biases and errors in human decision making. But a new approach is challenging this view – showing that people are much smarter than they've been led to believe. According to this research, the Gambler's Fallacy might not be as irrational as it seems.

Rationality has long been an important concept in the study of judgement and decision making. The highly influential work of psychologists Daniel Kahneman and Amos Tversky comprehensively showed that we often fail to make rational decisions – such as worrying about a terrorist attack but not about crossing the road.

But this failure is based on a strict interpretation of what it is to be rational – obeying the laws of logic and probability. That interpretation takes no interest in the machine that must weigh up the evidence and reach a decision. In our case, that machine is the human brain – and like any physical system, it has its limits.

Computational rationality

Although our decision making falls short of the standards required by logic and mathematics, there is still a role for rationality in understanding human cognition. The psychologist Gerd Gigerenzer has shown that while many of the heuristics we use may not be perfect, they are both useful and efficient.

But a recent approach called computational rationality goes a step further, borrowing an idea from artificial intelligence. It suggests that a system with limited abilities can still take an optimal course of action. The question becomes "What is the best outcome I can achieve with the tools I have?", as opposed to "What is the best outcome that could be achieved without any constraints at all?" For humans, this means taking things like memory capacity, attention and noisy sensory systems into account.

Computational rationality is leading to some elegant and surprising explanations of our biases and errors. One early success consistent with this approach was to examine the mathematics of random sequences like coin tosses, but under the assumption that the observer has a limited memory capacity and could only ever see sequences of finite length. A highly counterintuitive mathematical result reveals that, under these conditions, the observer will have to wait longer for some sequences to arise than others – even with a perfectly fair coin.
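The waiting-time result can be checked with a short simulation. The sketch below is only an illustration, not the analysis used in the research: the function name `mean_wait`, the number of trials and the fixed seed are all assumptions made for the example. It tosses a simulated fair coin until a given four-toss pattern first appears and averages the number of tosses needed.

```python
import random


def mean_wait(pattern: str, trials: int = 20_000, seed: int = 0) -> float:
    """Estimate the average number of fair-coin tosses until `pattern` first appears.

    Hypothetical helper for illustration; `trials` and `seed` are arbitrary choices.
    """
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    total = 0
    for _ in range(trials):
        recent = ""  # the most recent tosses, at most len(pattern) of them
        tosses = 0
        while recent != pattern:
            # Toss once and keep only the last len(pattern) outcomes.
            recent = (recent + rng.choice("HT"))[-len(pattern):]
            tosses += 1
        total += tosses
    return total / trials


if __name__ == "__main__":
    for pattern in ("HHHH", "HHHT"):
        print(pattern, round(mean_wait(pattern), 1))
```

For a fair coin, the theoretical expected wait is 30 tosses for "HHHH" and 16 for "HHHT", so the simulated averages should land close to those values. The difference arises because a failed run of heads has to start again from scratch, whereas a run of three heads followed by yet another head still leaves "HHHT" one toss away.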

The upshot is that for a finite set of coin tosses, the sequences we intuitively feel to be less random are precisely the ones that are least likely to occur. Imagine a sliding window that can only "see" four coin tosses at a time (roughly the size of our memory capacity) while going through a series of results – say from 20 coin tosses. The mathematics show that the contents of that window will hold "HHHT" more often than "HHHH" ("H" and "T" stand for heads and tails). That's why we think tails will come after three heads in a row when tossing a coin – demonstrating that humans do make sensible use of the information we observe. If we had unlimited memory, however, we would think differently.
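One way to make the sliding-window claim concrete is to compute, exactly, the chance that a pattern shows up at least once in a short series of tosses. The sketch below is an illustration under stated assumptions rather than the original analysis: it reads "more often" as "more likely to appear at least once", and the function name `prob_pattern_appears` is invented for the example. It counts the sequences that never contain the pattern and subtracts their share from 1.

```python
from itertools import product


def prob_pattern_appears(pattern: str, n: int) -> float:
    """Exact probability that `pattern` occurs at least once in n fair tosses.

    Hypothetical helper for illustration. It counts pattern-free sequences by
    tracking only the last len(pattern) - 1 tosses of each one.
    """
    k = len(pattern)
    if n < k:
        return 0.0
    # avoid[s]: number of pattern-free sequences so far that end in suffix s.
    avoid = {"".join(p): 1 for p in product("HT", repeat=k - 1)}
    for _ in range(n - (k - 1)):
        nxt = dict.fromkeys(avoid, 0)
        for suffix, count in avoid.items():
            for toss in "HT":
                window = suffix + toss  # what the four-toss window would show
                if window != pattern:
                    nxt[window[1:]] += count
        avoid = nxt
    return 1 - sum(avoid.values()) / 2 ** n


if __name__ == "__main__":
    for pattern in ("HHHH", "HHHT"):
        print(pattern, prob_pattern_appears(pattern, 20))
```

With only five tosses, 3 of the 32 possible sequences contain "HHHH" but 4 of them contain "HHHT", and the gap widens as the series gets longer. Each individual window is still equally likely to show either pattern. The difference is that occurrences of "HHHH" overlap and bunch together, so fewer distinct series contain it at all.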

There are many other examples of this kind, where the optimal solution, once cognitive limitations are taken into account, is surprising. Our recent work shows that inconsistent preferences – a cornerstone of supposed human irrationality – are actually useful when you are unsure about the value of options available to you. Traditional economic rationality suggests that a bad option that you would never choose (from a menu, say) should not have any effect on which of the good options you do choose. But our analysis shows that bad, and supposedly irrelevant, options allow you to get a more accurate estimate of how good the remaining alternatives are.

Others have shown that the availability bias, where we overestimate the probability of rare events such as plane crashes, results from a highly efficient way of processing the possible outcomes of a decision. In short, given that we only have a finite amount of time to make a decision, it is optimal to make sure that the most critical outcomes are considered.

A deeper understanding

The perception that we are irrational is one unfortunate side effect of the ever-growing catalogue of human decision-making biases. But when we apply computational rationality, these biases aren't seen as evidence of failures, but as windows on to how the brain is solving complex problems, often very efficiently.

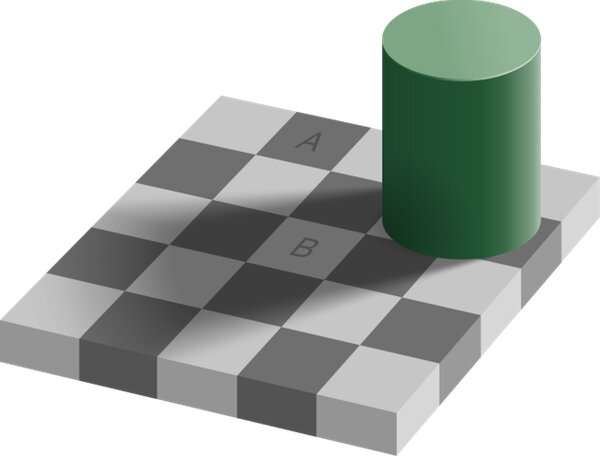

This way of thinking about decision making is more akin to how vision scientists think about visual illusions. Take a look at the picture above. The fact that the A and B squares appear to be different shades (they aren't) doesn't mean that your visual system is faulty, rather that it is making a sensible inference given the context.

Computational rationality leads to a deeper understanding because it goes beyond descriptions of how we fail. Instead, it shows us how the brain marshals its resources to solve problems. One benefit of this approach is the ability to test theories of what our abilities and constraints are.

For example, we have recently shown that people with autism are less prone to some decision-making biases. So we are now exploring whether altered levels of neural noise (electrical fluctuations in networks of brain cells), a feature of autism, could cause this.

With more insight into the strategies the brain uses, we might be able to tailor information in a way that helps people. We have tested what people learn from observing a long random sequence. Those who viewed a sequence divided into short chunks (as we typically would in everyday life) did not benefit at all, but those who viewed the same sequence divided into much longer chunks rapidly improved in their ability to recognise randomness.

So the next time you hear people characterised as irrational, you may want to point out that this is only in comparison to a system that has unlimited resources and abilities. With that in mind, we are really not so dumb after all.

This article is republished from The Conversation under a Creative Commons license. Read the original article.