When it comes to hearing words, it's a division of labor between our brain's two hemispheres

Scientists have uncovered a new "division of labor" between our brain's two hemispheres in how we comprehend the words and other sounds we hear—a finding that offers new insights into the processing of speech and points to ways to address auditory disorders.

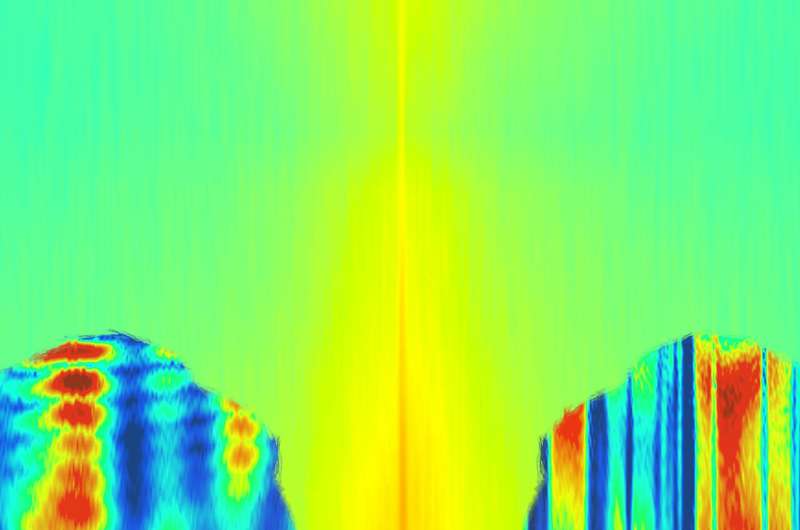

"Our findings point to a new way to think about the division of labor between the right and left hemispheres," says Adeen Flinker, the study's lead author and an assistant professor in the Department of Neurology at NYU School of Medicine. "While both hemispheres perform overlapping roles when we listen, the left hemisphere gauges how sounds change in time—for example when speaking at slower or faster rates—while the right is more attuned to changes in frequency, resulting in alterations in pitch."

Clinical observations dating back to the 19th century have shown that damage to the left, but not right, hemisphere impairs language processing. While researchers have offered an array of hypotheses on the roles of the left and right hemispheres in speech, language, and other aspects of cognition, the neural mechanisms underlying cerebral asymmetries remain debated.

In the study, which appears in the journal Nature Human Behaviour, the researchers sought to elucidate the mechanisms underlying speech processing, with the larger aims of advancing our understanding of how speech is analyzed and of improving diagnostic and treatment tools for language disorders.

To do so, they created new tools to manipulate recorded speech, then used these recordings in a set of five experiments spanning behavioral tests and two types of brain recording. They used magnetoencephalography (MEG), which measures the tiny magnetic fields generated by brain activity, and electrocorticography (ECoG), which records activity directly from within the brain in volunteer surgical patients.

"We hope this approach will provide a framework to highlight the similarities and differences between human and non-human processing of communication signals," adds Flinker. "Furthermore, the techniques we provide to the scientific community may help develop new training procedures for individuals suffering from damage to one hemisphere."

More information: Spectrotemporal modulation provides a unifying framework for auditory cortical asymmetries, Nature Human Behaviour (2019). DOI: 10.1038/s41562-019-0548-z, www.nature.com/articles/s41562-019-0548-z