Perfecting pitch perception

New research from MIT neuroscientists suggests that natural soundscapes have shaped our sense of hearing, optimizing it for the kinds of sounds we most often encounter.

In a study reported Dec. 14 in the journal Nature Communications, researchers led by McGovern Institute for Brain Research associate investigator Josh McDermott used computational modeling to explore factors that influence how humans hear pitch. Their model's pitch perception closely resembled that of humans—but only when it was trained using music, voices, or other naturalistic sounds.

Humans' ability to recognize pitch (essentially, the rate at which a sound repeats) gives melody to music and nuance to spoken language. Although this is arguably the best-studied aspect of human hearing, researchers are still debating which factors determine the properties of pitch perception, and why it is more acute for some types of sounds than others. McDermott is also an associate professor in MIT's Department of Brain and Cognitive Sciences and an investigator with the Center for Brains, Minds, and Machines (CBMM) at MIT. He is particularly interested in understanding how our nervous system perceives pitch because cochlear implants, which send electrical signals about sound to the brain in people with profound deafness, don't replicate this aspect of human hearing very well.

"Cochlear implants can do a pretty good job of helping people understand speech, especially if they're in a quiet environment. But they really don't reproduce the percept of pitch very well," says Mark Saddler, a graduate student and CBMM researcher who co-led the project and an inaugural graduate fellow of the K. Lisa Yang Integrative Computational Neuroscience Center. "One of the reasons it's important to understand the detailed basis of pitch perception in people with normal hearing is to try to get better insights into how we would reproduce that artificially in a prosthesis."

Artificial hearing

Pitch perception begins in the cochlea, the snail-shaped structure in the inner ear where vibrations from sounds are transformed into electrical signals and relayed to the brain via the auditory nerve. The cochlea's structure and function help determine how and what we hear. And although it hasn't been possible to test this idea experimentally, McDermott's team suspected our "auditory diet" might shape our hearing as well.

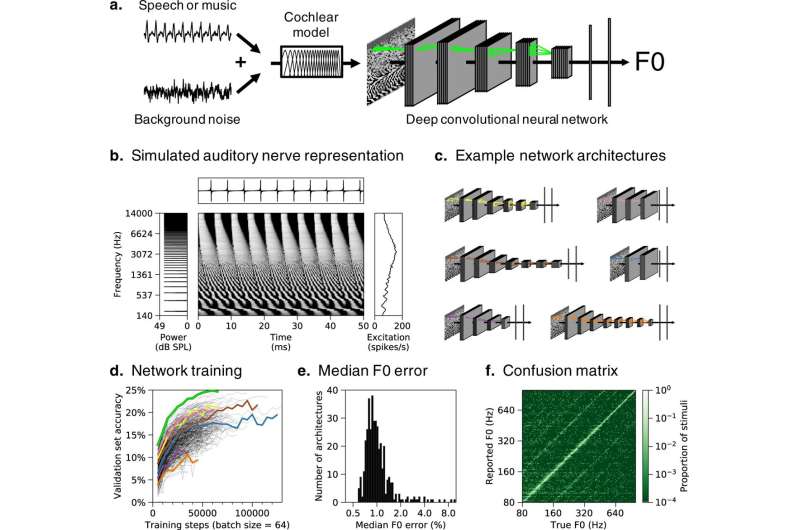

To explore how both our ears and our environment influence pitch perception, McDermott, Saddler, and research assistant Ray Gonzalez built a computer model called a deep neural network. Neural networks are a type of machine learning model widely used in automatic speech recognition and other artificial intelligence applications. Although the structure of an artificial neural network coarsely resembles the connectivity of neurons in the brain, the models used in engineering applications don't actually hear the same way humans do, so the team developed a new model to reproduce human pitch perception. Their approach combined an artificial neural network with an existing model of the mammalian ear, uniting the power of machine learning with insights from biology. "These new machine-learning models are really the first that can be trained to do complex auditory tasks and actually do them well, at human levels of performance," Saddler explains.

The researchers trained the neural network to estimate pitch by asking it to identify the repetition rate of sounds in a training set. This gave them the flexibility to change the parameters under which pitch perception developed. They could manipulate the types of sound they presented to the model, as well as the properties of the ear that processed those sounds before passing them on to the neural network.
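To make "identifying the repetition rate of a sound" concrete, here is a minimal Python sketch of the task itself. It is not the team's deep neural network or ear model: it swaps in classic autocorrelation, a much simpler textbook technique, and every name and parameter in it (`harmonic_tone`, `estimate_repetition_rate`, the 8 kHz sample rate, the 120 to 500 Hz search range) is a hypothetical choice made for illustration.

```python
import math
import random


def harmonic_tone(f0, sample_rate=8000, duration=0.05,
                  n_harmonics=5, noise_std=0.0, seed=0):
    """Synthesize a tone that repeats f0 times per second.

    Illustrative stimulus only: a sum of equal-amplitude harmonics
    plus optional Gaussian background noise.
    """
    rng = random.Random(seed)
    n_samples = int(sample_rate * duration)
    samples = []
    for i in range(n_samples):
        t = i / sample_rate
        value = sum(math.sin(2 * math.pi * f0 * k * t)
                    for k in range(1, n_harmonics + 1))
        samples.append(value + rng.gauss(0.0, noise_std))
    return samples


def estimate_repetition_rate(samples, sample_rate=8000,
                             min_hz=120.0, max_hz=500.0):
    """Estimate the repetition rate with autocorrelation.

    The lag at which the signal best matches a shifted copy of itself
    is taken as one period. The search range is an assumption; a real
    pitch estimator would also need to guard against octave errors.
    """
    min_lag = int(sample_rate / max_hz)
    max_lag = int(sample_rate / min_hz)
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        overlap = len(samples) - lag
        # Average product of the signal with itself shifted by `lag`.
        score = sum(samples[i] * samples[i + lag]
                    for i in range(overlap)) / overlap
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


clean = harmonic_tone(200.0)
noisy = harmonic_tone(200.0, noise_std=0.5, seed=1)
print("clean tone estimate (Hz):", estimate_repetition_rate(clean))
print("noisy tone estimate (Hz):", estimate_repetition_rate(noisy))
```

The two knobs the researchers describe map loosely onto this toy: the stimulus generator stands in for the choice of training sounds, and `noise_std` stands in for the presence or absence of background noise. The sketch has no learning step and no cochlear front end, so it says nothing about the study's results.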

When the model was trained using sounds that are important to humans, like speech and music, it learned to estimate pitch much as humans do. "We very nicely replicated many characteristics of human perception … suggesting that it's using similar cues from the sounds and the cochlear representation to do the task," Saddler says.

But when the model was trained using more artificial sounds or in the absence of any background noise, its behavior was very different. For example, Saddler says, "If you optimize for this idealized world where there's never any competing sources of noise, you can learn a pitch strategy that seems to be very different from that of humans, which suggests that perhaps the human pitch system was really optimized to deal with cases where sometimes noise is obscuring parts of the sound."

The team also found the timing of nerve signals initiated in the cochlea to be critical to pitch perception. In a healthy cochlea, McDermott explains, nerve cells fire precisely in time with the sound vibrations that reach the inner ear. When the researchers skewed this relationship in their model, so that the timing of nerve signals was less tightly correlated to vibrations produced by incoming sounds, pitch perception deviated from normal human hearing.
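One way to picture "less tightly correlated" timing is with vector strength, a standard measure of how closely spikes lock to the phase of a periodic sound (1 means perfect locking, values near 0 mean none). The Python sketch below is an illustration only and is not how the study degraded timing in its ear model: the function names, the one-spike-per-cycle assumption, and the Gaussian jitter values are all hypothetical.

```python
import math
import random


def phase_locked_spikes(freq_hz, n_cycles, jitter_std_s, seed=0):
    """Toy spike train: one spike per cycle of the sound, with each
    spike time blurred by Gaussian jitter (an assumed noise model)."""
    rng = random.Random(seed)
    period = 1.0 / freq_hz
    return [k * period + rng.gauss(0.0, jitter_std_s)
            for k in range(n_cycles)]


def vector_strength(spike_times, freq_hz):
    """Length of the mean unit vector of spike phases.

    Each spike is mapped to its phase within the sound's cycle; tightly
    clustered phases give a value near 1, scattered phases near 0.
    """
    phases = [2 * math.pi * freq_hz * t for t in spike_times]
    mean_cos = sum(math.cos(p) for p in phases) / len(phases)
    mean_sin = sum(math.sin(p) for p in phases) / len(phases)
    return math.hypot(mean_cos, mean_sin)


# 200 Hz tone: 0.1 ms of jitter versus 2 ms of jitter (hypothetical values).
tight = phase_locked_spikes(200.0, n_cycles=500, jitter_std_s=0.0001)
loose = phase_locked_spikes(200.0, n_cycles=500, jitter_std_s=0.002)
print("precise timing:", vector_strength(tight, 200.0))
print("degraded timing:", vector_strength(loose, 200.0))
```

When the jitter approaches the length of one cycle (5 ms at 200 Hz), the spike phases spread around the whole cycle and the timing carries little information about the sound's repetition rate.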

McDermott says it will be important to take this into account as researchers work to develop better cochlear implants. "It does very much suggest that for cochlear implants to produce normal pitch perception, there needs to be a way to reproduce the fine-grained timing information in the auditory nerve," he says. "Right now, they don't do that, and there are technical challenges to making that happen—but the modeling results really pretty clearly suggest that's what you've got to do."

More information: Mark R. Saddler et al., Deep neural network models reveal interplay of peripheral coding and stimulus statistics in pitch perception, Nature Communications (2021). DOI: 10.1038/s41467-021-27366-6

This story is republished courtesy of MIT News (web.mit.edu/newsoffice/), a popular site that covers news about MIT research, innovation and teaching.