
New AI tool identifies factors that predict the reproducibility of psychology research

The replication success of psychology research is linked to research methods, authors' citation impact and social media coverage, but not to university prestige or the number of citations a paper receives, according to a new study involving UCL researchers.

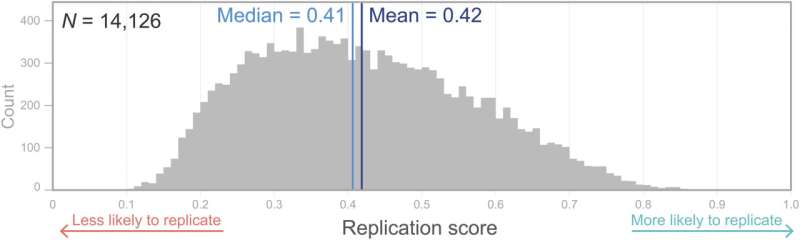

Published in the journal Proceedings of the National Academy of Sciences (PNAS), the study uses a validated, text-based machine learning model to estimate the likelihood of successful replication for more than 14,100 psychology research articles published since 2000 in six top-tier journals.

Undertaken in partnership with the University of Notre Dame and Northwestern University in the U.S., the study identifies several factors that increase the likelihood of research replicability, that is, the likelihood that a study repeated with the same methods would produce the same results.

Overall, the authors found that experimental studies were significantly less replicable than non-experimental studies across all subfields of psychology. Mean replication scores (the model's estimate of the relative likelihood of replication success) were 0.50 for non-experimental papers and 0.39 for experimental papers, meaning that non-experimental papers are about 1.3 times as likely to replicate.
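The "about 1.3 times" figure can be checked by dividing the two reported mean scores. This is a quick worked check, not the authors' own calculation. It assumes the comparison is a simple ratio of the two means, and the symbol $\bar{s}$ for a group's mean replication score is notation introduced here for illustration only:

$$
\frac{\bar{s}_{\text{non-experimental}}}{\bar{s}_{\text{experimental}}} = \frac{0.50}{0.39} \approx 1.28 \approx 1.3
$$

Read this way, the non-experimental mean is roughly 28% higher than the experimental mean, rather than 130% higher.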

The authors say that this finding is worrying, given that psychology's strong scientific reputation is at least partly built on its proficiency with experiments.

The study also shows that an author's cumulative publication count and citation impact were positively related to replication success. However, other proxies of research quality and rigor, such as the prestige of an author's university and the number of citations a paper receives, were unrelated to replicability.

Predicted replication rates were also found to vary among psychology subfields (clinical psychology, cognitive psychology, developmental psychology, organizational psychology, personality psychology, and social psychology). The authors conclude that due to such variation within psychology, and within its subfields, using a single metric to characterize the whole field's replicability is likely insufficient.

The study also identified factors that were negatively correlated with the likelihood of replication, most notably media attention. The authors speculate that this is because the media are more likely to report on unusual or unexpected findings.

The authors say that the study could help to address widespread concern about weak replicability in the social sciences, particularly psychology, and strengthen the field as a whole.

Study co-author Dr. Youyou Wu (IOE, UCL's Faculty of Education & Society) said, "Replicability is a problem faced across the social sciences, and in psychology in particular—and the number of manually replicated studies falls well below the abundance of important studies that the scientific community would like to see replicated, given time and resource constraints.

"Our results could help develop new strategies for testing a scientific literature's overall replicability, self-assessing research prior to journal submission—as well as training peer reviewers."

More information: Wu Youyou et al, A discipline-wide investigation of the replicability of Psychology papers over the past two decades, Proceedings of the National Academy of Sciences (2023). DOI: 10.1073/pnas.2208863120