November 15, 2023 feature

Study unveils similarities between the auditory pathway and deep learning models for processing speech

The human auditory pathway is a highly sophisticated biological system that includes both physical structures and brain regions specialized in the perception and processing of sounds. The sounds that humans pick up through their ears are processed in various brain regions, including the cochlear and superior olivary nuclei, the lateral lemniscus, the inferior colliculus and the auditory cortex.

Over the past few decades, computer scientists have developed increasingly advanced computational models that can process sounds and speech, artificially replicating some functions of the human auditory pathway. Some of these models have achieved remarkable results and are now widely used, for instance enabling voice assistants such as Alexa and Siri to understand users' requests.

Researchers at the University of California, San Francisco, recently set out to compare these models with the human auditory pathway. Their paper, published in Nature Neuroscience, revealed striking similarities between how deep neural networks and the biological auditory pathway process speech.

"AI speech models have become very good in recent years because of deep learning in computers," Edward F. Chang, one of the authors of the paper, told Medical Xpress. "We were interested to see if what the models learn is similar to how the human brain processes speech."

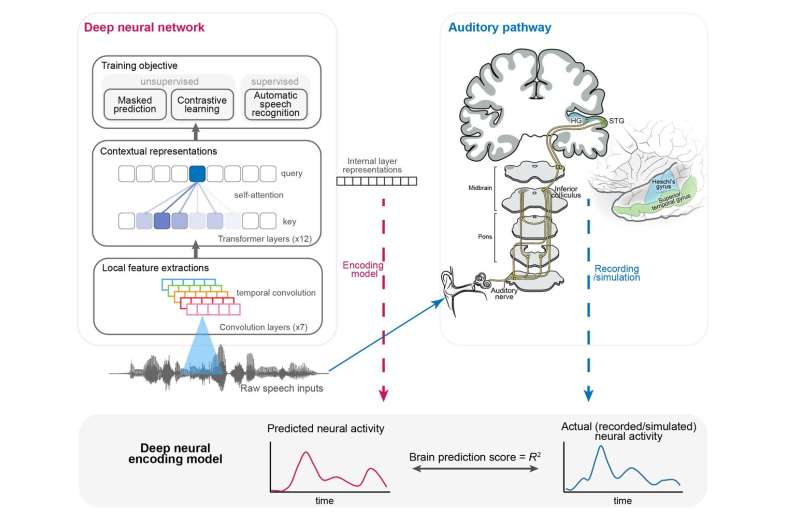

To compare deep neural networks to the human auditory pathway, the researchers first looked at the speech representations produced by the models, that is, the ways in which the models encode speech across their different layers.

Chang and his colleagues then compared these representations with the activity in the different brain regions associated with sound processing. Remarkably, they found that the two were correlated, pointing to possible similarities between artificial and biological speech processing.

"We used several commercial deep learning models of speech and compared how the artificial neurons in those models compared to real neurons in the brain," Chang explained. "We compared how speech signals are processed across the different layers, or processing stations, in the neural network, and directly compared those to processing across different brain areas."
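The article does not detail how model representations were compared with brain activity. One common approach in this line of research is a linear encoding model: for each network layer, a regularized regression maps that layer's activations to each recording site's response, and prediction accuracy on held-out data (for example, the Pearson correlation) indicates how well the layer accounts for that site. The Python sketch below illustrates the idea on synthetic data only. The function names (`fit_ridge`, `encoding_score`), the ridge penalty, the train/test split and the absence of time lags are illustrative assumptions, not the study's actual pipeline.

```python
import numpy as np


def fit_ridge(X, Y, alpha):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


def pearson_per_column(A, B):
    """Pearson correlation between matching columns of A and B."""
    A = A - A.mean(axis=0)
    B = B - B.mean(axis=0)
    return (A * B).sum(axis=0) / np.sqrt((A ** 2).sum(axis=0) * (B ** 2).sum(axis=0))


def encoding_score(layer_features, neural_responses, alpha=1.0, train_frac=0.8):
    """Hypothetical helper: fit on the first part of the data, score on the rest.

    Returns one held-out correlation per recording site (column of responses).
    """
    split = int(layer_features.shape[0] * train_frac)
    # Standardize features and center responses using training statistics only,
    # which also removes the need for an explicit intercept term.
    mu = layer_features[:split].mean(axis=0)
    sd = layer_features[:split].std(axis=0) + 1e-8
    X = (layer_features - mu) / sd
    Y = neural_responses - neural_responses[:split].mean(axis=0)
    W = fit_ridge(X[:split], Y[:split], alpha)
    predicted = X[split:] @ W
    return pearson_per_column(predicted, Y[split:])


# Synthetic demo: three simulated "layers"; only layer 1 drives the simulated responses.
rng = np.random.default_rng(0)
n_time, n_units, n_sites = 2000, 64, 10
layers = [rng.standard_normal((n_time, n_units)) for _ in range(3)]
true_weights = rng.standard_normal((n_units, n_sites)) / np.sqrt(n_units)
responses = layers[1] @ true_weights + rng.standard_normal((n_time, n_sites))

# Average held-out correlation across sites, one score per layer.
scores = [float(encoding_score(features, responses).mean()) for features in layers]
print(scores)
```

In this toy setup only the second simulated layer drives the responses, so it should receive the highest score. Applied to real recordings, the same per-layer comparison is what allows researchers to ask which network layers best match which brain areas; real analyses typically also add time-lagged features and choose the regularization strength by cross-validation.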

The researchers also found that models trained to process speech in either English or Mandarin could predict brain responses in native speakers of the corresponding language. This suggests that these models process speech in ways similar to the human brain, including by encoding language-specific information.

"AI models that capture context and learn the important statistical properties of speech sounds do well at predicting brain responses," Chang said. "In fact, they are better than traditional linguistic models. The implication is that there is huge potential to harness AI to understand the human brain in the coming years."

The recent work by Chang and his collaborators improves the general understanding of deep neural networks designed to decode human speech, showing that they might be more like the biological auditory system than researchers had anticipated. In the future, it could guide the development of further computational techniques designed to artificially reproduce the neural underpinnings of audition.

"We are now trying to understand how the AI models can be redesigned to better understand the brain. Right now, we are just getting started and there is so much to learn," said Chang.

More information: Yuanning Li et al, Dissecting neural computations in the human auditory pathway using deep neural networks for speech, Nature Neuroscience (2023). DOI: 10.1038/s41593-023-01468-4

© 2023 Science X Network