Study explores how individual neurons allow us to understand the sounds of speech

In a first-of-its-kind study published in Nature, researchers in the lab of Edward Chang, MD, at the University of California, San Francisco (UCSF) recorded activity from hundreds of individual neurons while participants listened to spoken sentences, giving us an unprecedented view into how the brain analyzes the sounds in words.

"Neurons are a fundamental unit of computation in the brain," said Matthew Leonard, Ph.D., associate professor of neurological surgery and co-first author of the study. "We also know that neurons in the cortex exist in a complex structure of densely interconnected layers. To really understand how the brain processes speech, we need to be able to observe lots of neurons from across these layers."

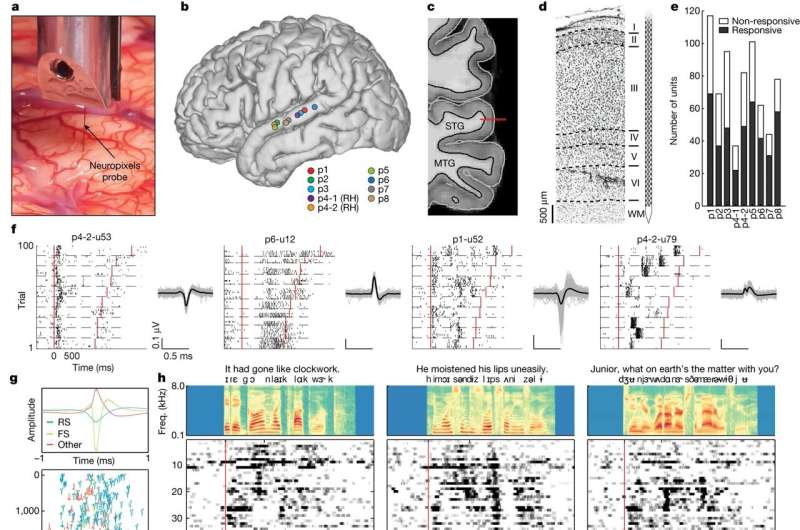

To achieve this, the researchers used a state-of-the-art neural recording technology called Neuropixels probes, which allowed them to 'listen in' on hundreds of individual neurons throughout the depth of the cortex, something that was previously not possible. Over the last five years, Neuropixels probes have revolutionized neuroscientific studies in animals, but they have only now been adapted for humans.

Chang, chair of neurological surgery at UCSF and senior author of the study, used the Neuropixels technology to map the superior temporal gyrus (STG), a brain region known to be critical for the auditory perception of speech, in eight study participants who were undergoing awake brain surgery for epilepsy or brain tumors. The probe is the length and width of an eyelash, yet packs nearly 1,000 electrodes onto a minuscule silicon chip that can traverse the 5-mm depth of the cortex. The researchers recorded from a total of 685 neurons at nine sites spread across all cortical layers of the STG.

For Chang, whose lab studies how speech is organized in the brain, this technology offered an opportunity to refine the lab's speech maps down to the level of individual brain cells. Previous studies of the human brain have relied on more familiar technologies such as fMRI, which measures blood oxygenation levels, and direct recordings from the cortical surface (called electrocorticography), in which an electrode grid records aggregate signals from large populations of neurons.

"As useful as previous approaches have been, they still record from tens of thousands of neurons in a combination that we don't really understand," said Leonard. "We don't know what activity looks like in the individual cells that make up that aggregate signal."

A 3D image of speech

The researchers were also interested in characterizing how cells beneath the cortical surface were organized relative to their function. "Within a single spot in the cortex, are most of the neurons doing the same thing, or are there neurons that respond to different types of speech sounds?" said Laura Gwilliams, Ph.D., co-first author of the study. Gwilliams, a former postdoctoral scholar at UCSF, is now an assistant professor of psychology at Stanford University and faculty scholar at the Wu Tsai Neurosciences Institute and Stanford Data Science.

The Neuropixels recordings from participants in this study showed that individual neurons had preferences for certain speech sounds. Some responded to parts of vowels, others to consonants, and some preferred silence. Still others responded not to what was said but to how it was said, for example, to speech sounds produced at a higher pitch for emphasis.

Generally, neurons across the cortical layers formed columns that responded to a particular type of speech sound. The researchers were able to show that this dominant tuning pattern relates to the aggregate signal recorded from the surface of the brain using electrocorticography. "The maps that we have been able to put together across the 2-dimensional surface of the cortex seem to generally reflect what the neurons underneath that surface are doing," said Chang.

However, the researchers were surprised to find that within these distinct patches, not all neurons played the same role. For example, a site where a majority of neurons were tuned to a dominant feature such as vowels like "ee" or "ae" also contained neurons that were tuned to the pitch of the voice, as well as neurons sensitive to how loud the speech was.

"The fact that each column contained neurons that had different functions shows that very small areas of the brain compute lots of different information about spoken language," said Leonard.

This physical proximity of neurons with different behaviors may be what makes it possible for us to understand speech so effortlessly and instantaneously. It also shows that the architecture of the cortex itself, its layered structure, is important for understanding the computations that give rise to our ability to understand speech. "We are beginning to see how there's this crucial third dimension of the cortex that contains all kinds of rich variability, which has been largely invisible to scientists until now," said Gwilliams.
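The relationship described in this section (a column's aggregate signal tracks the tuning of its majority of neurons, while minority neurons tuned to other features stay hidden) can be illustrated with a toy model. This is a minimal sketch under loudly stated assumptions, not the study's analysis: the feature names, the response values (1.0 for a neuron's preferred feature, 0.1 otherwise), the column compositions, and the helper functions `neuron_response`, `aggregate_response`, and `dominant_feature` are all hypothetical, and a plain sum of responses stands in for a surface (electrocorticography-like) signal.

```python
# Toy model only: every name and number below is a hypothetical illustration,
# not a value or method taken from the study.
FEATURES = ["vowel", "consonant", "pitch", "loudness"]
PREFERRED_GAIN = 1.0  # assumed response to a neuron's preferred feature
BASELINE = 0.1        # assumed weak response to every other feature


def neuron_response(preferred: str, stimulus: str) -> float:
    """Response of one toy neuron to a stimulus that carries a single feature."""
    return PREFERRED_GAIN if preferred == stimulus else BASELINE


def aggregate_response(column: list[str]) -> dict[str, float]:
    """Sum all single-neuron responses per stimulus feature.

    The plain sum is a crude stand-in for an aggregate surface recording.
    """
    return {
        stim: sum(neuron_response(pref, stim) for pref in column)
        for stim in FEATURES
    }


def dominant_feature(column: list[str]) -> str:
    """Feature that drives the largest aggregate response for this column."""
    agg = aggregate_response(column)
    return max(agg, key=agg.get)


# Hypothetical column: mostly vowel-tuned, plus a few pitch- and loudness-tuned neurons.
column_mixed = ["vowel"] * 7 + ["pitch"] * 2 + ["loudness"]
# Hypothetical column in which every neuron is vowel-tuned.
column_uniform = ["vowel"] * 10

# From the aggregate signal alone, both columns look "vowel-tuned" ...
print(dominant_feature(column_mixed), dominant_feature(column_uniform))  # prints: vowel vowel
# ... even though only one of them contains neurons tuned to other features.
print(sorted(set(column_mixed)), sorted(set(column_uniform)))
```

In this toy setting, the summed signal cannot tell the two columns apart, which is the kind of within-column diversity that single-neuron recordings make visible.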

What makes us human

A central question for contemporary neuroscience is how the human brain gives us abilities that are unique in nature: how we communicate our thoughts and desires to one another, and how we imagine scenarios or understand a different point of view. Learning how individual neurons behave and form circuits under different conditions offers all sorts of exciting possibilities for how we understand ourselves.

More detailed studies of language may also help us understand disorders that affect our ability to speak, and help us develop more sophisticated brain-machine interfaces to restore speech to people paralyzed by stroke or injury.

"With these new tools we can start to answer fundamental questions about how the human brain works from hundreds and thousands of single cells," says Chang. "This is critical for understanding behaviors like speech, and will someday allow us to tackle big questions about what makes us human."

More information: Matthew K. Leonard et al., Large-scale single-neuron speech sound encoding across the depth of human cortex, Nature (2023). DOI: 10.1038/s41586-023-06839-2