What's coming next? Scientists identify how the brain predicts speech

An international collaboration of neuroscientists has shed light on how the brain helps us to predict what is coming next in speech.

In the study, publishing on April 25 in the open access journal PLOS Biology, scientists from Newcastle University, UK, and a neurosurgery group at the University of Iowa, USA, report that they have discovered mechanisms in the brain's auditory cortex that are involved in processing speech and predicting upcoming words, and that have remained essentially unchanged throughout evolution. Their research reveals how individual neurons coordinate with neural populations to anticipate events, a process that is impaired in many neurological and psychiatric disorders such as dyslexia, schizophrenia and Attention Deficit Hyperactivity Disorder (ADHD).

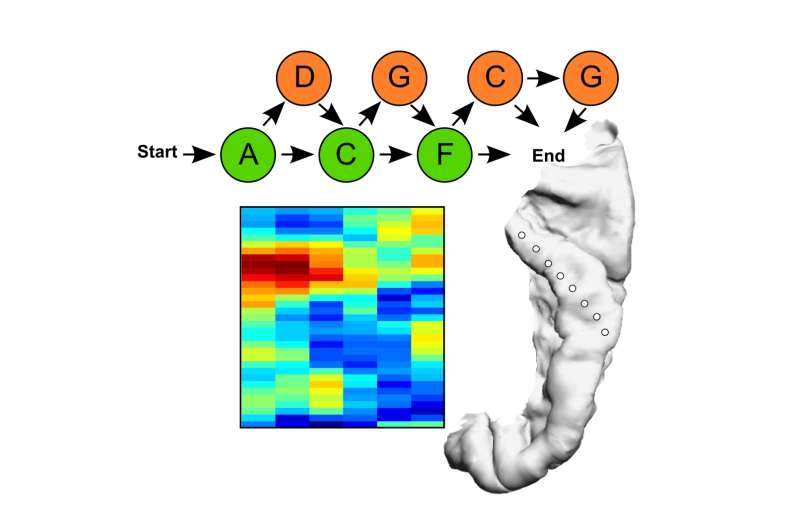

Using an approach first developed for studying infant language learning, the team of neuroscientists led by Dr Yuki Kikuchi and Prof Chris Petkov of Newcastle University had humans and monkeys listen to sequences of spoken words from a made-up language. Both species were able to learn the predictive relationships between the spoken sounds in the sequences.

Neural responses from the auditory cortex in the two species revealed how populations of neurons responded to the speech sounds and to the learned predictive relationships between the sounds. The neural responses were found to be remarkably similar in both species, suggesting that the way the human auditory cortex responds to speech harnesses evolutionarily conserved mechanisms, rather than those that have uniquely specialized in humans for speech or language.

"Being able to predict events is vital for so much of what we do every day," Professor Petkov notes. "Now that we know humans and monkeys share the ability to predict speech, we can apply this knowledge to take forward research to improve our understanding of the human brain."

Dr Kikuchi elaborates, "In effect we have discovered the mechanisms for speech in your brain that work like predictive text on your mobile phone, anticipating what you are going to hear next. This could help us better understand what is happening when the brain fails to make fundamental predictions, such as in people with dementia or after a stroke."

Building on these results, the team are working on projects that use insights into the brain's predictive signals to develop new models for studying how these signals go wrong in patients with stroke or dementia. The long-term goal is to identify strategies that yield more accurate prognoses and treatments for these patients.

More information: Kikuchi Y, Attaheri A, Wilson B, Rhone AE, Nourski KV, Gander PE, et al. (2017) Sequence learning modulates neural responses and oscillatory coupling in human and monkey auditory cortex. PLoS Biol 15(4): e2000219. DOI: 10.1371/journal.pbio.2000219