Decoding movement and speech from the brain of a tetraplegic person

New research identifies a brain region where both imagined hand grasps and spoken words can be decoded, indicating a promising candidate region for brain implants for neuroprosthetic applications.

Every year, the lives of hundreds of thousands of people are severely disrupted when they lose the ability to move or speak as a result of spinal injury, stroke, or neurological diseases. At Caltech, neuroscientists in the laboratory of Richard Andersen, James G. Boswell Professor of Neuroscience, and Leadership Chair and Director of the Tianqiao & Chrissy Chen Brain-Machine Interface Center, are studying how the brain encodes movements and speech, in order to potentially restore these functions to those individuals who have lost them.

Brain-machine interfaces (BMIs) are devices that record brain signals and interpret them to issue commands that operate external assistive devices, such as computers or robotic limbs. Thus, an individual can control such machinery just with their thoughts. For example, in 2015, the Andersen team and colleagues worked with a tetraplegic participant to implant recording electrodes into a part of the brain that forms intentions to move. The BMI enabled the participant to direct a robotic limb to reach out and grasp a cup, just by thinking about those actions.

Now, new research from the Andersen lab has identified a region of the brain, called the supramarginal gyrus (SMG), that codes for both grasping movements and speech—indicating a promising candidate region for the implantation of more efficient BMIs that can control multiple types of prosthetics in both the grasp and speech domains.

The research is described in a paper that appears in the journal Neuron on March 31. Sarah Wandelt, who is a graduate student in the computation and neural systems program at Caltech, is the study's first author.

The exact location in the brain where electrodes are implanted affects BMI performance and what the device can interpret from brain signals. In the previously mentioned 2015 study, the laboratory discovered that BMIs are able to decode motor intentions while a movement is being planned, and thus before the onset of that action, if they are reading signals from a high-level brain region that governs intentions: the posterior parietal cortex (PPC). Electrode implants in this area, then, could lead to control of a much larger repertoire of movements than implants in more specialized motor areas of the brain.

Because it can decode intention and translate it into movement, an implant in the PPC requires only that a patient think about the desire to grasp an object, rather than having to envision each of the precise movements involved in grasping: opening the hand, unfolding each finger, placing the hand around an object, closing each finger, and so on.

"When you reach out to grab a cup of water, you don't consciously think about the precise grasping movements involved—you simply think about your desired action, and your more specialized motor areas do the rest," says Wandelt. "Our goal is to find brain regions that can be easily decoded by a BMI to produce whole-grasp movements, or even speech, as we discovered in this study."

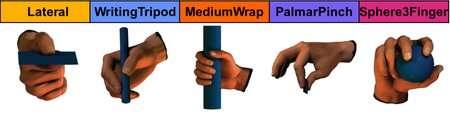

The SMG is a region within the PPC. Previous studies with nonhuman primates and functional magnetic resonance imaging (fMRI) conducted with humans indicated that the SMG was activated for grasping movements. In this new research, the team implanted an array of microelectrodes into the SMG of a tetraplegic participant and then measured brain activity as the person imagined making grasping movements. The electrode array implant, which has 96 recording electrodes on a 4.4-by-4.2-millimeter chip, smaller than a thumbnail, measured activity from 40 to 50 neurons during each recording session. The study showed for the first time that populations of individual neurons in human SMG encode a variety of imagined grasps.

Next, the team followed up on previous research suggesting that the SMG is involved in language processes to see if speech could be decoded from the neural signals recorded from the implant. The researchers asked the participant to speak specific words and simultaneously measured SMG activity. These experiments showed that spoken words, whether spoken grasp types (for example, hand positions called writing tripod or palmar pinch) or spoken colors (such as "green" or "yellow"), could be decoded from the neural activity. This suggests that the SMG would be a good candidate region for implanting BMIs, as the BMIs could be connected to prosthetics that enable a person to move and speak.

"The finding of an area that encodes both imagined grasps and spoken words extends the concept of cognitive BMIs, in which signals from high-level cognitive areas of the brain can provide a very large variety of useful brain signals," says Andersen. "In this study, the speech-related activity was for spoken words, and our next step is to see if imagined speech, that inner dialog we have with ourselves, can also be decoded from the SMG."

The paper is titled "Decoding grasp and speech signals from the cortical grasp circuit in a tetraplegic human" and is published in Neuron.

More information: Sarah K. Wandelt et al, Decoding grasp and speech signals from the cortical grasp circuit in a tetraplegic human, Neuron (2022). DOI: 10.1016/j.neuron.2022.03.009