New research sheds light on how human vision perceives scale

Researchers from Aston University and the University of York have gained new insights into how the human brain makes perceptual judgments about the external world.

The study, published on May 8, 2023, in the journal PLOS ONE, explored the computational mechanisms the human brain uses to perceive the size of objects in the world around us.

The research, led by Professor Tim Meese in the School of Optometry at Aston University and Dr. Daniel Baker in the Department of Psychology at the University of York, shows that our visual system can exploit 'defocus blur' to infer perceptual scale, but that it does so crudely.

It is well known that to derive object size from retinal image size, our visual system needs to estimate the distance to the object. The retinal image contains many pictorial cues, such as linear perspective, which help the system derive the relative size of objects. However, to derive absolute size, the system needs to know about spatial scale.

The visual system can achieve this by taking account of defocus blur, the blur seen in parts of a photograph that lie outside the camera's depth of field. The math behind this has been well worked out by others, but the study asked the question: does human vision exploit this math?
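To illustrate why defocus blur carries information about absolute scale, here is a minimal sketch based on the standard small-angle thin-lens approximation, in which the angular size of the blur circle is roughly the aperture diameter multiplied by the defocus in dioptres. The function names, the 4 mm pupil, and the 10 m and 20 m distances are illustrative assumptions, not values taken from the study.

```python
def angular_blur(aperture_m, focus_distance_m, object_distance_m):
    """Approximate angular diameter (radians) of the blur circle.

    Small-angle thin-lens approximation: aperture diameter (metres)
    times the defocus in dioptres (difference of inverse distances).
    """
    defocus_dioptres = abs(1.0 / focus_distance_m - 1.0 / object_distance_m)
    return aperture_m * defocus_dioptres


def blur_for_scaled_scene(scale, aperture_m=0.004,
                          focus_distance_m=10.0, object_distance_m=20.0):
    """Blur for the same scene layout shrunk by `scale` (0.01 means a 1:100 model).

    All default values are hypothetical and chosen only for illustration.
    """
    return angular_blur(aperture_m,
                        focus_distance_m * scale,
                        object_distance_m * scale)


full_scale = blur_for_scaled_scene(1.0)    # viewer focused at 10 m, object at 20 m
model = blur_for_scaled_scene(0.01)        # same layout at 1:100 (0.1 m and 0.2 m)

print(f"full-scale blur: {full_scale:.6f} rad")
print(f"1:100 model blur: {model:.6f} rad")
print(f"ratio: {model / full_scale:.1f}")
```

Under these assumptions, shrinking every distance by a factor of 100 increases the angular blur by the same factor, even though the retinal image layout is unchanged. That is the sense in which strong defocus blur can signal a small, nearby scene.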

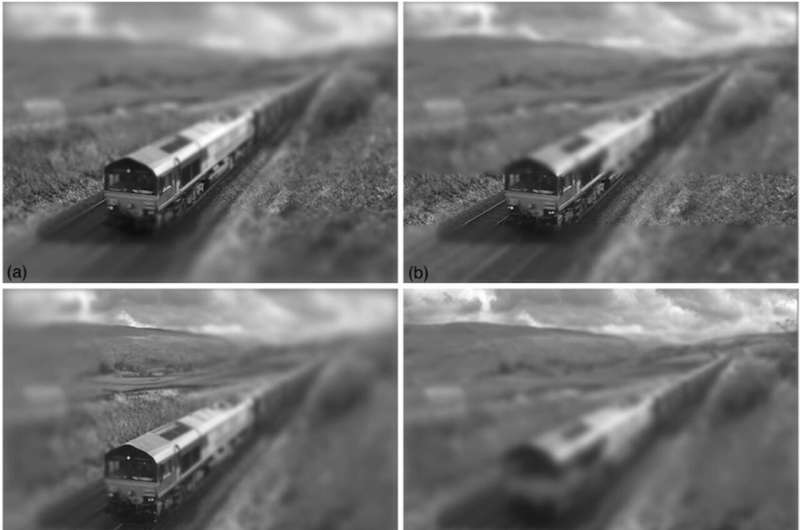

The research team presented participants with pairs of photographs. Each pair contained a full-scale railway scene subjected to one of various artificial blur treatments, and a small-scale model of a railway scene photographed with a long exposure and small aperture to diminish defocus blur. The task was to identify which photograph in each pair was the real full-scale scene.

When the artificial blur was appropriately oriented with the ground plane (the horizontal plane representing the ground on which the viewer is standing) in the full-scale scenes, participants were fooled and believed the small models to be the full-scale scenes. Remarkably, this did not require the application of realistic gradients of blur. Simple uniform bands of blur at the top and bottom of the photographs achieved almost equivalent miniaturization effects.
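The difference between a graded blur treatment and simple uniform bands can be sketched as two per-row blur profiles for an image. This is only an illustration: the function names, row counts, band height, and blur strengths below are hypothetical and are not the parameters used in the study.

```python
def gradient_profile(n_rows, focus_row, max_sigma):
    """Blur strength per image row, growing linearly with distance from the in-focus row."""
    furthest = max(focus_row, n_rows - 1 - focus_row)
    if furthest == 0:
        return [0.0] * n_rows
    return [max_sigma * abs(row - focus_row) / furthest for row in range(n_rows)]


def band_profile(n_rows, band_rows, sigma):
    """Uniform blur in a band at the top and bottom of the image, none in between."""
    return [sigma if row < band_rows or row >= n_rows - band_rows else 0.0
            for row in range(n_rows)]


# Hypothetical 5-row image, in focus at the middle row.
graded = gradient_profile(n_rows=5, focus_row=2, max_sigma=4.0)
banded = band_profile(n_rows=5, band_rows=1, sigma=4.0)

print(graded)
print(banded)
```

Each value would be the strength of the blur applied to that row of the photograph. The graded profile changes smoothly with image height, while the banded profile blurs only the top and bottom strips by a constant amount.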

Tim Meese, professor of vision science at Aston University, said, "Our results indicate that human vision can exploit defocus blur to infer perceptual scale but that it does this crudely—more a heuristic than a metrical analysis. Overall, our findings provide new insights into the computational mechanisms used by the human brain in perceptual judgments about the relation between ourselves and the external world."

Daniel Baker, senior lecturer in psychology at the University of York, said, "These findings demonstrate that our perception of size is not perfect and can be influenced by other properties of a scene. It also highlights the remarkable adaptability of the visual system. This might have relevance for understanding the computational principles underlying our perception of the world. For example, when judging the size and distance of hazards when driving."

More information: Tim S. Meese et al., Blurring the boundary between models and reality: Visual perception of scale assessed by performance, PLOS ONE (2023). DOI: 10.1371/journal.pone.0285423