June 8, 2023 feature

Exploring how the human brain and artificial neural networks assemble knowledge

As humans explore the world and experience new things, they innately start to make sense of what they encounter, forming mental connections between objects, people, places, and events. Neuroscientists have been trying to identify the neural processes underpinning this 'mental assembly of knowledge' for decades, and their studies have yielded several important findings.

In recent years, research focusing on this topic has somewhat intensified, due to the emergence of artificial neural networks (ANNs), computational tools inspired by the structure and function of neural networks in the brain that can be trained to tackle different tasks. Attaining a better understanding of the neural processes that enable the successful assembly of knowledge in humans could ultimately help to adapt the design of ANNs and improve their performance on tasks that can benefit from this capability.

Concurrently, some researchers have also started comparing how the human brain tackles specific tasks to the processes underpinning the functioning of ANNs. These comparisons could unveil interesting parallels between AI and the human brain, which could be beneficial for both neuroscience and computer science research.

A research group at the University of Oxford recently carried out a study exploring the assembly of knowledge by the human brain and ANN-based computational models. Their paper, published in Neuron, identified an approach that could help to enhance knowledge assembly in AI tools.

"Human understanding of the world can change rapidly when new information comes to light, such as when a plot twist occurs in a work of fiction," Stephanie Nelli, Lukas Braun and their colleagues wrote in their paper. "This flexible 'knowledge assembly' requires few-shot reorganization of neural codes for relations among objects and events. However, existing computational theories are largely silent about how this could occur."

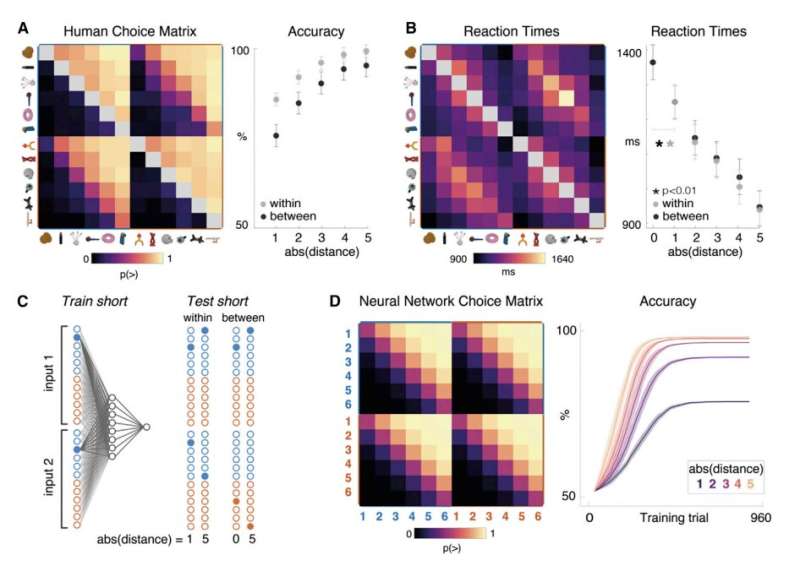

To investigate how the human brain assembles knowledge, the researchers carried out a series of experiments involving 34 participants. These participants were asked to complete a simple computerized task, which required them to make decisions about new objects that they were shown on a screen.

As the participants completed this task, their brains were scanned using a functional magnetic resonance imaging (fMRI) scanner. This imaging method detects small changes in blood flow associated with brain activity.

"Participants learned a transitive ordering among novel objects within two distinct contexts before exposure to new knowledge that revealed how they were linked," Nelli, Braun and their colleagues explained in their paper. "Blood-oxygen-level-dependent (BOLD) signals in dorsal frontoparietal cortical areas revealed that objects were rapidly and dramatically rearranged on the neural manifold after minimal exposure to linking information."

Essentially, Nelli, Braun and their colleagues found that as the participants learned new information about objects and the relationships between them, the ways in which these objects were represented in the brain appeared to be 'rearranged'. Using these findings, the team tried to replicate a similar process in a computational model based on an ANN.

Their approach allows the model to rapidly assemble and re-assemble the knowledge it acquires. It does this using an adaptation of online stochastic gradient descent, a technique that updates a model's parameters incrementally, one training example at a time.
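
To make the idea of online stochastic gradient descent more concrete, here is a minimal Python sketch of the plain, unadapted technique applied to a toy transitive-ordering task. It is not the model from the paper and does not include the authors' adaptation. The one-score-per-item model, the logistic loss, the function names (`train_online_sgd`, `prob_above`) and all parameter values are assumptions chosen purely for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def train_online_sgd(pairs, n_items, lr=0.1, epochs=500, seed=0):
    """Learn one scalar score per item from pairwise comparisons.

    pairs: list of (i, j) tuples, each meaning "item i ranks above item j".
    Assumed toy model: P(i above j) = sigmoid(scores[i] - scores[j]).
    """
    rng = np.random.default_rng(seed)
    scores = np.zeros(n_items)
    for _ in range(epochs):
        # Visit the training pairs in a fresh random order each epoch.
        for k in rng.permutation(len(pairs)):
            i, j = pairs[k]
            p = sigmoid(scores[i] - scores[j])
            # One gradient step on the log loss -log(p) for this single example.
            step = lr * (1.0 - p)
            scores[i] += step
            scores[j] -= step
    return scores


def prob_above(scores, i, j):
    """Model's probability that item i ranks above item j."""
    return sigmoid(scores[i] - scores[j])


# Train only on neighbouring items (0 above 1, 1 above 2, ...).
n_items = 7
adjacent_pairs = [(i, i + 1) for i in range(n_items - 1)]
scores = train_online_sgd(adjacent_pairs, n_items)

print("learned scores:", np.round(scores, 2))
# Items 0 and 6 were never compared directly during training.
print("P(item 0 above item 6):", round(float(prob_above(scores, 0, 6)), 3))
```

Because each update nudges only the two items in the current pair, the full ordering emerges gradually over many small steps. That gradual character is why rapid, few-shot reorganization of the kind seen in the participants calls for something beyond this plain version.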

Overall, the recent study by this team of researchers confirms previous findings highlighting the involvement of dorsal brain structures, particularly the parietal cortex, in encoding abstract representations of objects, which can change over time as humans acquire new knowledge. In the future, their findings could inform the development of other computational approaches that better replicate this "knowledge assembly process."

More information: Stephanie Nelli et al, Neural knowledge assembly in humans and neural networks, Neuron (2023). DOI: 10.1016/j.neuron.2023.02.014

© 2023 Science X Network