
Scientists use AI-generated images to map visual functions in the brain

Researchers at Weill Cornell Medicine, Cornell Tech and Cornell's Ithaca campus have demonstrated the use of AI-selected natural images and AI-generated synthetic images as neuroscientific tools for probing the visual processing areas of the brain. The goal is to apply a data-driven approach to understand how vision is organized while potentially removing biases that may arise when looking at responses to a more limited set of researcher-selected images.

In the study, published in Communications Biology, the researchers had volunteers look at images that had been selected or generated based on an AI model of the human visual system. The images were predicted to maximally activate several visual processing areas. Using functional magnetic resonance imaging (fMRI) to record the brain activity of the volunteers, the researchers found that the images did activate the target areas significantly better than control images.

The researchers also showed that they could use this image-response data to tune their vision model for individual volunteers, so that images generated to be maximally activating for a particular individual activated the target region more strongly than images generated from a general model.

"We think this is a promising new approach to study the neuroscience of vision," said study senior author Dr. Amy Kuceyeski, a professor of mathematics in radiology and of mathematics in neuroscience at the Feil Family Brain and Mind Research Institute at Weill Cornell Medicine.

The study was a collaboration with the laboratory of Dr. Mert Sabuncu, a professor of electrical and computer engineering at Cornell Engineering and Cornell Tech and of electrical engineering in radiology at Weill Cornell Medicine. The study's first author was Dr. Zijin Gu, who was a doctoral student co-mentored by Dr. Sabuncu and Dr. Kuceyeski at the time of the study.

Making an accurate model of the human visual system, in part by mapping brain responses to specific images, is one of the more ambitious goals of modern neuroscience. Researchers have found, for example, that one visual processing region may activate strongly in response to an image of a face, whereas another may respond to a landscape. Scientists must rely mainly on non-invasive methods in pursuit of this goal, given the risk and difficulty of recording brain activity directly with implanted electrodes.

The preferred non-invasive method is fMRI, which essentially records changes in blood flow in small vessels of the brain—an indirect measure of brain activity—as subjects are exposed to sensory stimuli or otherwise perform cognitive or physical tasks. An fMRI machine can read out these tiny changes in three dimensions across the brain at a resolution on the order of cubic millimeters.

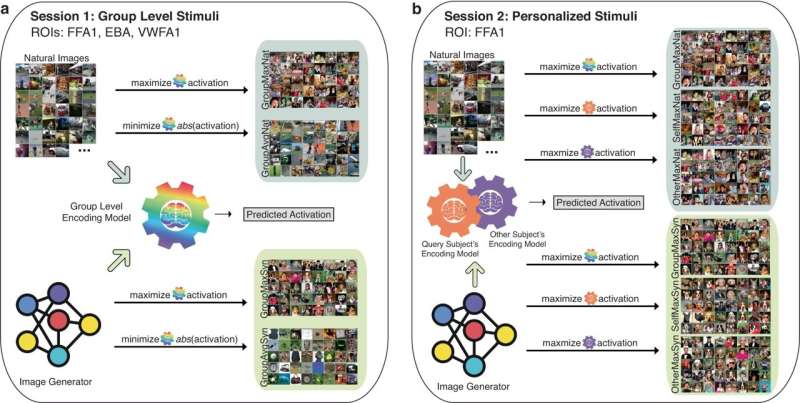

For their own studies, Dr. Kuceyeski, Dr. Sabuncu and their teams used an existing dataset comprising tens of thousands of natural images, with corresponding fMRI responses from human subjects, to train an artificial neural network (ANN), a type of AI system, to model the human brain's visual processing system.

They then used this model to predict which images across the dataset should maximally activate several targeted vision areas of the brain. They also coupled the model with an AI-based image generator to create synthetic images designed for the same purpose.
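The selection step can be pictured with a small sketch. This is a hypothetical illustration, not the study's code: it assumes each candidate image already has a feature vector (for example, from a pretrained vision network) and models one region's response as a simple linear readout of those features, whereas the study used an ANN-based model. The names `predict_response` and `select_probes`, and all values, are made up for illustration. The sketch ranks images by predicted response, keeps the top k as predicted maximal activators, and picks "average activator" controls as the remaining images whose predictions sit closest to the pool mean.

```python
from typing import Dict, List, Sequence, Tuple


def predict_response(features: Sequence[float], weights: Sequence[float], bias: float = 0.0) -> float:
    """Hypothetical linear readout: predicted region response = w . x + b."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def select_probes(
    pool: Dict[str, Sequence[float]],
    weights: Sequence[float],
    k: int,
) -> Tuple[List[str], List[str]]:
    """Return (predicted maximal activators, average-activator controls) image IDs."""
    preds = {img: predict_response(f, weights) for img, f in pool.items()}
    # Rank images from highest to lowest predicted response; keep the top k.
    ranked = sorted(preds, key=lambda img: preds[img], reverse=True)
    maximal = ranked[:k]
    # Controls: among the remaining images, those predicted closest to the pool mean.
    mean = sum(preds.values()) / len(preds)
    remaining = [img for img in preds if img not in maximal]
    average = sorted(remaining, key=lambda img: abs(preds[img] - mean))[:k]
    return maximal, average


if __name__ == "__main__":
    # Toy pool: 2-D features standing in for real image embeddings (illustrative only).
    pool = {
        "face_01": [1.0, 0.2],
        "face_02": [0.9, 0.1],
        "scene_01": [0.1, 0.9],
        "scene_02": [0.2, 0.8],
        "object_01": [0.5, 0.5],
    }
    face_readout = [1.0, -0.5]  # hypothetical weights for a face-preferring region
    print(select_probes(pool, face_readout, k=2))
```

With this toy readout the two "face" entries rank highest, while `object_01` and `scene_02` become the controls. Excluding the maximal activators from the control set keeps the two conditions disjoint even in a very small pool.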

"Our general idea here has been to map and model the visual system in a systematic, unbiased way, in principle even using images that a person normally wouldn't encounter," Dr. Kuceyeski said.

The researchers enrolled six volunteers and recorded their fMRI responses to these images, focusing on the responses in several visual processing areas.

The results showed that, for both the natural images and the synthetic images, the predicted maximal activator images, on average across the subjects, did activate the targeted brain regions significantly more than a set of images that were selected or generated to be only average activators. This supports the general validity of the team's ANN-based model and suggests that even synthetic images may be useful as probes for testing and improving such models.

In a follow-on experiment, the team used the image and fMRI-response data from the first session to create separate ANN-based visual system models for each of the six subjects. They then used these individualized models to select or generate predicted maximal-activator images for each subject.
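One way to picture an individualized model is to start from the group model and fine-tune it on a single subject's image-response pairs. The article does not say exactly how the subject-specific models were built, so the sketch below is hypothetical: it assumes a linear readout, plain gradient descent on mean squared error, and an L2 penalty (`penalty`) that keeps the subject's weights near the group weights when subject data are scarce. The function names and the toy data are illustrative only.

```python
from typing import List, Sequence


def predict(weights: Sequence[float], x: Sequence[float]) -> float:
    """Toy linear readout of one region's response from image features."""
    return sum(w * xi for w, xi in zip(weights, x))


def mse(weights: Sequence[float], features: Sequence[Sequence[float]], responses: Sequence[float]) -> float:
    """Mean squared error of the readout on a set of image-response pairs."""
    return sum((predict(weights, x) - y) ** 2 for x, y in zip(features, responses)) / len(responses)


def personalize(
    group_weights: Sequence[float],
    features: Sequence[Sequence[float]],
    responses: Sequence[float],
    lr: float = 0.1,
    penalty: float = 0.01,
    steps: int = 500,
) -> List[float]:
    """Hypothetical fine-tuning of group readout weights on one subject's data.

    Minimizes MSE + penalty * ||w - group_weights||^2 by gradient descent,
    starting from the group weights.
    """
    w = list(group_weights)
    n = len(responses)
    for _ in range(steps):
        grad = [0.0] * len(w)
        # Gradient of the mean-squared-error term.
        for x, y in zip(features, responses):
            err = predict(w, x) - y
            for j, xj in enumerate(x):
                grad[j] += 2.0 * err * xj / n
        # Add the penalty gradient (pull toward the group model), then take a step.
        for j in range(len(w)):
            grad[j] += 2.0 * penalty * (w[j] - group_weights[j])
            w[j] -= lr * grad[j]
    return w


if __name__ == "__main__":
    group = [1.0, 0.0]
    feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.2]]
    resp = [0.5, 1.0, 1.5, 0.45]  # toy subject whose true readout is [0.5, 1.0]
    subject = personalize(group, feats, resp)
    print(mse(group, feats, resp), mse(subject, feats, resp))
```

The penalty is a design choice for this sketch: with only one session of data per subject, shrinking toward the group model guards against overfitting, at the cost of a small bias toward the group weights.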

The fMRI responses to these images showed that, at least for the synthetic images, there was greater activation of the targeted visual region, a face-processing region called FFA1, compared to the responses to images based on the group model. This result suggests that AI and fMRI can be useful for individualized visual-system modeling, for example to study differences in visual system organization across populations.

The researchers are now running similar experiments using a more advanced image generator called Stable Diffusion.

The same general approach could be useful in studying other senses such as hearing, they noted.

Dr. Kuceyeski also hopes ultimately to study the therapeutic potential of this approach.

"In principle, we could alter the connectivity between two parts of the brain using specifically designed stimuli, for example to weaken a connection that causes excess anxiety," she said.

More information: Zijin Gu et al, Human brain responses are modulated when exposed to optimized natural images or synthetically generated images, Communications Biology (2023). DOI: 10.1038/s42003-023-05440-7