This article has been reviewed according to Science X's editorial process and policies. Editors have highlighted the following attributes while ensuring the content's credibility: fact-checked, trusted source, proofread.

Study discovers neurons in the human brain that can predict what we are going to say before we say it

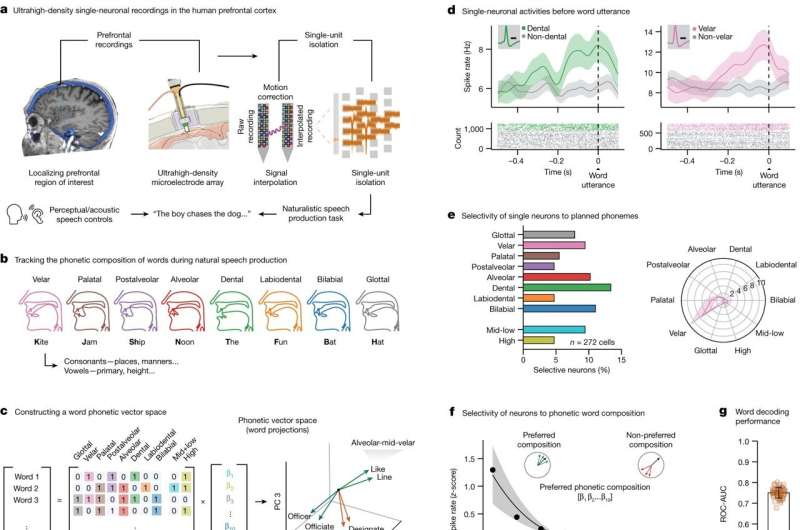

Using advanced brain recording techniques, a new study led by researchers at Massachusetts General Hospital (MGH) shows how neurons in the human brain work together to let people plan the words they want to say and then produce them aloud as speech.

Together, these findings provide a detailed map of how speech sounds such as consonants and vowels are represented in the brain well before they are even spoken and how they are strung together during language production.

The work, published in Nature, sheds light on the neurons that enable language production and could improve the understanding and treatment of speech and language disorders.

"Although speaking usually seems easy, our brains perform many complex cognitive steps in the production of natural speech—including coming up with the words we want to say, planning the articulatory movements and producing our intended vocalizations," says senior author Ziv Williams, MD, an associate professor in Neurosurgery at MGH and Harvard Medical School.

"Our brains perform these feats surprisingly fast—about three words per second in natural speech—with remarkably few errors. Yet how we precisely achieve this feat has remained a mystery."

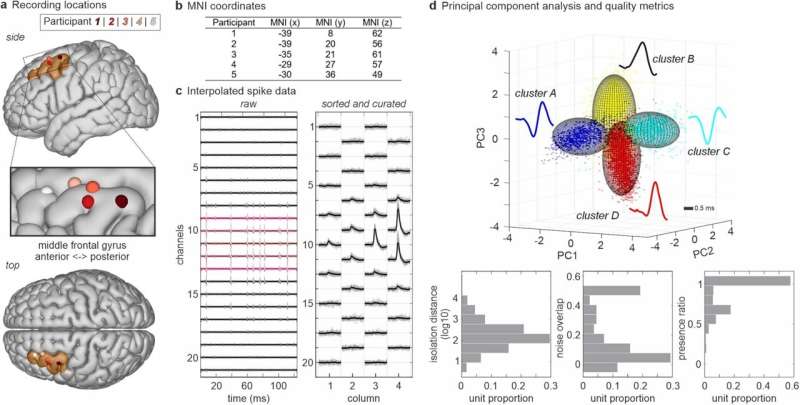

Williams and his colleagues used a cutting-edge technology called Neuropixels probes to record the activity of single neurons in the prefrontal cortex, a frontal region of the human brain. They identified cells that are involved in language production and may underlie the ability to speak, and they found that separate groups of neurons are dedicated to speaking and to listening.

"The use of Neuropixels probes in humans was first pioneered at MGH. These probes are remarkable—they are smaller than the width of a human hair, yet they also have hundreds of channels that are capable of simultaneously recording the activity of dozens or even hundreds of individual neurons," says Williams, who had worked to develop these recording techniques with Sydney Cash, MD, Ph.D., a professor in Neurology at MGH and Harvard Medical School, who also helped lead the study.

"Use of these probes can therefore offer unprecedented new insights into how neurons in humans collectively act and how they work together to produce complex human behaviors such as language," Williams continues.

The study showed how neurons in the brain represent some of the most basic elements involved in constructing spoken words—from simple speech sounds called phonemes to their assembly into more complex strings such as syllables.

For example, the consonant /d/ (as in "da"), which is produced by touching the tip of the tongue to the ridge just behind the upper teeth, is needed to say the word "dog."

By recording individual neurons, the researchers found that certain neurons become active before this phoneme is spoken out loud. Other neurons reflected more complex aspects of word construction such as the specific assembly of phonemes into syllables.

With their technology, the investigators showed that it's possible to reliably determine the speech sounds that individuals will say before they articulate them.

In other words, scientists can predict which combination of consonants and vowels a person will produce before the words are actually spoken. This capability could be used to build speech prostheses or brain-machine interfaces that generate synthetic speech, which could benefit a range of patients.

"Disruptions in the speech and language networks are observed in a wide variety of neurological disorders—including stroke, traumatic brain injury, tumors, neurodegenerative disorders, neurodevelopmental disorders, and more," says Arjun Khanna, who is a co-author on the study. "Our hope is that a better understanding of the basic neural circuitry that enables speech and language will pave the way for the development of treatments for these disorders."

The researchers hope to extend their work to more complex language processes, investigating how people choose the words they intend to say and how the brain assembles words into sentences that convey a person's thoughts and feelings to others.

Additional authors include William Muñoz, Young Joon Kim, Yoav Kfir, Angelique C. Paulk, Mohsen Jamali, Jing Cai, Martina L. Mustroph, Irene Caprara, Richard Hardstone, Mackenna Mejdell, Domokos Meszena, Abigail Zuckerman, and Jeffrey Schweitzer.

More information: Arjun R. Khanna et al, Single-neuronal elements of speech production in humans, Nature (2024). DOI: 10.1038/s41586-023-06982-w