This article has been reviewed according to Science X's editorial process and policies. Editors have highlighted the following attributes while ensuring the content's credibility:

fact-checked

peer-reviewed publication

trusted source

proofread

Improving AI large language models helps them better align with human brain activity

With generative artificial intelligence (GenAI) transforming the social interaction landscape in recent years, large language models (LLMs) have been put in the spotlight. These are deep-learning models, trained on large amounts of text, that enable GenAI platforms to process language.

A recent study by The Hong Kong Polytechnic University (PolyU) found that LLMs perform more like the human brain when they are trained in ways that more closely resemble how humans process language. The finding offers important insights for both brain research and the development of AI models.

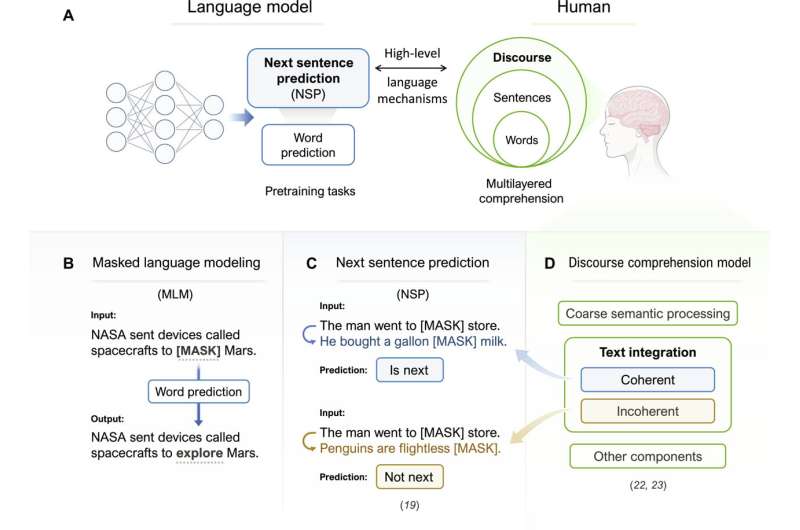

Current LLMs mostly rely on a single type of pretraining—contextual word prediction. This simple learning strategy has achieved surprising success when combined with massive training data and model parameters, as shown by popular LLMs such as ChatGPT.
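To make "contextual word prediction" concrete, here is a minimal sketch of how a single sentence becomes training examples for the next-word variant of the objective. It assumes simple whitespace tokenization (real LLMs use subword tokenizers), and the function name `next_word_examples` is hypothetical. Masked-word prediction, used by models such as BERT, hides words in the middle of a sentence instead, but the principle of scoring the model on one word given its context is the same.

```python
def next_word_examples(sentence: str) -> list[tuple[list[str], str]]:
    """Turn one sentence into (context, target) pairs for next-word prediction.

    Assumption: whitespace tokenization. Real LLMs use subword tokenizers and
    train on enormous numbers of such pairs.
    """
    tokens = sentence.split()
    # At position i the model sees tokens[:i] and is trained to predict tokens[i].
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


if __name__ == "__main__":
    for context, target in next_word_examples("the cat sat on the mat"):
        print(" ".join(context), "->", target)
```

Each printed line is one training signal: a context and the single word the model is scored on predicting. Nothing in this objective asks whether two sentences belong together.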

Recent studies also suggest that word prediction in LLMs can serve as a plausible model for how humans process language. However, humans do not simply predict the next word but also integrate high-level information in natural language comprehension.

A research team led by Prof. Li Ping, Dean of the Faculty of Humanities and Sin Wai Kin Foundation Professor in Humanities and Technology at PolyU, investigated the next sentence prediction (NSP) task, which simulates one central process of discourse-level comprehension in the human brain: judging whether a pair of sentences is coherent. The team incorporated NSP into model pretraining and examined how well the model's data correlated with brain activation.
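As an illustration of what an NSP training example looks like, here is a minimal sketch following the recipe popularized by BERT. This is an assumption: the study's exact data pipeline may differ. Each example pairs a sentence with either the sentence that actually follows it (label 1, coherent) or a randomly chosen distractor (label 0, incoherent), and the model learns to tell the two apart. The helper `make_nsp_pairs` is hypothetical.

```python
import random


def make_nsp_pairs(sentences: list[str], rng: random.Random) -> list[tuple[str, str, int]]:
    """Build next sentence prediction (NSP) examples from an ordered text.

    Assumption: BERT-style recipe. Each adjacent pair yields one example whose
    second sentence is the true continuation (label 1, coherent) about half the
    time and otherwise a randomly drawn distractor (label 0, incoherent).
    BERT draws distractors from other documents; for brevity this sketch draws
    them from elsewhere in the same list.
    """
    pairs = []
    for i in range(len(sentences) - 1):
        first = sentences[i]
        # Candidate distractors: every sentence except the current one and its true successor.
        candidates = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
        if candidates and rng.random() < 0.5:
            # Negative example: an unrelated sentence, labelled incoherent.
            pairs.append((first, rng.choice(candidates), 0))
        else:
            # Positive example (also the fallback when no distractor exists).
            pairs.append((first, sentences[i + 1], 1))
    return pairs


if __name__ == "__main__":
    story = [
        "Mary walked to the bakery.",
        "She bought a loaf of bread.",
        "The bread was still warm.",
        "She ate a slice on the way home.",
    ]
    for first, second, label in make_nsp_pairs(story, random.Random(0)):
        print(label, "|", first, "|", second)
```

Passing a seeded `random.Random` makes the coherent/incoherent split reproducible. In the BERT-style setup, a model pretrained on such pairs learns this binary classification alongside word prediction.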

The study was recently published in the journal Science Advances.

The research team trained two models, one with NSP enhancement and one without; both were also trained on word prediction. Functional magnetic resonance imaging (fMRI) data were collected from people reading either connected or disconnected sentences. The team then examined how closely the activity patterns of each model matched the brain activity patterns in the fMRI data.
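There are several ways to quantify how closely model patterns match brain patterns. One widely used approach is representational similarity analysis (RSA), sketched below as an assumed stand-in; it is not necessarily the exact analysis used in the study. For the model and for the brain data separately, compute how dissimilar the responses to every pair of stimuli are, then correlate the two sets of dissimilarities. The function names and the pattern vectors (one list of numbers per stimulus, for example hidden-layer activations for a model or voxel responses for a brain region) are hypothetical.

```python
from itertools import combinations
from math import sqrt


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation of two equal-length sequences (each must vary)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def rdm(patterns: list[list[float]]) -> list[float]:
    """Upper triangle of a representational dissimilarity matrix.

    Entry (i, j) is 1 - Pearson r between the patterns for stimuli i and j,
    listed for every unordered pair of stimuli.
    """
    return [1 - pearson(patterns[i], patterns[j])
            for i, j in combinations(range(len(patterns)), 2)]


def ranks(values: list[float]) -> list[float]:
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result


def alignment(model_patterns: list[list[float]], brain_patterns: list[list[float]]) -> float:
    """Spearman correlation between model and brain RDMs (higher means better aligned)."""
    return pearson(ranks(rdm(model_patterns)), ranks(rdm(brain_patterns)))
```

Computing `alignment(nsp_patterns, brain_patterns)` and `alignment(word_only_patterns, brain_patterns)` for the same brain region yields two scores that can be compared directly (both argument names are placeholders). A rank-based (Spearman) comparison is used because it assumes only that the two dissimilarity structures are monotonically related, not linearly related.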

Training with NSP clearly helped. The model with NSP matched human brain activity in multiple brain areas much better than the model trained only on word prediction. Its mechanism also maps well onto established neural models of human discourse comprehension.

The results offer new insights into how our brains process extended discourse such as conversations. For example, parts of the right hemisphere of the brain, not just the left, contributed to understanding longer discourse. The model trained with NSP could also better predict how fast someone read, showing that simulating discourse comprehension through NSP helped the AI understand humans better.

Recent LLMs, including ChatGPT, have relied on vastly increasing the training data and model size to achieve better performance. Prof. Li Ping said, "There are limitations in just relying on such scaling. Advances should also be aimed at making the models more efficient, relying on less rather than more data. Our findings suggest that diverse learning tasks such as NSP can improve LLMs to be more human-like and potentially closer to human intelligence."

"More importantly, the findings show how neurocognitive researchers can leverage LLMs to study higher-level language mechanisms of our brain. They also promote interaction and collaboration between researchers in the fields of AI and neurocognition, which will lead to future studies on AI-informed brain studies as well as brain-inspired AI."

More information: Shaoyun Yu et al., Predicting the next sentence (not word) in large language models: What model-brain alignment tells us about discourse comprehension, Science Advances (2024). DOI: 10.1126/sciadv.adn7744