In a noisy environment, lip-reading can help us to better understand the person we are speaking to

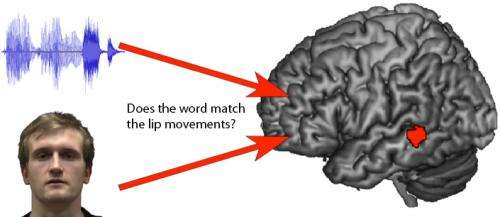

(Medical Xpress)—In a noisy environment, lip-reading can aid understanding of a conversation. Researchers at the Max Planck Institute for Human Cognitive and Brain Sciences who have been investigating this phenomenon are now able to show that the greater the activity in a particular region of the temporal lobe was, the more able participants were to match words with mouth movements. Visual and auditory information are combined in the so-called superior temporal sulcus (STS).

In everyday life we rarely consciously try to lip-read. However, in a noisy environment it is often very helpful to be able to see the mouth of the person you are speaking to. Researcher Helen Blank at the MPI in Leipzig explains why this is so: "When our brain is able to combine information from different sensory sources, for example during lip-reading, speech comprehension is improved." In a recent study, the researchers of the Max Planck Research Group "Neural Mechanisms of Human Communication" investigated this phenomenon in more detail to uncover how visual and auditory brain areas work together during lip-reading.

In the experiment, brain activity was measured using functional magnetic resonance imaging (fMRI) while participants heard short sentences. The participants then watched a short silent video of a person speaking. Using a button press, participants indicated whether the sentence they had heard matched the mouth movements in the video. If the sentence did not match the video, a part of the brain network that combines visual and auditory information showed greater activity, and connectivity between the auditory speech region and the STS increased.

"It is possible that advanced auditory information generates an expectation about the lip movements that will be seen", says Blank. "Any contradiction between the prediction of what will be seen and what is actually observed generates an error signal in the STS."

How strong the activation is depends on the lip-reading skill of participants: the stronger the activation, the more correct responses participants gave. "People that were the best lip-readers showed an especially strong error signal in the STS", Blank explains. This effect seems to be specific to the content of speech: it did not occur when the subjects had to decide if the identity of the voice and face matched.

The results of this study are very important to basic research in this area. A better understanding of how the brain combines auditory and visual information during speech processing could also be applied in clinical settings. "People with hearing impairment are often strongly dependent on lip-reading", says Blank. The researchers suggest that further studies could examine what happens in the brain after lip-reading training or during a combined use of sign language and lip-reading.

More information: Blank, H., von Kriegstein, K. Mechanisms of enhancing visual–speech recognition by prior auditory information. NeuroImage 65, 15 January 2013, 109–118.