Look at what I'm saying: Engineers show brain depends on vision to hear

University of Utah bioengineers discovered our understanding of language may depend more heavily on vision than previously thought: under the right conditions, what you see can override what you hear. These findings suggest artificial hearing devices and speech-recognition software could benefit from a camera, not just a microphone.

"For the first time, we were able to link the auditory signal in the brain to what a person said they heard when what they actually heard was something different. We found vision is influencing the hearing part of the brain to change your perception of reality—and you can't turn off the illusion," says the new study's first author, Elliot Smith, a bioengineering and neuroscience graduate student at the University of Utah. "People think there is this tight coupling between physical phenomena in the world around us and what we experience subjectively, and that is not the case."

The brain considers both sight and sound when processing speech. However, if the two are slightly different, visual cues dominate sound. This phenomenon is named the McGurk effect for Scottish cognitive psychologist Harry McGurk, who pioneered studies on the link between hearing and vision in speech perception in the 1970s. The McGurk effect has been observed for decades, but its origin has been elusive.

In the new study, which appears today in the journal PLOS ONE, the University of Utah team pinpointed the source of the McGurk effect by recording and analyzing brain signals in the temporal cortex, the region of the brain that typically processes sound.

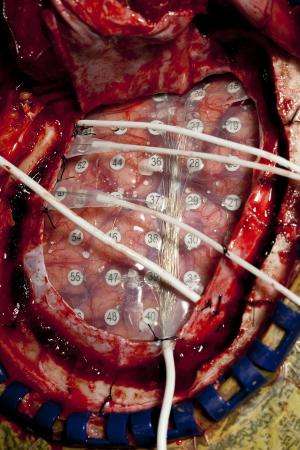

Working with University of Utah bioengineer Bradley Greger and neurosurgeon Paul House, Smith recorded electrical signals from the brain surfaces of four adults with severe epilepsy (two male, two female) from Utah and Idaho. All four were volunteers who were undergoing surgery to treat their epilepsy. House placed three button-sized electrodes on the left, right or both brain hemispheres of each test subject, depending on where each patient's seizures were thought to originate.

These four test subjects were then asked to watch and listen to videos focused on a person's mouth as they said the syllables "ba," "va," "ga" and "tha." Depending on which of three different videos was being watched, the patients had one of three possible experiences as they watched the syllables being mouthed:

- The motion of the mouth matched the sound. For example, the video showed "ba" and the audio sound also was "ba," so the patients saw and heard "ba."

- The motion of the mouth obviously did not match the corresponding sound, like a badly dubbed movie. For example, the video showed "ga" but the audio was "tha," so the patients perceived this disconnect and correctly heard "tha."

- The motion of the mouth was mismatched only slightly with the corresponding sound. For example, the video showed "ba" but the audio was "va," and patients heard "ba" even though the sound really was "va." This demonstrates the McGurk effect: vision overriding hearing.

By measuring the electrical signals in the brain while each video was being watched, Smith and Greger could pinpoint whether auditory or visual brain signals were being used to identify the syllable in each video. When the syllable being mouthed matched the sound or didn't match at all, brain activity increased in correlation with the sound in the video. However, when the McGurk effect video was viewed, the activity pattern changed to resemble what the person saw, not what they heard. Statistical analyses confirmed the effect in all test subjects.

"We've shown neural signals in the brain that should be driven by sound are being overridden by visual cues that say, 'Hear this!'" says Greger. "Your brain is essentially ignoring the physics of sound in the ear and following what's happening through your vision."

Greger, the study's senior author, conducted the research as an assistant professor of bioengineering at the University of Utah. He recently took a faculty position at Arizona State University.

The new findings could help researchers understand what drives language processing in humans, especially in a developing infant brain trying to connect sounds and lip movement to learn language. These findings also may help researchers sort out how language processing goes wrong when visual and auditory inputs are not integrated correctly, such as in dyslexia, Greger says.