How spatial navigation correlates with language

Cognitive neuroscientists from the Higher School of Economics and Aarhus University experimentally demonstrate how spatial navigation impacts language comprehension. The results of the study have been published in NeuroImage.

Language is a complicated cognitive function, which is performed not only by local brain modules but by a distributed network of cortical generators. Physical experiences such as movement and spatial motion play an important role in psychological experience and cognitive function, and this is related to how an individual mentally constructs the meaning of a sentence.

Nikola Vukovic and Yury Shtyrov carried out an experiment at the HSE Centre for Cognition & Decision Making that explains the relationship between the systems responsible for spatial navigation and language. Using neurophysiological data, they describe brain mechanisms that support the use of navigation systems in both spatial and linguistic tasks.

"When we read or hear stories about characters, we have to represent the inherently different perspectives people have on objects and events, and 'put ourselves in their shoes'. Our study is the first to show that our brain mentally simulates sentence perspective by using non-linguistic areas typically in charge of visuo-spatial thought," says Dr. Nikola Vukovic, the scientist who was chiefly responsible for devising and running the experiment.

Previous studies have shown that humans have certain spatial preferences that are either based on one's own body (egocentric) or independent of it (allocentric). Although not absolute and subject to change in various situations, these preferences define how individuals perceive the surrounding space and how they plan and understand navigation in this space.

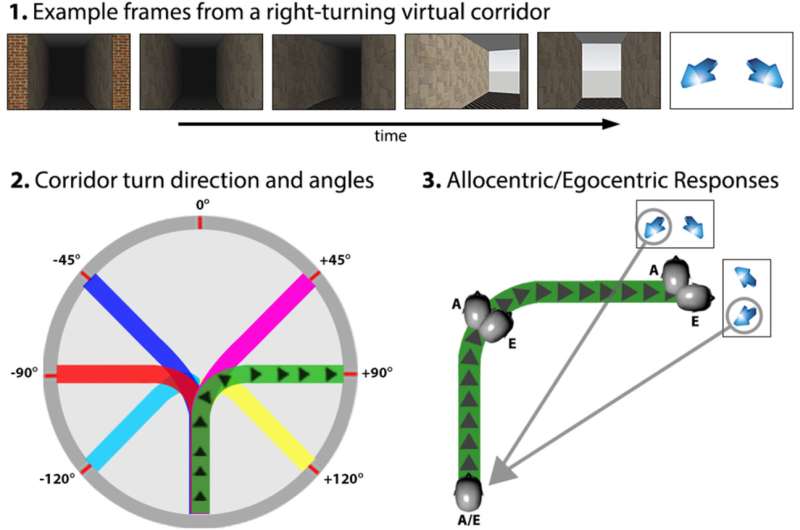

The participants of the experiment solved two types of tasks. The first was a computer-based spatial navigation task involving movement through a twisting virtual tunnel, at the end of which they had to indicate the beginning of the tunnel. The shape of the tunnel was designed so that people with egocentric and allocentric perspectives estimated the starting point differently. This difference in their subjective estimates helped the researchers split the participants according to their reference frame predispositions.

The second task involved understanding simple sentences and matching them with pictures. The pictures differed in terms of their perspective, and the same story could be described using first-person ("I") or second-person ("you") pronouns. The participants had to choose which pictures best matched the situation described by the sentence.

During the experiment, electrical brain activity was recorded in all participants using continuous electroencephalography (EEG). Spectral perturbations registered by EEG demonstrated that many areas responsible for navigation were active during both types of tasks. One of the most interesting findings for the researchers was that the activation of these areas when hearing the sentences also depended on the individual's type of spatial preference.

"Brain activity when solving a language task is related to an individual's egocentric or allocentric perspective, as well as their brain activity in the navigation task. The correlation between navigation and linguistic activities proves that these phenomena are truly connected," emphasized Yury Shtyrov, leading research fellow at the HSE Centre for Cognition & Decision Making and professor at Aarhus University, where he directs MEG/EEG research. "Furthermore, in the process of language comprehension we saw activation in well-known brain navigation systems, which were previously believed to make no contribution to speech comprehension."

These data may one day be used by neurobiologists and health professionals. For example, in some types of aphasia, comprehension of motion-related words suffers, and knowledge of the correlation between the navigation and language systems in the brain could help in the search for mechanisms to restore these links.

More information: Nikola Vukovic et al., Cortical networks for reference-frame processing are shared by language and spatial navigation systems, NeuroImage (2017). DOI: 10.1016/j.neuroimage.2017.08.041