Voices and emotions: the forehead is the key

Gestures and facial expressions betray our emotional state, but what about our voices? How does intonation alone allow us to decode emotions – on the telephone, for example? By observing neuronal activity in the brain, researchers at the University of Geneva (UNIGE), Switzerland, have been able to map the cerebral regions we use to interpret and categorise emotions conveyed by the voice. The results, which are presented in the journal Scientific Reports, underline the essential role played by the frontal regions in interpreting emotions communicated orally. When this process does not function correctly – following a brain injury, for instance – an individual can no longer properly interpret another person's emotions and intentions. The researchers also noted the dense network of connections linking this area to the amygdala, a key structure for processing emotions.

The upper part of the temporal lobe in mammals is closely linked to hearing. One specific area is dedicated to the vocalisations of members of the same species (congeners), making it possible to distinguish them from environmental noises, for example. But the voice is more than a sound to which we are especially sensitive: it is also a vector of emotions.

Categorising and discriminating

"When someone speaks to us, we use the acoustic information that we perceive in him or her and classify it according to various categories, such as anger, fear or pleasure," explains Didier Grandjean, professor in UNIGE's Faculty of Psychology and Educational Sciences (SCAS) and at the Swiss Centre for Affective Sciences (CISA). This way of classifying emotions is called categorisation, and we use it (inter alia) to establish that a person is sad or happy during a social interaction. Categorisation differs from discrimination, which consists of focusing attention on a particular state: detecting or looking for someone happy in a crowd, for example. But how does the brain categorise these emotions and determine what the other person is expressing? In an attempt to answer this question, Grandjean's team analysed the cerebral regions that are mobilised when constructing vocal emotional representations.

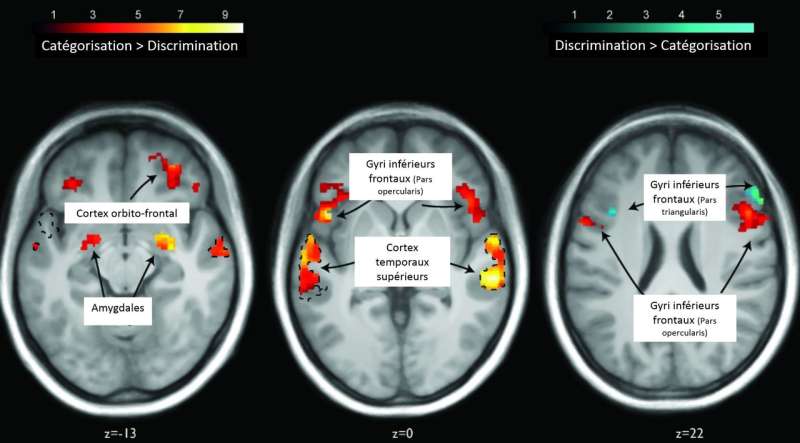

The sixteen adults who took part in the experiment were exposed to a vocalisation database consisting of six men's voices and six women's voices, all uttering meaningless pseudo-words with emotion. The participants first had to classify each voice as angry, neutral or happy, so that the researchers could observe which area of the brain was used for categorisation. Next, the subjects simply had to decide whether a voice was angry or not, or whether it was happy or not, so that the scientists could identify the area recruited by discrimination. "Functional magnetic resonance imaging meant we could observe which areas were being activated in each case. We found that categorisation and discrimination did not use exactly the same region of the inferior frontal cortex," says Sascha Frühholz, who was a researcher at UNIGE's SCAS at the time of the experiment and is now a professor at the University of Zurich.

The crucial role of the frontal lobe

Unlike the distinction between voices and background noise, which is made in the temporal lobe, categorising and discriminating call on the frontal lobe, in particular the inferior frontal gyri (on either side of the forehead). "We expected the frontal lobe to be involved, and had predicted the observation of two different sub-regions that would be activated depending on the action of categorising or discriminating," says Grandjean. In the first case, it was the pars opercularis sub-region that corresponded to the categorisation of voices, while in the second case – discrimination – it was the pars triangularis. "This distinction is linked not just to brain activations selective for the processes studied, but also to differences in their connections with the other cerebral regions that these two operations require," continues Grandjean. "When we categorise, we have to be more precise than when we discriminate. That's why the temporal region, the amygdala and the orbito-frontal cortex – crucial areas for emotion – are used to a much higher degree and are functionally connected to the pars opercularis rather than the pars triangularis."

The research, which emphasises the difference between functional sub-territories in perceiving emotions through vocal communication, shows that the more complex and precise the processes related to emotions are, the more the frontal lobe and its connections with other cerebral regions are recruited. There is a difference between the processing of basic sound information (distinguishing voices from surrounding noise), performed by the upper part of the temporal lobe, and the processing of high-level information (perceived emotions and contextual meanings), performed by the frontal lobe. It is the latter that enables social interaction by decoding the speaker's intentions. "Without this area, it is not possible to represent the emotions of the other person through his or her voice, and we no longer understand his or her expectations and have difficulty integrating contextual information, as in sarcasm," concludes Grandjean. "We now know why an individual with a brain injury affecting the inferior frontal gyrus and the orbito-frontal regions can no longer interpret the emotions related to what his or her peers are saying, and may, as a result, adopt socially inappropriate behaviour."

More information: Mihai Dricu et al. Biased and unbiased perceptual decision-making on vocal emotions, Scientific Reports (2017). DOI: 10.1038/s41598-017-16594-w