Why the world looks stable while we move

Head movements change the image of the environment that reaches the eyes. Yet people still perceive the world as stable, because the brain corrects for the changes in visual information caused by head movements. For the first time, two neuroscientists from the University of Tübingen's Werner Reichardt Centre for Integrative Neuroscience (CIN) have observed these correction processes in the human brain with functional magnetic resonance imaging (fMRI). Their study, now published in NeuroImage, has far-reaching implications for understanding how virtual reality affects the brain.

Even while we move, the environment appears stable, because the brain constantly balances input from different senses. Visual stimuli are compared with input from the sense of balance, with information about the relative positions of the head and body, and with signals about the movements being performed. The result: when we walk or run around, the world surrounding us does not appear to roll or bounce. But when visual stimuli and our perception of movement do not fit together, this balancing act in the brain falls apart.

Anybody who has ever delved into fantasy worlds with virtual reality glasses may have experienced this disconnect. VR glasses continually track head movements, and the computer adjusts the displayed images accordingly. Nevertheless, prolonged use of VR glasses often leads to motion sickness. Even modern VR systems lack the precision needed for visual information and head movements to match perfectly.

Until recently, neuroscientists had not been able to identify the mechanisms that enable the brain to harmonise visual and motion perception. Modern noninvasive methods for studying human subjects, such as fMRI, run into one particular problem: they can only obtain images of a head at rest.

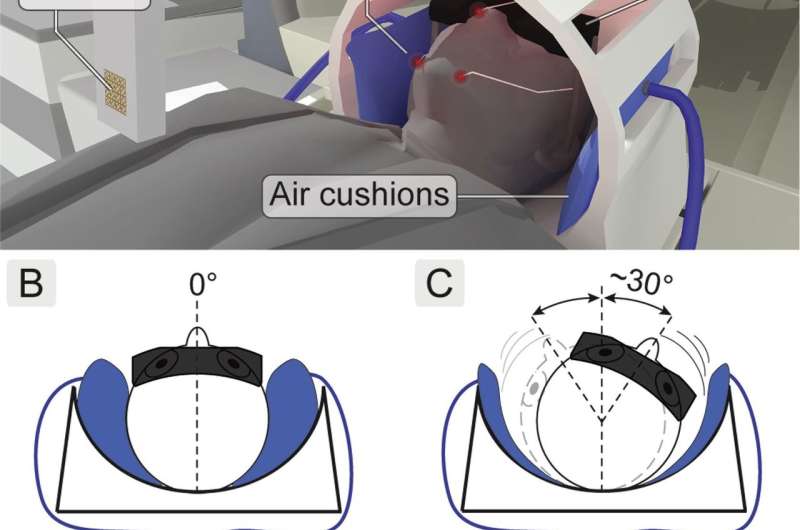

Tübingen researchers Andreas Schindler and Andreas Bartels have developed a sophisticated apparatus to circumvent this problem. With it, they can use fMRI to observe what happens in the brain when we move our head while perceiving visual and motion stimuli that either match or conflict. To do so, subjects wearing VR glasses entered a specially modified fMRI scanner in which computer-controlled air cushions fix the subjects' heads in place immediately after each movement. In some trials, the VR glasses displayed images that were congruent with the head movements; in others, they displayed images that were incongruent with them. Once the air cushions had stabilised the participants' heads after a movement, the fMRI signal was recorded.

Andreas Schindler says, "With fMRI, we cannot directly measure neuronal activity. fMRI just shows blood flow and oxygen saturation in the brain, with a delay of several seconds. That is often seen as a deficiency, but for our study, it was actually useful for once: we were able to record the very moment when the subject's brain was busy balancing its own head movement and the images displayed on the VR glasses. And we were able to do so seconds after the fact, when the subject's head was already resting quietly on its air cushion. Normally, head movements and brain imaging don't go together, but we hacked the system, so to speak."
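The "delay of several seconds" Schindler describes can be illustrated with a small calculation. The sketch below assumes a textbook model that is not taken from the study itself: the canonical double-gamma haemodynamic response function with commonly used default parameters (a peak term of shape 6 minus an undershoot term of shape 16 scaled by 1/6). Under that assumption, it finds when the blood-flow signal triggered by a brief burst of neural activity reaches its maximum.

```python
import math


def gamma_pdf(t: float, shape: float, scale: float = 1.0) -> float:
    """Gamma probability density at time t (seconds); zero for t <= 0."""
    if t <= 0:
        return 0.0
    return (t ** (shape - 1) * math.exp(-t / scale)) / (
        math.gamma(shape) * scale ** shape
    )


def canonical_hrf(t: float) -> float:
    """Assumed double-gamma haemodynamic response to a brief neural event:
    a positive peak (shape 6) minus a smaller undershoot (shape 16, weight 1/6)."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def peak_time(dt: float = 0.01, duration: float = 30.0) -> float:
    """Time in seconds at which the modelled fMRI signal is largest."""
    times = [i * dt for i in range(int(duration / dt) + 1)]
    return max(times, key=canonical_hrf)


if __name__ == "__main__":
    tp = peak_time()
    print(f"Modelled fMRI signal peaks about {tp:.1f} s after the neural event")
```

In this model, the signal peaks roughly five seconds after the event. A head movement lasting about a second is therefore already over, and the head is resting on its cushion, by the time most of the corresponding signal can be measured.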

The researchers could thus observe brain activity that had previously been investigated only in primates and, indirectly, in certain patients with brain lesions. One area in the posterior insular cortex showed heightened activity whenever the VR display and the head movements together simulated a stable environment. When the two signals conflicted, this heightened activity vanished. The same held true in a number of other brain regions involved in processing visual motion.

The new method opens the door to more focused studies of the neuronal interactions between motion and visual perception. Moreover, the Tübingen researchers have shown for the first time what happens in the brain when we enter virtual worlds and balance on the knife's edge between immersion and motion sickness.

More information: Andreas Schindler et al. Integration of visual and non-visual self-motion cues during voluntary head movements in the human brain, NeuroImage (2018). DOI: 10.1016/j.neuroimage.2018.02.006