Researchers find the brain processes sight and sound in same manner

Although sight and hearing are very different senses, Georgetown University Medical Center neuroscientists have found that the human brain learns to make sense of visual and auditory stimuli in the same way.

The researchers describe a two-step process: neurons in one area of the brain learn the representation of the stimuli, and another area categorizes that input to ascribe meaning to it. For example, a person first sees just a car without a roof and then analyzes that stimulus to place it in the category of "convertible." Similarly, when a child learns a new word, the child first has to learn the new sound and then, in a second step, learn that different versions of the word (accents, pronunciations, etc.), spoken by different family members or by their friends, all mean the same thing and need to be categorized together.

"A computational advantage of this scheme is that it allows the brain to easily build on previous content to learn novel information," says the study's senior investigator, Maximilian Riesenhuber, PhD, a professor in Georgetown University School of Medicine's Department of Neuroscience. Study co-authors include first author, Xiong Jiang, PhD; graduate student Mark A. Chevillet; and Josef P. Rauschecker, PhD, all Georgetown neuroscientists.

Their study, published in Neuron, is the first to provide strong evidence that learning in vision and audition follows similar principles. "We have long tried to make sense of senses, studying how the brain represents our multisensory world," says Riesenhuber.

In 2007, the investigators were the first to describe the two-step model in human learning of visual categories. The new study shows that the brain appears to use the same kind of learning mechanisms across sensory modalities.

The findings could also help scientists devise new approaches to restore sensory deficits, Rauschecker, one of the co-authors, says.

"Knowing how senses learn the world may help us devise workarounds in our very plastic brains," he says. "If a person can't process one sensory modality, say vision, because of blindness, there could be substitution devices that allow visual input to be transformed into sounds. So one disabled sense would be processed by other sensory brain centers."

The 16 participants in this study were trained to categorize monkey communication calls, real sounds that mean something to monkeys but are alien in meaning to humans. The investigators divided the sounds into two categories labeled with nonsense names, based on two prototype call types: so-called "coos" and "harmonic arches." Using an auditory morphing system, the investigators were able to create thousands of monkey call combinations from the prototypes, including some very similar calls that required the participants to make fine distinctions between them. Learning to correctly categorize the novel sounds took about six hours.

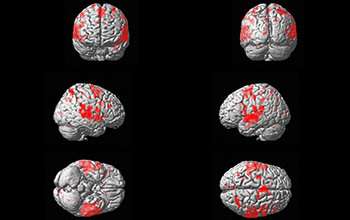

Before and after training, functional magnetic resonance imaging (fMRI) data were obtained from the volunteers to investigate changes in neuronal tuning in the brain that were induced by categorization training. Two advanced fMRI techniques, fMRI rapid adaptation (fMRI-RA) and multi-voxel pattern analysis (MVPA), were used along with conventional fMRI and functional connectivity analyses. In this way, researchers were able to see two distinct sets of changes: a representation of the monkey calls in the left auditory cortex, and tuning analysis that leads to category selectivity for different types of calls in the lateral prefrontal cortex.

"In our study, we used four different techniques, in particular fMRI-RA and MVPA, to independently and synergistically provide converging results. This allowed us to obtain strong results even from a small sample," says co-author Jiang.

Processing sound requires discrimination in acoustics and tuning changes at the level of the auditory cortex, a process that the researchers say is the same in human and animal communication systems. Using monkey calls instead of human speech forced the participants to categorize the sounds purely on the basis of acoustics rather than meaning.

"At an evolutionary level, humans and animals need to understand who is friend and who is foe, and sight and sound are integral to these judgments," Riesenhuber says.

More information: Neuron (2018). DOI: 10.1016/j.neuron.2018.03.014