July 5, 2019 feature

Computing hubs in the hippocampus and cortex

Neural computation occurs in large neural networks within dynamic brain states, yet it remains poorly understood whether the functions are performed by a specific subset of neurons or whether they occur in specific, dynamic regions. In a recent study, Wesley Clawson and co-workers at the Institute of Neuroscience Systems in France used high-density recordings in the hippocampus, medial entorhinal cortex and medial prefrontal cortex of the rat. Using this animal model, they identified computing substates in which specific computing hub neurons performed well-defined storage and sharing operations in a brain state-dependent manner.

The scientists retrieved distinct computing substates in each global brain state, which included REM (rapid-eye-movement) and NREM (non-rapid-eye-movement) sleep. The results suggested that the functional roles were not hardwired but reassigned at a specified time-scale. Clawson et al. identified sequences of substates whose temporal organization lay between order and disorder. The results of the study are now published in Science Advances.

Information processing in the brain can be approached on three levels: (1) biophysical, (2) algorithmic and (3) behavioral. The algorithmic level remains the least understood; it describes emergent functional computations that can be decomposed into simpler processing steps with complex architectures. At the lowest level of individual system components such as single neurons, the building blocks of distributed information processing can be modeled as primitive operations of storing, transferring or nonlinearly integrating information streams. During resting-state conditions, both blood-oxygen-level-dependent (BOLD) and electroencephalogram (EEG) signals are characterized by discrete periods of functional connectivity or topographical stability known as resting state networks and microstates. Neuroscientists have demonstrated that transitions between these large-scale epochs are not periodic or random but occur through a fractal and complex syntax that is not yet understood.

This raises further questions. Does the macroscale organization also occur at the microscale? Is neuronal activity at the level of the microcircuit associated with different styles of information processing? To answer these questions, the first goal of Clawson and co-workers was to determine whether information processing at the local neuronal circuit level was structured into discrete sequences of substates, a hallmark of computation. For this, they focused on low-level computing operations at the level of the single neuron, such as basic information storage and sharing. They studied two conditions, anesthesia and natural sleep, which were characterized by theta (THE)/slow oscillation (SO) states and rapid eye movement (REM)/nonREM sleep, respectively.

During the work, Clawson and co-workers considered the CA1 region of the hippocampus (HPC), the medial entorhinal cortex (mEC) and the medial prefrontal cortex (mPFC) to investigate the algorithmic properties shared between the regions. Their second goal was to determine whether primitive processing operations were localized or distributed in the microcircuit, as previously proposed. This raised two key questions:

(1) Are specific operations driven by a few key neurons in a rich-club architecture?

(2) Do neurons have predetermined computing roles as information "sharer," "storer" or rigidly described partners in their functional interactions? More specifically, is information routed through a hardwired neuronal "switchboard" system?

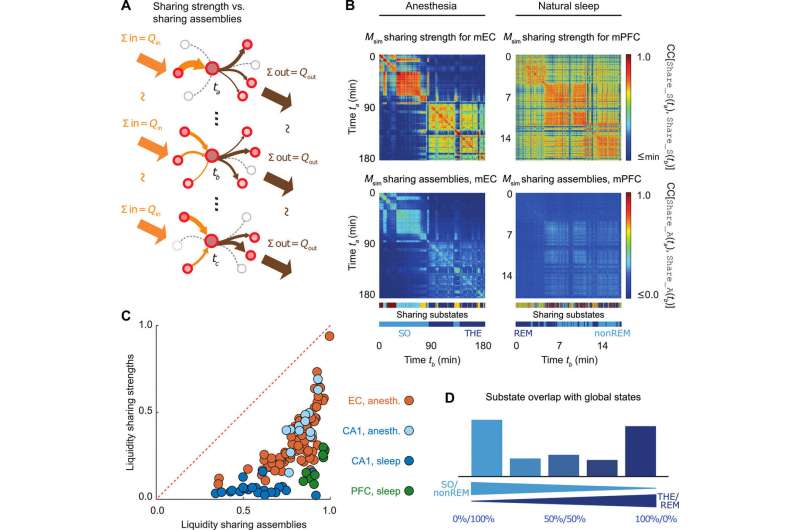

In total, the findings suggest a more distributed and less hierarchical style of information processing in neuronal microcircuits, closer to emergent liquid state computation than to pre-programmed processing pipelines.

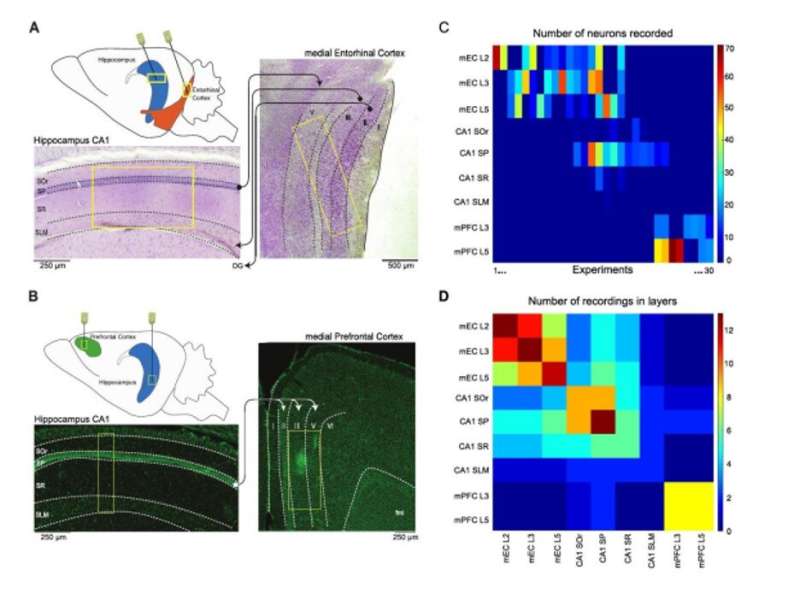

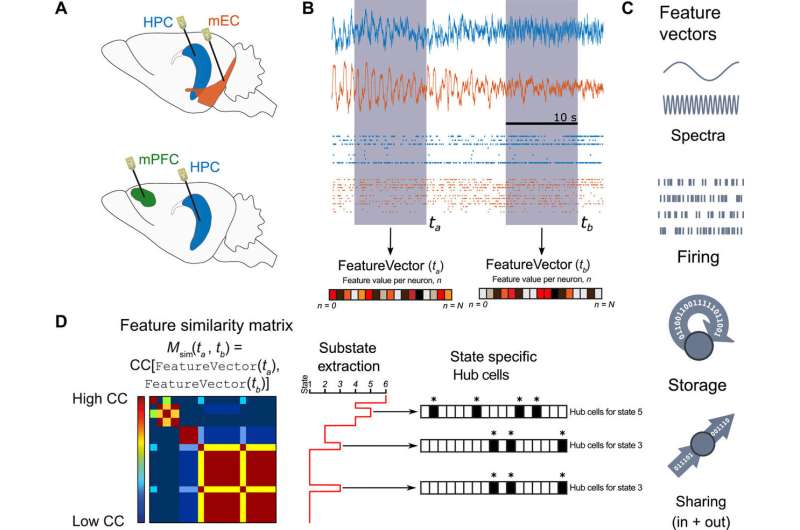

Clawson et al. recorded neurons simultaneously from the CA1 region of the HPC (hippocampus) and mEC (medial entorhinal cortex) under anesthesia and from the mPFC (medial prefrontal cortex) region during natural sleep. They focused on two factors –

(1) How much information could a neuron buffer in time? They measured the parameter as active information storage, and

(2) Information sharing – how much of a neuron's activity information was available to other neurons? This was measured as mutual information.
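To make the two measures concrete, the sketch below estimates both from binned, discretized spike trains. It is a minimal illustration under stated assumptions and not the authors' analysis pipeline: it uses a naive plug-in (frequency-count) estimator, and the function names, the history length `k` and the toy spike trains are all hypothetical. Active information storage is computed here as the mutual information between a neuron's recent past and its present activity.

```python
from collections import Counter
from math import log2


def mutual_information(xs, ys):
    """Plug-in estimate of I(X;Y) in bits from paired discrete samples."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px = Counter(xs)
    py = Counter(ys)
    mi = 0.0
    for (x, y), c in joint.items():
        # p(x,y) * log2( p(x,y) / (p(x) * p(y)) ), written with raw counts
        mi += (c / n) * log2(c * n / (px[x] * py[y]))
    return mi


def active_information_storage(spikes, k=1):
    """Mutual information between the past k bins and the current bin.

    k is an illustrative history length, not a value taken from the study.
    """
    pasts = [tuple(spikes[t - k:t]) for t in range(k, len(spikes))]
    present = spikes[k:]
    return mutual_information(pasts, present)


# Toy binary spike trains (1 = spike in the bin); purely illustrative data.
regular = [0, 1] * 50            # perfectly predictable from its own past
a = [0, 0, 1, 1] * 25
b = [0, 1, 0, 1] * 25            # statistically independent of `a`

storage = active_information_storage(regular, k=1)   # close to 1 bit
sharing_self = mutual_information(regular, regular)  # 1 bit
sharing_indep = mutual_information(a, b)             # 0 bits
```

A perfectly regular neuron stores nearly one bit per bin about its own future, while two independent neurons share no information. Plug-in estimators of this kind are biased for short recordings, so real analyses typically add bias correction or significance testing.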

The neuroscientists identified global brain states by clustering analysis of field recordings performed in the CA1 region. Using unsupervised clustering, they identified two states of anesthesia corresponding to periods dominated by slow oscillations (SO state) and theta oscillations (THE state), as well as two sleep states corresponding to REM and nonREM episodes.
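The idea of separating global states without labels can be sketched with a very small clustering routine. The example below is an assumption-laden illustration rather than the method used in the study: it supposes that each recording window has already been summarized by a single hypothetical feature, the ratio of theta-band to slow-oscillation power, and splits the windows into two groups with a one-dimensional two-means procedure.

```python
def two_means(values, iterations=50):
    """Split 1-D values into two clusters (label 0 = low, 1 = high).

    A deliberately simple k-means with k=2, initialized at the minimum
    and maximum of the data so the result is deterministic.
    """
    lo, hi = min(values), max(values)
    labels = [0] * len(values)
    for _ in range(iterations):
        # Assign each value to the nearer of the two centroids.
        labels = [0 if abs(v - lo) <= abs(v - hi) else 1 for v in values]
        group0 = [v for v, lab in zip(values, labels) if lab == 0]
        group1 = [v for v, lab in zip(values, labels) if lab == 1]
        if not group0 or not group1:
            break  # all values identical: nothing to separate
        new_lo = sum(group0) / len(group0)
        new_hi = sum(group1) / len(group1)
        if new_lo == lo and new_hi == hi:
            break  # converged
        lo, hi = new_lo, new_hi
    return labels


# Hypothetical theta/slow power ratios, one per recording window.
ratios = [0.20, 0.30, 0.25, 3.10, 2.80, 3.30, 0.22]
states = two_means(ratios)  # 0 = slow-oscillation-like, 1 = theta-like
```

In this toy case the low-ratio windows would be read as SO-like and the high-ratio windows as THE-like. A real analysis would work with richer spectral features and more than one dimension.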

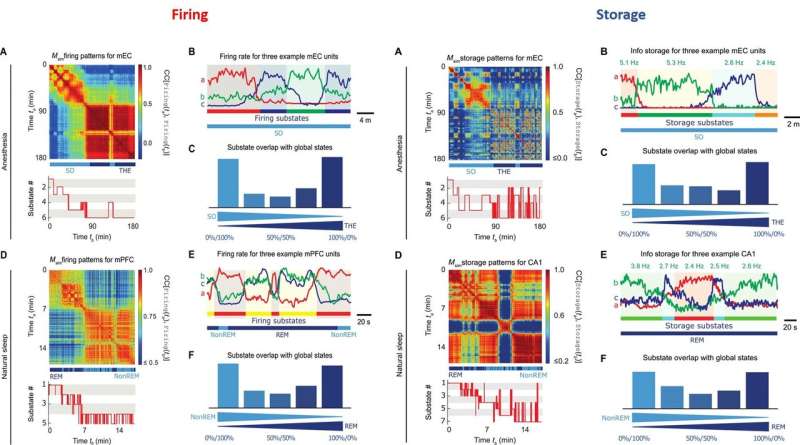

During proof-of-principle experiments in the animal models, the scientists assessed brain state-dependent firing substates and revealed a total of six firing substates in mEC and five in mPFC during THE oscillations and REM episodes. The scientists showed that the neuronal activity was compartmentalized, with discrete switching events from one substate to another. The firing substates were brain state and region specific, without strict entrainment by the global oscillatory state. Clawson et al. quantified the amount of information conveyed by neuronal activity at any given time using Shannon entropy.
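Shannon entropy measures how unpredictable a sequence of symbols is. As a rough sketch of how information content can be tracked over time, the example below computes the entropy of spike counts within consecutive, non-overlapping windows. The window length, the use of spike counts as symbols and the toy data are assumptions made for illustration, not details from the study.

```python
from collections import Counter
from math import log2


def shannon_entropy(symbols):
    """Entropy in bits of the empirical distribution of `symbols`."""
    n = len(symbols)
    # sum over symbols of p * log2(1 / p), with p = count / n
    return sum((c / n) * log2(n / c) for c in Counter(symbols).values())


def windowed_entropy(counts, window):
    """Entropy of spike counts in consecutive non-overlapping windows."""
    return [
        shannon_entropy(counts[start:start + window])
        for start in range(0, len(counts) - window + 1, window)
    ]


# Toy spike counts per time bin: a variable epoch, then a constant one.
counts = [0, 1, 0, 1, 2, 2, 2, 2]
profile = windowed_entropy(counts, window=4)  # [1.0, 0.0]
```

The first window alternates between two equally likely counts and carries one bit per bin, whereas the second is constant and carries none. An abrupt change of this kind in the entropy profile is the sort of signature one would look for when searching for discrete substates.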

Similar to the firing substates, the information storage substates did not show strict alignment between the studied regions. During anesthesia, the scientists observed the absolute storage values to be stronger in mEC than in CA1. However, during natural sleep, the storage values for CA1 were two orders of magnitude larger than during anesthesia. The results showed that information storage was dynamically distributed in discrete substates in a brain state- and brain region-dependent manner. As a result, the storage capacity of a neuron could vary substantially across time.

Following the single-cell analysis, the scientists next determined with which neurons the sharing cells exchanged information. Although the sum of incoming and outgoing information remained constant in each sharing substate, the information was shared across different cell assemblies from one time period to the next. All three brain regions showed remarkably liquid-like sharing assemblies across brain states, with specificity to the brain regions.

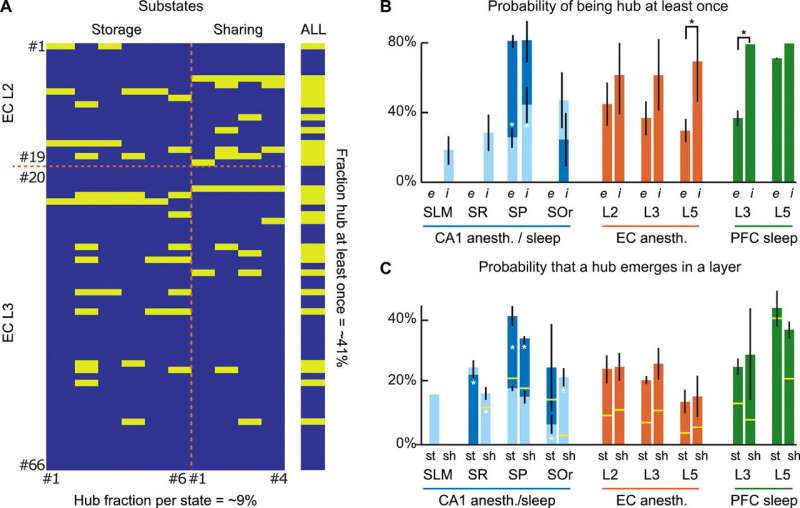

Since functional, effective and anatomical hub neurons were previously identified in the brain, Clawson et al. complemented the concept by introducing storing and sharing hubs, where neurons displayed elevated storage or sharing values, respectively. The scientists observed a general tendency for inhibitory interneurons to serve as computing hubs more often than excitatory cells. The tendency was stronger for cortical regions during anesthesia and during sleep. In total, the scientists observed only 12 percent of the neurons to function as "multifunction hubs" for both storage and sharing functions.
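One simple way to picture the hub concept is to rank neurons by each measure and flag the top few. The sketch below does this for made-up storage and sharing values. The cut-off (the top 20 percent of neurons) and the helper name `hubs` are hypothetical choices for illustration; the article does not state which criterion the authors used to define "elevated" values.

```python
def hubs(values, fraction=0.1):
    """Return the indices of neurons whose value is in the top `fraction`.

    The fraction is an illustrative threshold, not one from the study.
    """
    n_hubs = max(1, round(len(values) * fraction))
    ranked = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    return set(ranked[:n_hubs])


# Made-up per-neuron values for ten neurons within one substate.
storage = [0.02, 0.40, 0.05, 0.01, 0.35, 0.03, 0.04, 0.02, 0.06, 0.01]
sharing = [0.10, 0.90, 0.12, 0.85, 0.11, 0.09, 0.13, 0.10, 0.08, 0.12]

storage_hubs = hubs(storage, fraction=0.2)   # {1, 4}
sharing_hubs = hubs(sharing, fraction=0.2)   # {1, 3}
multifunction_hubs = storage_hubs & sharing_hubs  # {1}
```

Here only neuron 1 qualifies as a hub for both operations, mirroring the observation that multifunction hubs are a minority. Because the roles were found to change between substates, such a ranking would have to be recomputed for every substate rather than once per neuron.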

The findings showed the existence of substate sequences in three different brain regions, the HPC, the mEC and the mPFC, during anesthesia and natural sleep. Since the analysis was limited to a few brain states, the scientists assume they may have underestimated the proportion of GABA neurons acting as computational hubs in the study. The observed capacity to generate complex sequences of patterns is a hallmark of self-organizing systems and is associated with their emergent potential to perform universal computation. Such dynamics at the "edge of chaos" (the transition between order and disorder) offer advantages for information processing. Understanding such patterns and dynamics within brain states will benefit early interpretation of neurological disorders.

In this way, Wesley Clawson and co-workers revealed a rich algorithmic-level organization of brain computation during natural sleep and anesthesia. The work indicates the existence of a basic architecture for low-level computations shared by diverse neuronal circuits. Although the work did not prove the functional relevance of substate dynamics, it can serve as a platform for investigating previously undisclosed neural computations. The neuroscientists next aim to perform a similar analysis during behavioral tasks, with the addition of goal-driven maze navigation.

More information: 1. Computing hubs in the hippocampus and cortex advances.sciencemag.org/content/5/6/eaax4843 Wesley Clawson et al. 26 June 2019, Science Advances.

2. Between order and chaos www.nature.com/articles/nphys2190 James Crutchfield, 22 December 2011, Nature Physics.

3. Reliable Recall of Spontaneous Activity Patterns in Cortical Networks www.jneurosci.org/content/29/46/14596 Olivier Marre, November 2009, Journal of Neuroscience.

4. GABAergic Hub Neurons Orchestrate Synchrony in Developing Hippocampal Networks science.sciencemag.org/content/326/5958/1419 P. Bonifazi et al. December 2009, Science.

© 2019 Science X Network