Reading a mouse's mind from its face: New tool decodes neural activity using facial movements

Mice are always in motion. Even if there's no external motivation for their actions—like a cat lurking a few feet away—mice are constantly sweeping their whiskers back and forth, sniffing around their environment and grooming themselves.

These spontaneous actions light up neurons across many different regions of the brain, providing a moment-by-moment neural representation of what the animal is doing. But how the brain uses these persistent, widespread signals remains a mystery.

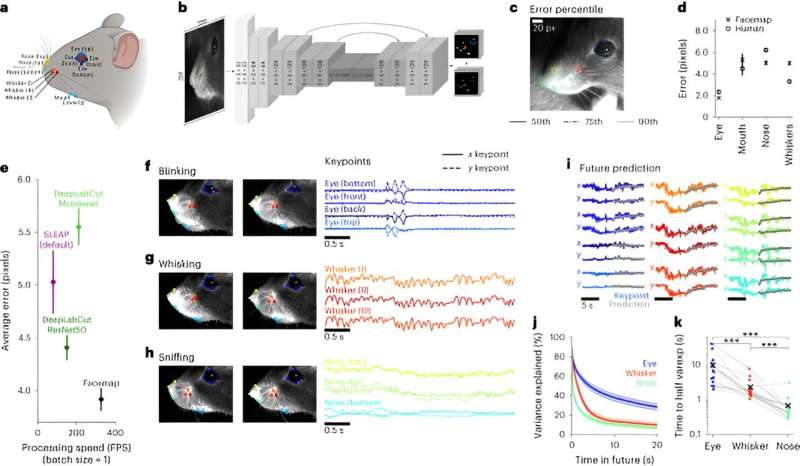

Now, scientists at HHMI's Janelia Research Campus have developed a tool that could bring researchers one step closer to understanding these enigmatic brain-wide signals. The tool, known as Facemap, uses deep neural networks to relate information about a mouse's eye, whisker, nose, and mouth movements to neural activity in the brain.

The findings are published in the journal Nature Neuroscience.

"The goal is: What are those behaviors that are being represented in those brain regions? And, if a lot of that information is in the facial movements, then how can we track that better?" says Atika Syeda, a graduate student in the Stringer Lab and lead author of a new paper describing the research.

Creating Facemap

The idea to create a better tool for understanding brain-wide signals grew out of previous research from Janelia Group Leaders Carsen Stringer and Marius Pachitariu. They found that activity in many different areas across a mouse's brain, long thought to be background noise, is driven by these spontaneous behaviors. Still unclear, however, was how the brain uses this information.

"The first step in really answering that question is understanding what are the movements that are driving this activity, and what exactly is represented in these brain areas," Stringer says.

To do this, researchers need to be able to track and quantify movements and correlate them with brain activity. But the existing tools for such experiments weren't optimized for use in mice, so researchers couldn't get the information they needed.

"All of these different brain areas are driven by these movements, which is why we think it is really important to get a better handle on what these movements actually are because our previous techniques really couldn't tell us what they were," Stringer says.

To address this shortcoming, the team looked at 2,400 video frames and labeled distinct points on the mouse face corresponding to different facial movements associated with spontaneous behaviors. They homed in on 13 key points on the face that represent individual behaviors, like whisking, grooming, and licking.

The team first developed a neural network-based model that could identify these key points in videos of mouse faces recorded in the lab under various experimental setups.

They then developed a second deep neural network-based model that relates these key point data, which capture the mouse's movements, to neural activity. This allowed them to see how a mouse's spontaneous behaviors drive neural activity in a particular brain region.
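To make the idea of relating facial key points to neural activity a little more concrete, here is a minimal sketch in Python. It is not Facemap's method: Facemap uses a deep neural network for this step, whereas the sketch swaps in a plain linear ridge regression, and both the key point traces (x and y positions for 13 points) and the "neural activity" are synthetic random data. The function names `ridge_fit` and `variance_explained` are hypothetical. The model is fit on the first 80% of frames and scored on the held-out remainder by the fraction of variance explained, one common way to quantify how much neural activity a behavioral model predicts.

```python
import numpy as np

def ridge_fit(X, Y, lam=1.0):
    """Closed-form ridge regression weights mapping features X (T x D) to targets Y (T x N)."""
    D = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(D), X.T @ Y)

def variance_explained(Y_true, Y_pred):
    """Fraction of variance in Y_true captured by Y_pred, pooled over all neurons."""
    resid = np.sum((Y_true - Y_pred) ** 2)
    total = np.sum((Y_true - Y_true.mean(axis=0)) ** 2)
    return 1.0 - resid / total

rng = np.random.default_rng(0)
T, n_keypoints, n_neurons = 5000, 13, 100

# Hypothetical key point traces: x and y position of each of 13 facial points per frame.
keypoints = rng.standard_normal((T, 2 * n_keypoints))
# Synthetic "neural activity": a random linear readout of the key points plus noise.
true_W = rng.standard_normal((2 * n_keypoints, n_neurons))
neural = keypoints @ true_W + 3.0 * rng.standard_normal((T, n_neurons))

# Split in time: fit on the first 80% of frames, evaluate on the rest.
split = int(0.8 * T)
X_train, X_test = keypoints[:split], keypoints[split:]
Y_train, Y_test = neural[:split], neural[split:]

# Center features and targets using training-set means only.
x_mean, y_mean = X_train.mean(axis=0), Y_train.mean(axis=0)
W = ridge_fit(X_train - x_mean, Y_train - y_mean, lam=10.0)

# Predict held-out activity and score it.
Y_pred = (X_test - x_mean) @ W + y_mean
ve = variance_explained(Y_test, Y_pred)
print(f"held-out variance explained: {ve:.2f}")
```

A linear model is used here only because it fits in a few lines; a nonlinear model such as a deep network can capture relationships between movements and neural activity that a linear readout would miss.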

Facemap tracks orofacial movements and behaviors in mice more accurately and faster than previous methods. The tool is also designed specifically for mouse faces and comes pretrained to track many different mouse movements. Together, these features make Facemap a particularly effective tool: its model can predict twice as much neural activity in mice as prior methods.

In earlier work, the team found that spontaneous behaviors activated neurons in the visual cortex, the brain region that processes visual information from the eye. Using Facemap, they discovered that these clusters of behavior-related neural activity were more spread out across the visual cortex than previously thought.

Facemap is freely available and easy to use. Hundreds of researchers around the world have already downloaded the tool since it was released last year.

"This is something that if anyone wanted to get started, they could download Facemap, run their videos, and get their results on the same day," Syeda says. "It just makes research, in general, much easier."

More information: Atika Syeda et al, Facemap: a framework for modeling neural activity based on orofacial tracking, Nature Neuroscience (2023). DOI: 10.1038/s41593-023-01490-6