Study finds human brains respond differently to deepfake voices and real ones

Do our brains process natural voices and deepfake voices differently? Research conducted at the University of Zurich indicates that this is the case. In a new study, researchers have identified two brain regions that respond differently to natural and deepfake voices. The work is published in the journal Communications Biology.

Much like fingerprints, our voices are unique and can help us identify people. The latest voice-synthesizing algorithms have become so powerful that it is now possible to create deepfake clones that closely resemble the identity features of natural speakers. This means it is becoming increasingly easy to use deepfake technology to mimic natural voices, for example, to scam people over the phone or replicate the voice of a famous actor in an AI voice assistant.

Until now, however, it has been unclear how the human brain reacts when presented with such fake voices. Do our brains accept them as real, or do they recognize the "fake"? A team of researchers at the University of Zurich has now found that people often accept fake voice identities as real, but that our brains respond differently to deepfake voices than to those of natural speakers.

Identity in deepfake voices almost deceptively similar

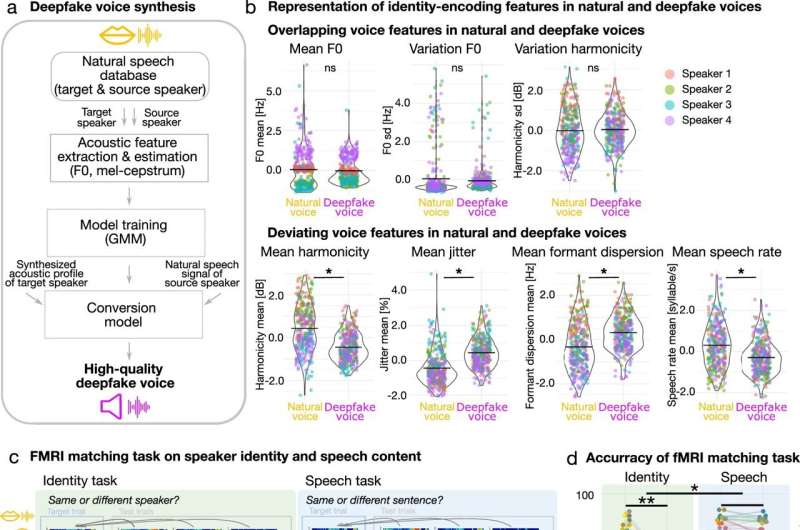

The researchers first used psychoacoustical methods to test how well human voice identity is preserved in deepfake voices. To do this, they recorded the voices of four male speakers and then used a conversion algorithm to generate deepfake voices.

In the main experiment, 25 participants listened to multiple voices and were asked to decide whether or not the identities of two voices were the same. Participants either had to match the identity of two natural voices, or of one natural and one deepfake voice.

Participants correctly matched the deepfake identities in two-thirds of cases. "This illustrates that current deepfake voices might not perfectly mimic an identity, but do have the potential to deceive people," says Claudia Roswandowitz, first author and a postdoc at the Department of Computational Linguistics.

Reward system reacts to natural voices but not deepfakes

The researchers then used imaging techniques to examine which brain regions responded differently to deepfake voices compared to natural voices. They successfully identified two regions that were able to recognize the fake voices: the nucleus accumbens and the auditory cortex.

"The nucleus accumbens is a crucial part of the brain's reward system. It was less active when participants were tasked with matching the identity between deepfakes and natural voices," says Roswandowitz. In contrast, the nucleus accumbens was much more active when participants compared two natural voices.

Auditory cortex distinguishes acoustic quality in natural and deepfake voices

The second brain region active during the experiments, the auditory cortex, appears to respond to acoustic differences between the natural voices and the deepfakes. This region, which processes auditory information, was more active when participants had to distinguish between deepfakes and natural voices.

"We suspect that this region responds to the deepfake voices' imperfect imitation in an attempt to compensate the missing acoustic information in deepfakes," says Roswandowitz. The less natural and likable participants perceived a fake voice to be compared with the corresponding natural one, the greater the difference in auditory cortex activity.

Deepfake voices appear to be less pleasant to listen to, almost regardless of the acoustic sound quality. "Humans can thus only be partially deceived by deepfakes. The neural mechanisms identified during deepfake processing particularly highlight our resilience to fake information, which we encounter more frequently in everyday life," says Roswandowitz.

More information: Claudia Roswandowitz et al., Cortical-striatal brain network distinguishes deepfake from real speaker identity, Communications Biology (2024). DOI: 10.1038/s42003-024-06372-6