Human brain recalls visual features in the reverse of the order in which it detects them

Scientists at Columbia's Zuckerman Institute have contributed to solving a paradox of perception, literally upending models of how the brain constructs interpretations of the outside world. When observing a scene, the brain first processes details—spots, lines and simple shapes—and uses that information to build internal representations of more complex objects, like cars and people. But when recalling that information, the brain remembers those larger concepts first to then reconstruct the details—representing a reverse order of processing. The research, which involved people and employed mathematical modeling, could shed light on phenomena ranging from eyewitness testimony to stereotyping to autism.

This study was published today in Proceedings of the National Academy of Sciences.

"The order by which the brain reacts to, or encodes, information about the outside world is very well understood," said Ning Qian, PhD, a neuroscientist and a principal investigator at Columbia's Mortimer B. Zuckerman Mind Brain Behavior Institute. "Encoding always goes from simple things to the more complex. But recalling, or decoding, that information is trickier to understand, in large part because there was no method—aside from mathematical modeling—to relate the activity of brain cells to a person's perceptual judgment."

Without any direct evidence, researchers have long assumed that decoding follows the same hierarchy as encoding: you start from the ground up, building up from the details. The main contribution of this work with Misha Tsodyks, PhD, the paper's co-senior author, who performed this work while at Columbia and is now at the Weizmann Institute of Science in Israel, "is to show that this standard notion is wrong," Dr. Qian said. "Decoding actually goes backward, from high levels to low."

As an analogy for this reversed decoding, Dr. Qian cites last year's presidential election.

"As you observed the things one candidate said and did over time, you may have formed a categorical negative or positive impression of that person. From that moment forward, the way in which you recalled the candidate's words and actions are colored by that overall impression," said Dr. Qian. "Our findings revealed that higher-level categorical decisions—'this candidate is trustworthy'—tend to be stable. But lower-level memories—'this candidate said this or that'—are not as reliable. Consequently, high-level decoding constrains low-level decoding."

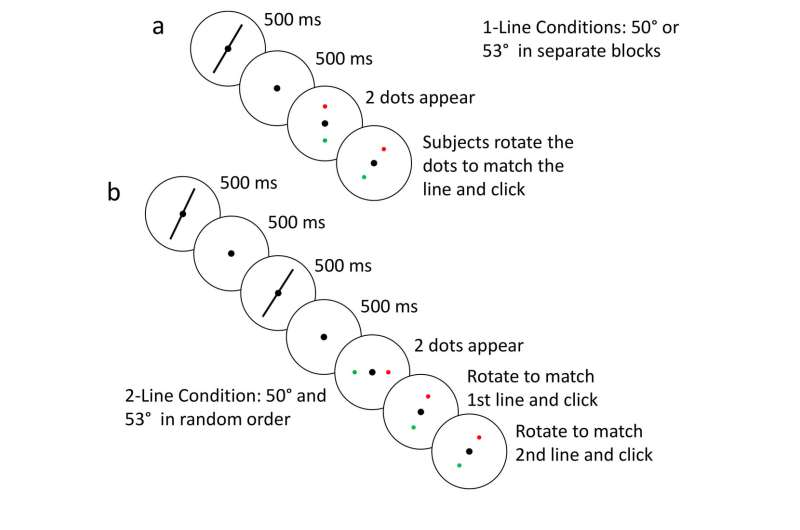

To explore this decoding hierarchy, Drs. Qian and Tsodyks and their team conducted an experiment that was simple in design in order to have a clear interpretation of the results. They asked 12 people to perform a series of similar tasks. In the first, they viewed a line angled at 50 degrees on a computer screen for half a second. Once it disappeared, the participants repositioned two dots on the screen to match what they remembered to be the angle of the line. They then repeated this task 50 more times. In a second task, the researchers changed the angle of the line to 53 degrees. And in a third task, the participants were shown both lines at the same time, and then had to orient pairs of dots to match each angle.

Previously held models of decoding predicted that in the two-line task, people would first decode the individual angle of each line (a lower-level feature) and then use that information to decode the two lines' relationship (a higher-level feature).

"Memories of exact angles are usually imprecise, which we confirmed during the first set of one-line tasks. So, in the two-line task, traditional models predicted that the angle of the 50-degree line would frequently be reported as greater than the angle of the 53-degree line," said Dr. Qian.

But that is not what happened. Traditional models also failed to explain several other aspects of the data, which revealed bi-directional interactions between the ways participants recalled the angles of the two lines. The brain appeared to encode one line, then the other, and finally encode their relative orientation. But during decoding, when participants were asked to report the individual angle of each line, their brains used the lines' relationship (which angle is greater) to estimate the two individual angles.

"This was striking evidence of participants employing this reverse decoding method," said Dr. Qian.

The authors argue that reverse decoding makes sense, because context is more important than details. Looking at a face, you want to assess quickly if someone is frowning, and only later, if need be, estimate the exact angles of the eyebrows. "Even your daily experience shows that perception seems to go from high to low levels," Dr. Qian added.

To lend further support, the authors then constructed a mathematical model of what they think happens in the brain. They used something called Bayesian inference, a statistical method of estimating probability based on prior assumptions. Unlike typical Bayesian models, however, this new model used the higher-level features as the prior information for decoding lower-level features. Going back to the visual line task, they developed an equation to estimate individual lines' angles based on the lines' relationship. The model's predictions fit the behavioral data well.
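The idea of using a higher-level feature as the prior can be sketched in a few lines. This is an illustration under stated assumptions, not the equation from the paper: it takes the ordinal relationship (which line has the greater angle) as already decoded, assumes Gaussian memory noise with a made-up standard deviation of 4 degrees and an otherwise flat prior over angles, ignores the circular nature of orientation, and approximates the posterior mean by rejection sampling. The name `decode_with_order_prior` is hypothetical.

```python
import random


def decode_with_order_prior(memory1, memory2, first_is_smaller,
                            noise_sd=4.0, samples=20_000, seed=0):
    """Estimate two angles from noisy memories, given their decoded order.

    Candidate angle pairs are drawn around the noisy memories (Gaussian
    likelihood, flat prior over angles), and only pairs consistent with
    the high-level ordinal decision are kept. The averages of the kept
    pairs approximate the posterior means. All numeric defaults are
    illustrative assumptions.
    """
    rng = random.Random(seed)
    kept1, kept2 = [], []
    for _ in range(samples):
        angle1 = rng.gauss(memory1, noise_sd)
        angle2 = rng.gauss(memory2, noise_sd)
        # The ordinal relationship acts as the prior: discard candidate
        # pairs that contradict the already-decoded order.
        if (angle1 < angle2) == first_is_smaller:
            kept1.append(angle1)
            kept2.append(angle2)
    if not kept1:
        raise ValueError("no samples were consistent with the ordinal prior")
    return sum(kept1) / len(kept1), sum(kept2) / len(kept2)


if __name__ == "__main__":
    # Noisy memories that happen to be in the wrong order (54 vs. 51 degrees),
    # while the decoded relationship says the first line has the smaller angle.
    est1, est2 = decode_with_order_prior(54.0, 51.0, first_is_smaller=True)
    print(f"Estimated angles: {est1:.1f} and {est2:.1f} degrees")
```

Because every retained pair respects the decoded order, the two estimates can never come out reversed, and each estimate is shifted by the other line's memory. That mutual shift is one simple way to picture the interaction between the two reported angles described earlier.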

In the future, the researchers plan to extend their work beyond these simple tasks of perception and into studies of long-term memory, which could have broad implications, from how we assess a presidential candidate to whether a witness is offering reliable testimony.

"The work will help to explain the brain's underlying cognitive processes that we employ every day," said Dr. Qian. "It might also help to explain complex disorders of cognition, such as autism, where people tend to overly focus on details while missing important context."

This paper is titled: "Visual perception as retrospective Bayesian decoding from high- to low-level features."

More information: Stephanie Ding et al., "Visual perception as retrospective Bayesian decoding from high- to low-level features," PNAS (2017). www.pnas.org/cgi/doi/10.1073/pnas.1706906114