Brain-machine interface lets monkeys control two virtual arms

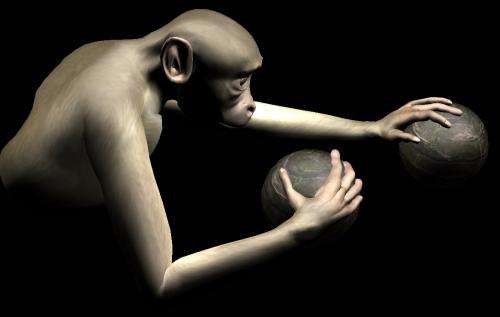

In a study led by Duke researchers, monkeys have learned to control the movement of both arms on an avatar using just their brain activity.

The findings, published Nov. 6, 2013, in the journal Science Translational Medicine, advance efforts to develop bilateral movement in brain-controlled prosthetic devices for severely paralyzed patients.

To enable the monkeys to control two virtual arms, researchers recorded nearly 500 neurons from multiple areas in both cerebral hemispheres of the animals' brains, the largest number of neurons recorded and reported to date.

Millions of people worldwide suffer from sensory and motor deficits caused by spinal cord injuries. Researchers are working to develop tools to help restore their mobility and sense of touch by connecting their brains with assistive devices. The brain-machine interface approach, pioneered at the Duke University Center for Neuroengineering in the early 2000s, holds promise for reaching this goal. However, until now brain-machine interfaces could only control a single prosthetic limb.

"Bimanual movements in our daily activities—from typing on a keyboard to opening a can—are critically important," said senior author Miguel Nicolelis, M.D., Ph.D., professor of neurobiology at Duke University School of Medicine. "Future brain-machine interfaces aimed at restoring mobility in humans will have to incorporate multiple limbs to greatly benefit severely paralyzed patients."

Nicolelis and his colleagues studied large-scale cortical recordings to see if they could provide sufficient signals to brain-machine interfaces to accurately control bimanual movements.

The monkeys were trained in a virtual environment in which they viewed realistic avatar arms on a screen and were encouraged to place the virtual hands on specific targets in a bimanual motor task. They first learned to control the avatar arms using a pair of joysticks, and then learned to move both avatar arms using only their brain activity, without moving their own arms.

As the animals' performance in controlling both virtual arms improved over time, the researchers observed widespread plasticity in cortical areas of their brains. These results suggest that the monkeys' brains may incorporate the avatar arms into their internal image of their bodies, a finding recently reported by the same researchers in the journal Proceedings of the National Academy of Sciences.

The researchers also found that cortical regions showed specific patterns of neuronal electrical activity during bimanual movements that differed from the neuronal activity produced for moving each arm separately.

The study suggests that very large neuronal ensembles—not single neurons—define the underlying physiological unit of normal motor functions. Small neuronal samples of the cortex may be insufficient to control complex motor behaviors using a brain-machine interface.

"When we looked at the properties of individual neurons, or of whole populations of cortical cells, we noticed that simply summing up the neuronal activity correlated to movements of the right and left arms did not allow us to predict what the same individual neurons or neuronal populations would do when both arms were engaged together in a bimanual task," Nicolelis said. "This finding points to an emergent brain property—a non-linear summation—for when both hands are engaged at once."
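The summation test Nicolelis describes can be pictured with a minimal Python sketch. It is an illustration only, not the study's analysis: the firing rates are made-up numbers, and the function names, the baseline-plus-modulation model, and the `interaction` term are all assumptions introduced here. The sketch predicts each neuron's bimanual firing rate by adding its left-arm and right-arm modulations to a resting baseline, then measures how far an observed bimanual rate departs from that prediction.

```python
import numpy as np


def predict_bimanual_by_summation(baseline, left_only, right_only):
    """Predict bimanual rates by adding each arm's modulation to baseline."""
    return baseline + (left_only - baseline) + (right_only - baseline)


def summation_residual(baseline, left_only, right_only, bimanual):
    """Per-neuron gap between observed bimanual rates and the summed prediction."""
    predicted = predict_bimanual_by_summation(baseline, left_only, right_only)
    return bimanual - predicted


# Synthetic, made-up firing rates (spikes/s) for four hypothetical neurons.
baseline = np.array([10.0, 12.0, 8.0, 15.0])
left_only = np.array([14.0, 12.0, 9.0, 20.0])
right_only = np.array([11.0, 18.0, 8.0, 17.0])

# Case 1: a purely additive population, where summation predicts perfectly.
additive_bimanual = predict_bimanual_by_summation(baseline, left_only, right_only)

# Case 2: a made-up interaction term stands in for a non-additive effect.
interaction = np.array([-3.0, 2.0, 4.0, -5.0])
nonlinear_bimanual = additive_bimanual + interaction

print(summation_residual(baseline, left_only, right_only, additive_bimanual))
print(summation_residual(baseline, left_only, right_only, nonlinear_bimanual))
```

In the additive case the residual is zero for every neuron. In the second case the residual equals the interaction term, which is the kind of departure from simple summation the quote refers to.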

Nicolelis is incorporating the study's findings into the Walk Again Project, an international collaboration working to build a brain-controlled neuroprosthetic device. The Walk Again Project plans to demonstrate its first brain-controlled exoskeleton, which is currently being developed, during the opening ceremony of the 2014 FIFA World Cup.

More information: "A Brain-Machine Interface Enables Bimanual Arm Movements in Monkeys," by P.J. Ifft; S. Shokur et al. Science Translational Medicine, 2013.